Meta's Segment Anything Model 2 (SAM 2): Advancing Image and Video Segmentation

Meta's Segment Anything Model (SAM) has made significant advancements in image segmentation, the task of identifying which pixels in an image belong to a specific object. This technology has proven beneficial for various applications, including scientific image analysis and photo editing [1]. SAM's capabilities have inspired new AI-enabled image editing tools within Meta's apps, such as Backdrop and Cutouts on Instagram. Beyond consumer applications, SAM has found use in diverse fields: in marine science, where it segments sonar images for coral reef analysis; in disaster relief, where it supports satellite imagery analysis; and in medicine, where it assists in segmenting cellular images and detecting skin cancer [2].
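To make the idea of "which pixels belong to an object" concrete, the following is a minimal toy sketch in Python. It is not SAM and does not use any Meta API: the tiny image, the `threshold_mask` helper, and the brightness cutoff are all hypothetical, and simple thresholding stands in for the learned model purely to show what a segmentation mask looks like as data.

```python
# Toy illustration only: this is NOT SAM and uses no Meta API.
# A segmentation mask records, for every pixel, whether it belongs to the object.

# Hypothetical 4x5 grayscale image (values 0-255); the bright 2x2 block is the "object".
image = [
    [10, 12, 11, 9, 10],
    [11, 200, 210, 12, 10],
    [10, 205, 198, 11, 9],
    [9, 10, 12, 10, 11],
]


def threshold_mask(img, cutoff=128):
    """Return a binary mask: True where a pixel is brighter than the cutoff.

    Thresholding is an assumed stand-in for a real segmentation model.
    """
    return [[pixel > cutoff for pixel in row] for row in img]


def mask_area(mask):
    """Count the pixels marked as belonging to the object."""
    return sum(sum(row) for row in mask)


mask = threshold_mask(image)

# (row, column) coordinates of every pixel assigned to the object.
object_pixels = [
    (r, c)
    for r, row in enumerate(mask)
    for c, inside in enumerate(row)
    if inside
]

print("Object pixels:", object_pixels)
print("Mask area:", mask_area(mask))
```

A model such as SAM produces the same kind of per-pixel mask, but for arbitrary objects selected by a user prompt rather than by a fixed brightness rule.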

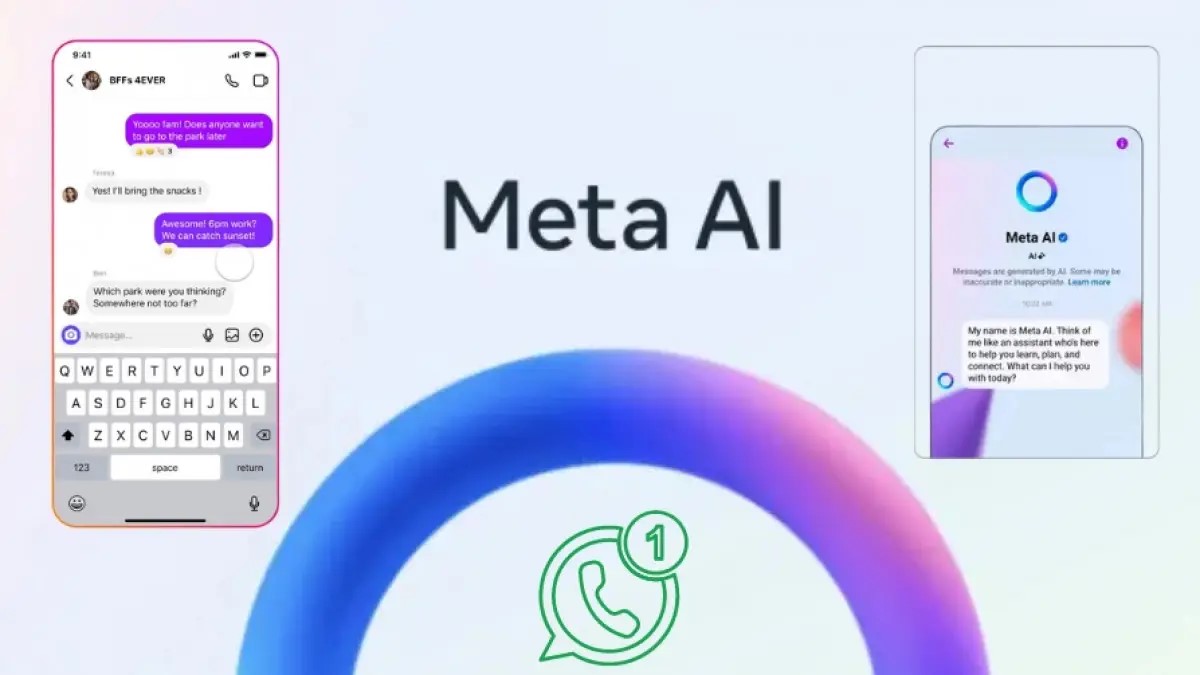

Figure 1. SAM 2 Object Identification. (Credit: Meta)

Building on the success of SAM, Meta has introduced the Segment Anything Model 2 (SAM 2), which extends these segmentation capabilities to video. SAM 2 can segment any object in an image or video and consistently track it across all video frames in real time. This marks a significant improvement over existing models, which have struggled with the complexities of video segmentation, such as fast-moving objects, changes in appearance, and occlusions. Meta has addressed these challenges in the development of SAM 2. Figure 1 shows SAM 2 object identification in a single video frame [3].

SAM 2 opens up new possibilities for easier video editing and generation, as well as new experiences in mixed reality. It can be used to track target objects in videos, which speeds up the annotation of visual data for training computer vision systems, such as those used in autonomous vehicles. Additionally, SAM 2 enables creative ways to select and interact with objects in real time or in live videos.

In line with Meta's commitment to open science, the company is sharing its research on SAM 2 to encourage further exploration of its capabilities and potential use cases. Meta is eager to see how the AI community will leverage this research to drive innovation in various fields.

Source: Meta

References:

[1] https://www.gadgets360.com/ai/news/meta-segment-anything-model-2-sam2-pixel-identification-images-videos-ai-model-released-6221518

[2] https://ai.meta.com/blog/segment-anything-2/

[3] https://www.neowin.net/news/meta-introduces-sam-2-its-next-generation-segment-anything-model-for-videos-and-images/

Cite this article:

Hana M (2024), Meta's Segment Anything Model 2 (SAM 2): Advancing Image and Video Segmentation, AnaTechMaz, pp. 174