Techniques Of Dimensionality Reduction Method

The number of input features, variables, or columns in a dataset is known as its dimensionality, and the process of reducing the number of these features is called dimensionality reduction.

In many cases a dataset contains a very large number of input features, which makes the predictive modeling task more complicated [1]. Because it is difficult to visualize a training dataset with many features or to make predictions from it, dimensionality reduction techniques are needed in such cases.

Dimensionality reduction is commonly used in fields that deal with high-dimensional data, such as speech recognition, signal processing, and bioinformatics. It can also be used for data visualization, noise reduction, and cluster analysis.

Benefits of Dimension Reduction

Let’s look at the benefits of applying dimension reduction:

- It helps compress the data and reduces the storage space required.

- It shortens the time required to perform the same computations. Fewer dimensions mean less computation, and they can also allow the use of algorithms that are unfit for a large number of dimensions.

- It takes care of multicollinearity, which improves model performance, by removing redundant features [2]. For example, there is no point in storing the same value in two different units (meters and inches).

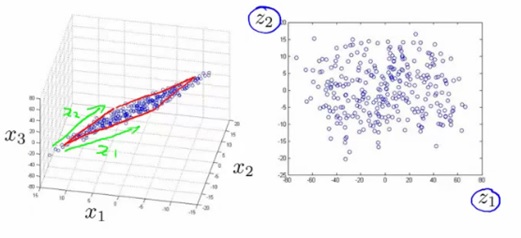

- Reducing the dimensions of data to 2D or 3D may allow us to plot and visualize it precisely, so that patterns can be observed more clearly. The accompanying figure shows how 3D data is converted into 2D: first the 2D plane is identified, then the points are represented on the two new axes, z1 and z2.
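The 3D-to-2D conversion described in the last point can be sketched in a few lines. The article does not name the technique behind the figure, so this example assumes principal component analysis (PCA) computed with NumPy's SVD. The function name `project_to_2d` and the synthetic data are hypothetical and only serve the illustration.

```python
import numpy as np

def project_to_2d(points):
    """Project n x 3 points onto the best-fitting 2D plane (PCA via SVD)."""
    # Center the data so the plane passes through the mean of the points.
    centered = points - points.mean(axis=0)
    # Rows of vt are the principal directions, ordered by explained variance.
    _, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
    plane_axes = vt[:2]              # the two new axes, z1 and z2
    z = centered @ plane_axes.T      # coordinates of each point on (z1, z2)
    explained = singular_values**2 / np.sum(singular_values**2)
    return z, explained

# Hypothetical data: points lying close to a plane embedded in 3D.
rng = np.random.default_rng(0)
plane_coords = rng.normal(size=(200, 2))
basis = np.array([[1.0, 0.0, 0.5],
                  [0.0, 1.0, -0.5]])
points = plane_coords @ basis + 0.01 * rng.normal(size=(200, 3))

z, explained = project_to_2d(points)
print(z.shape)                # (200, 2): one (z1, z2) pair per point
print(explained[:2].sum())    # share of variance kept by the 2D plane
```

Because the synthetic points sit almost exactly on a plane, the two retained axes keep nearly all of the variance. On real data the retained share would depend on how close the points are to a plane.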

Wrapper Methods: The wrapper method has the same goal as the filter method, but it uses a machine learning model for its evaluation. In this method, a subset of features is fed to the ML model and its performance is evaluated. The performance decides whether those features are added or removed to increase the accuracy of the model. This method is more accurate than the filter method but more complex to work with. Some common techniques of wrapper methods (see Figure 1) are:

- Forward Selection

- Backward Selection

- Bi-directional Elimination
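As an illustration of the wrapper idea, here is a minimal sketch of forward selection. It assumes a plain least-squares linear model as the evaluating ML model and a hold-out validation set for scoring. The helper names, the stopping tolerance `tol`, and the synthetic data are hypothetical choices, not something the article prescribes.

```python
import numpy as np

def validation_error(X_train, y_train, X_val, y_val, features):
    """Fit least squares on the chosen columns and return validation MSE."""
    cols = np.asarray(features, dtype=int)
    # Prepend a column of ones so the model has an intercept.
    A_train = np.column_stack([np.ones(len(X_train)), X_train[:, cols]])
    A_val = np.column_stack([np.ones(len(X_val)), X_val[:, cols]])
    coef, *_ = np.linalg.lstsq(A_train, y_train, rcond=None)
    return float(np.mean((A_val @ coef - y_val) ** 2))

def forward_selection(X_train, y_train, X_val, y_val, tol=1e-3):
    """Greedily add the feature that lowers validation error the most."""
    selected = []
    remaining = list(range(X_train.shape[1]))
    # Baseline: intercept-only model with no features.
    best_err = validation_error(X_train, y_train, X_val, y_val, selected)
    while remaining:
        errs = {j: validation_error(X_train, y_train, X_val, y_val,
                                    selected + [j])
                for j in remaining}
        best_j = min(errs, key=errs.get)
        # Stop when the best candidate no longer improves the model enough.
        if best_err - errs[best_j] < tol:
            break
        selected.append(best_j)
        remaining.remove(best_j)
        best_err = errs[best_j]
    return selected, best_err

# Hypothetical data: only features 0 and 2 influence the target.
rng = np.random.default_rng(0)
X = rng.normal(size=(300, 5))
y = 3.0 * X[:, 0] - 2.0 * X[:, 2] + 0.1 * rng.normal(size=300)
X_train, X_val = X[:200], X[200:]
y_train, y_val = y[:200], y[200:]

selected, err = forward_selection(X_train, y_train, X_val, y_val)
print(selected, err)
```

Backward selection follows the same pattern in reverse: start with all features and repeatedly drop the one whose removal hurts validation error the least.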

Embedded Methods: Embedded methods examine the different training iterations of the machine learning model [3] and evaluate the importance of each feature. Some common techniques of embedded methods are:

- LASSO

- Elastic Net

- Ridge Regression, etc.
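To show how an embedded method evaluates features during training, the sketch below fits LASSO by coordinate descent. It assumes the objective (1/(2n))·||y − Xw||² + alpha·||w||₁ with centered data and no intercept. The function names, the value of `alpha`, and the synthetic data are illustrative assumptions.

```python
import numpy as np

def soft_threshold(value, threshold):
    """Shrink value toward zero by threshold; return 0 if it is smaller."""
    return np.sign(value) * max(abs(value) - threshold, 0.0)

def lasso_coordinate_descent(X, y, alpha, n_iters=200):
    """Minimize (1/(2n)) * ||y - Xw||^2 + alpha * ||w||_1 one weight at a time."""
    n_samples, n_features = X.shape
    w = np.zeros(n_features)
    col_scale = (X ** 2).sum(axis=0) / n_samples
    for _ in range(n_iters):
        for j in range(n_features):
            # Residual with feature j's current contribution added back.
            partial_residual = y - X @ w + X[:, j] * w[j]
            rho = X[:, j] @ partial_residual / n_samples
            # The L1 penalty sets weakly correlated features exactly to zero.
            w[j] = soft_threshold(rho, alpha) / col_scale[j]
    return w

# Hypothetical data: only features 0 and 2 influence the target.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5))
y = 3.0 * X[:, 0] - 2.0 * X[:, 2] + 0.1 * rng.normal(size=200)
X = X - X.mean(axis=0)   # center so that no intercept is needed
y = y - y.mean()

w = lasso_coordinate_descent(X, y, alpha=0.1)
kept = np.flatnonzero(w)  # indices of features with non-zero weight
print(w)
print(kept)
```

Feature selection happens as a side effect of training here: the weights that end up exactly zero mark the features the model discards. The kept weights are slightly shrunk toward zero by the penalty.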

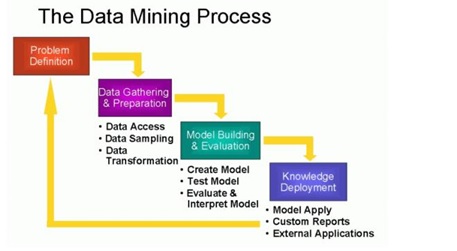

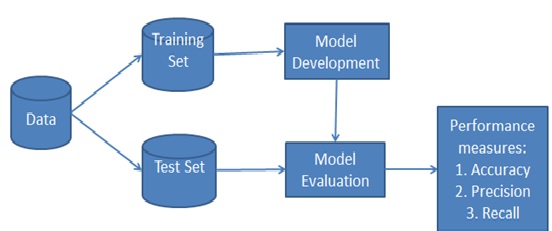

Figure 1: Dimension Reduction method

References:

- https://www.geeksforgeeks.org/dimensionality-reduction

- https://machinelearningmastery.com/dimensionality-reduction

- https://www.javatpoint.com/dimensionality-reduction-technique

Cite this article:

S. Nandhinidwaraka (2021) Techniques of Dimensionality Reduction Method, Anatechmaz, pp. 15