Easier Management for Shape-Shifting Robots

Imagine formless 'slime' robots that change their shape on the fly to accomplish intricate tasks. It sounds like science fiction, yet MIT researchers have developed a machine-learning approach that brings shape-changing soft robots one step closer to reality.

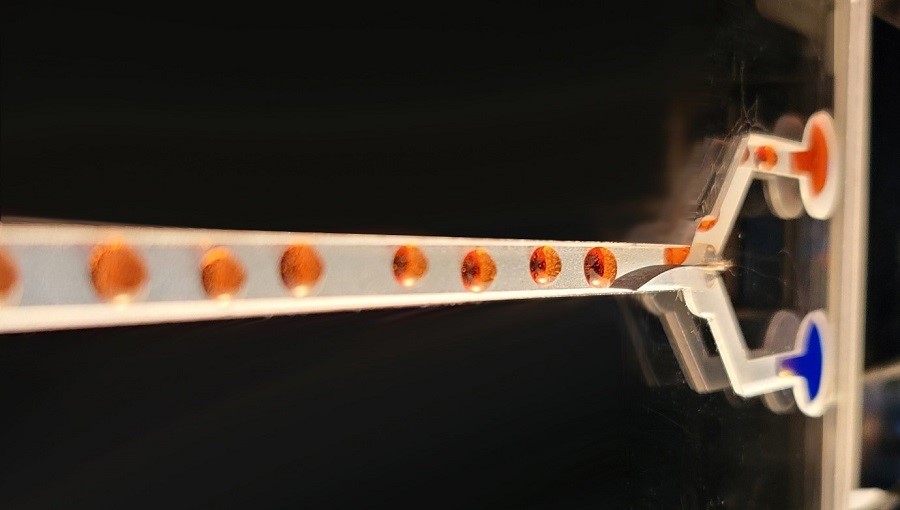

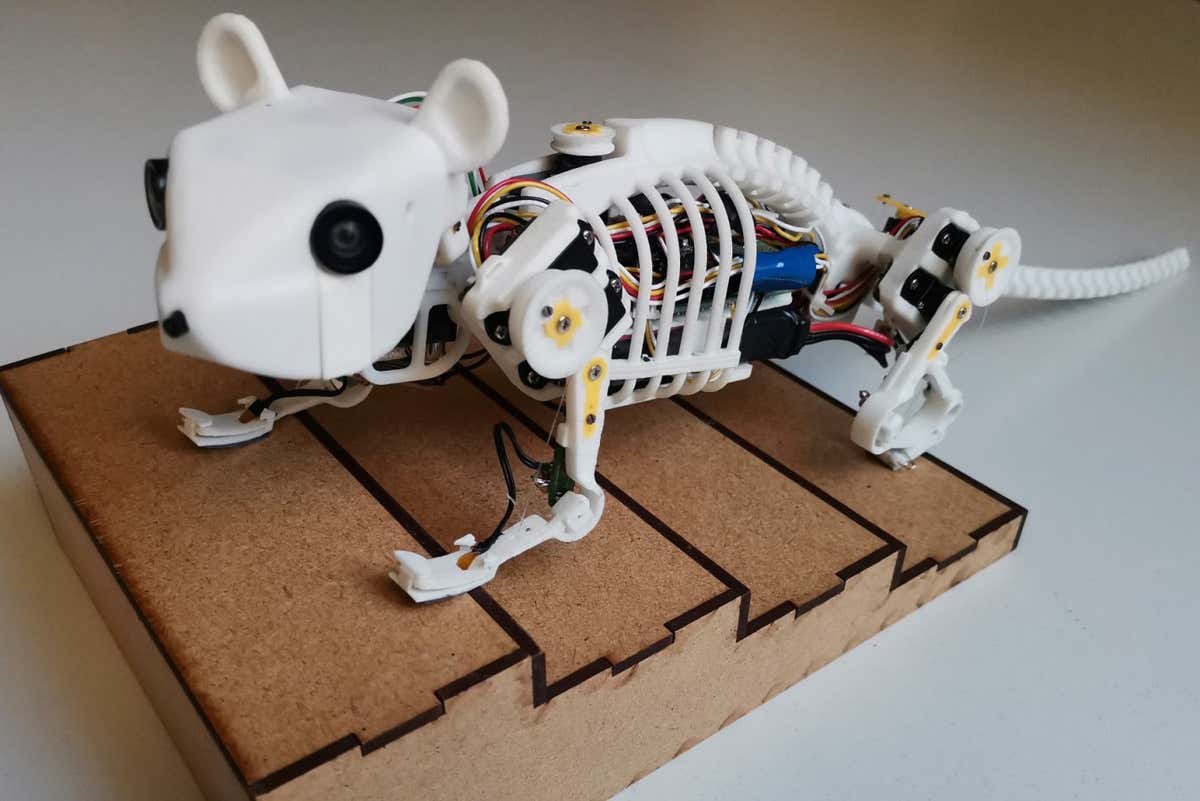

Figure 1. The slime robot can reshape itself as it works, making it exceptionally difficult to control.

In 1991, many people first encountered the idea of a shape-changing robot in the T-1000, the liquid-metal villain of the film Terminator 2: Judgment Day. Since then, and possibly even earlier, many scientists have hoped to build robots that can morph their shape to carry out a wide range of tasks.[1]

Such innovations are now starting to emerge. Examples include the "magnetic turd" developed at the Chinese University of Hong Kong and a liquid metal Lego man that can melt and re-form itself to stage a jailbreak. However, both rely on external magnetic control and cannot move autonomously.

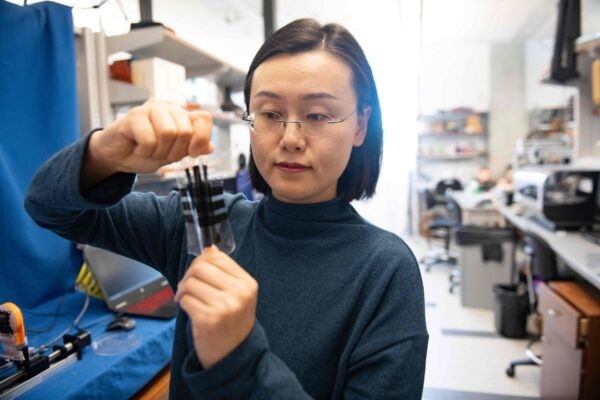

A research group at MIT, by contrast, is working on prototypes that can move independently. The team has devised a machine-learning method that trains and controls a malleable 'slime' robot, enabling it to squeeze, bend, and stretch itself to interact with its surroundings and with external objects. Somewhat disappointingly, the robot is not made of liquid metal.

Boyuan Chen of MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL), a co-author of the study describing the work, remarked, "When people envision soft robots, they typically imagine robots that are elastic and revert to their initial shape. However, our robot resembles slime and possesses the capability to alter its morphology. It's quite remarkable that our approach yielded such successful results, considering we are dealing with a very novel concept."

The researchers had to control a slime robot with no arms, legs, fingers, or skeleton for its muscles to act on. With no fixed locations for muscle actuators, and a body whose shape keeps changing, the robot was extremely difficult to program.

Because standard control schemes were inadequate, the team turned to an AI-driven approach, taking advantage of machine learning's ability to handle complex data. They devised a control algorithm that learns how to move, stretch, and reshape the amorphous robot, sometimes several times over, to accomplish a specific task.

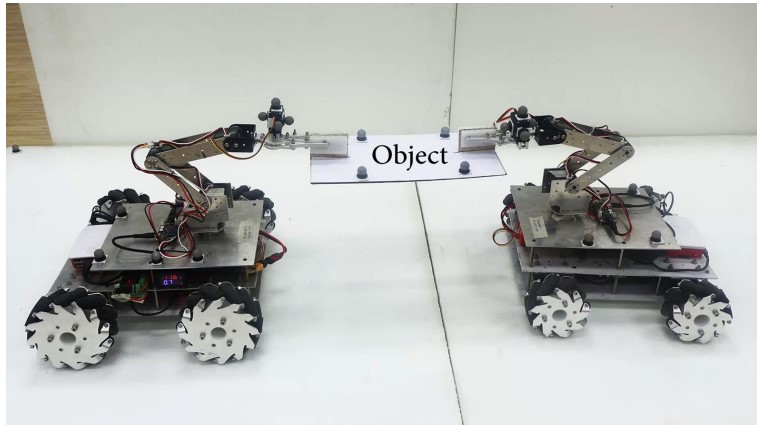

Reinforcement learning is a machine-learning technique that trains software to make decisions through trial and error. It works well for robots with well-defined moving parts, such as a gripper with 'fingers,' whose actions can be rewarded when they bring it closer to a goal like picking up an egg. A shapeless soft robot controlled by magnetic fields is a different matter: as Chen points out, it has a huge number of small muscle elements to control, which makes traditional reinforcement learning very difficult.
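To make "trial and error" concrete, here is a minimal sketch of tabular Q-learning, one standard reinforcement-learning algorithm, on a hypothetical toy task that is not from the study: a gripper slides along a 10-position track and is rewarded for reaching an egg at the far end. The article does not say which algorithm the MIT team used; the task, reward values, and function names below are all assumptions made for illustration.

```python
import random

# Hypothetical toy task (not from the study): a gripper slides along 10 positions
# of a track and is rewarded for reaching the "egg" at the last position.
N_STATES = 10
GOAL = N_STATES - 1
ACTIONS = (-1, +1)  # index 0: move left, index 1: move right


def step(state, action):
    """Apply an action and return (next_state, reward, done)."""
    nxt = min(max(state + action, 0), GOAL)  # walls at both ends of the track
    if nxt == GOAL:
        return nxt, 1.0, True      # reward for reaching the egg
    return nxt, -0.01, False       # small cost per move favors short paths


def train_q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning: refine action-value estimates by trial and error."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Explore a random action with probability epsilon; otherwise exploit.
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))
            else:
                a = max(range(len(ACTIONS)), key=lambda i: q[state][i])
            nxt, reward, done = step(state, ACTIONS[a])
            # Move the estimate toward the reward plus discounted future value.
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][a] += alpha * (target - q[state][a])
            state = nxt
    return q


def greedy_policy(q):
    """Best learned action for every non-goal position."""
    return [ACTIONS[max(range(len(ACTIONS)), key=lambda i: q[s][i])] for s in range(GOAL)]


q_table = train_q_learning()
print(greedy_policy(q_table))  # [1, 1, 1, 1, 1, 1, 1, 1, 1]
```

Here the agent controls just two actions, which is why plain trial and error works; the slime robot's difficulty is that its equivalent of `ACTIONS` would be enormous.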

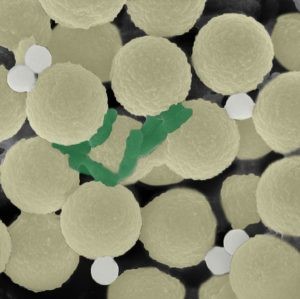

Because a slime robot must move large portions of its body together to change shape in a meaningful way, controlling individual particles one by one would not work. The researchers therefore took an unconventional approach to reinforcement learning.

In reinforcement learning, the set of all actions available to an agent as it interacts with its environment is called the 'action space.' Here, the researchers treated the robot's action space like an image made of pixels: the model used images of the robot's surroundings to build a 2D action space of points laid out on a grid.
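The sketch below illustrates the idea of an image-like action space under stated assumptions: the policy outputs an H × W grid of actuation values (the "action image"), and every particle of the robot is driven by the value of the grid cell it currently occupies. The grid layout, the normalized particle coordinates, and the helper names are hypothetical; the article does not describe the exact mapping used in the study.

```python
from typing import List, Tuple

# Hypothetical layout: the action image is H x W, and each cell holds one
# actuation value (e.g. how strongly material in that cell contracts or expands).
ActionImage = List[List[float]]


def make_action_image(height: int, width: int, fill: float = 0.0) -> ActionImage:
    """Create an action image with every grid point set to `fill`."""
    return [[fill] * width for _ in range(height)]


def actuation_for_particles(action: ActionImage,
                            particles: List[Tuple[float, float]]) -> List[float]:
    """Give each particle the actuation of the grid cell it currently occupies.

    Particle positions (x, y) are assumed normalized to [0, 1] on both axes,
    with y = 0 at the top row of the image.
    """
    h, w = len(action), len(action[0])
    values = []
    for x, y in particles:
        row = min(int(y * h), h - 1)  # clamp so y = 1.0 still maps to the last row
        col = min(int(x * w), w - 1)
        values.append(action[row][col])
    return values


# Usage: a 4 x 4 action image can drive any number of particles.
action = make_action_image(4, 4)
action[0][0] = 1.0  # actuate only the top-left region
particles = [(0.10, 0.10), (0.20, 0.05), (0.90, 0.90)]
print(actuation_for_particles(action, particles))  # [1.0, 1.0, 0.0]
```

The design point is that the size of the action space depends on the grid resolution, not on how many particles make up the robot, so a large blob is still controlled by a manageable number of values.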

Just as nearby pixels in an image are correlated, the researchers' algorithm treated neighboring action points as more strongly related than distant ones. As a result, when the robot changed shape, the action points around its 'arm' moved together, while those around its 'leg' also moved together, but in a different way.
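One simple way to see why spatial correlation matters is to compare independent random actions with smoothed ones. The sketch below is an assumption for illustration, not the study's mechanism: it applies a 3 × 3 box blur to a random action grid so that each point is averaged with its neighbors, then measures how much horizontally adjacent points differ before and after smoothing.

```python
import random
from typing import List

Grid = List[List[float]]


def random_field(h: int, w: int, rng: random.Random) -> Grid:
    """Independent random action values: neighbors are uncorrelated."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(w)] for _ in range(h)]


def box_blur(field: Grid, radius: int = 1) -> Grid:
    """Average each cell with its neighbors (edge cells use only in-bounds cells)."""
    h, w = len(field), len(field[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            total, count = 0.0, 0
            for dr in range(-radius, radius + 1):
                for dc in range(-radius, radius + 1):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < h and 0 <= cc < w:
                        total += field[rr][cc]
                        count += 1
            out[r][c] = total / count
    return out


def mean_neighbor_gap(field: Grid) -> float:
    """Mean absolute difference between horizontally adjacent cells."""
    gaps = [abs(row[c + 1] - row[c]) for row in field for c in range(len(row) - 1)]
    return sum(gaps) / len(gaps)


rng = random.Random(0)
raw = random_field(32, 32, rng)
smooth = box_blur(raw)
# The smoothed grid has a much smaller gap: neighboring points now move together.
print(round(mean_neighbor_gap(raw), 3), round(mean_neighbor_gap(smooth), 3))
```

In the smoothed grid, adjacent points share most of the values they average over, so a region such as an 'arm' tends to receive similar actions, while a distant region such as a 'leg' can still differ.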

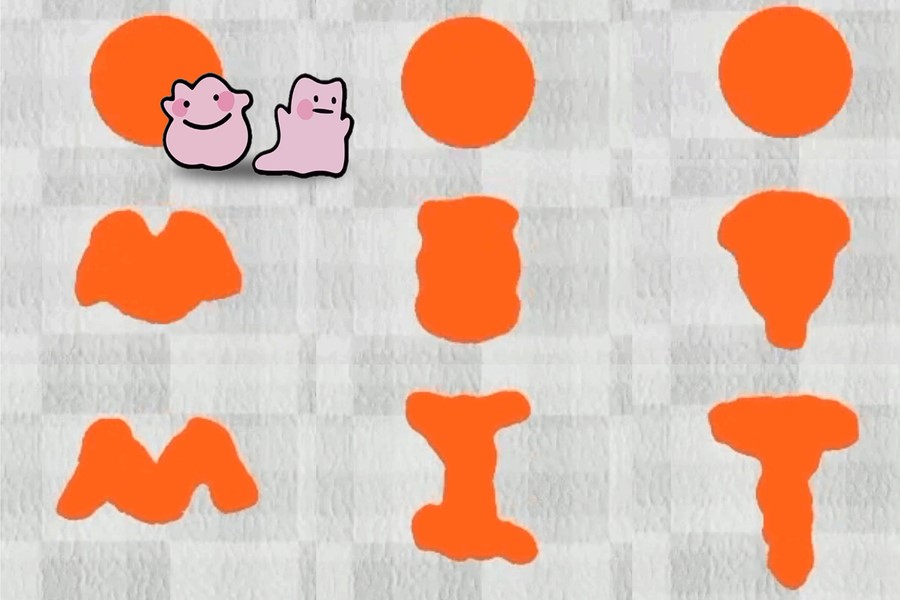

The researchers also built their algorithm around 'coarse-to-fine policy learning.' The algorithm is first trained with a low-resolution (coarse) policy, which lets it explore the action space and identify meaningful action patterns. A higher-resolution (fine) policy then refines the robot's actions so that it can carry out complex tasks more precisely.[2]

"Coarse-to-fine implies that when you enact a random action, that action is expected to yield a noticeable impact," explained Vincent Sitzmann, a co-author of the study also affiliated with CSAIL. "Controlling multiple muscles simultaneously at a coarse level significantly alters the outcome."
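The coarse-to-fine idea can be sketched as follows, under an assumption made purely for illustration: a coarse 4 × 4 action grid is upsampled to a 16 × 16 grid, and the fine policy adds a small per-cell residual on top. Both "policies" here are random stand-ins rather than trained networks, and all names are hypothetical; the article does not specify how the two resolutions are combined in the study.

```python
import random
from typing import List

Grid = List[List[float]]


def upsample_nearest(coarse: Grid, factor: int) -> Grid:
    """Repeat every coarse cell in a factor x factor block (nearest-neighbor upsampling)."""
    rows, cols = len(coarse), len(coarse[0])
    return [[coarse[r // factor][c // factor] for c in range(cols * factor)]
            for r in range(rows * factor)]


def coarse_policy(rng: random.Random, size: int = 4) -> Grid:
    """Hypothetical stand-in for a low-resolution policy: random coarse actions."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(size)] for _ in range(size)]


def fine_residual(rng: random.Random, size: int, scale: float = 0.1) -> Grid:
    """Hypothetical stand-in for a high-resolution policy: small per-cell corrections."""
    return [[rng.uniform(-scale, scale) for _ in range(size)] for _ in range(size)]


def coarse_to_fine_action(rng: random.Random, coarse_size: int = 4, factor: int = 4) -> Grid:
    """Upsample the coarse action, then add the fine policy's residual on top."""
    base = upsample_nearest(coarse_policy(rng, coarse_size), factor)
    residual = fine_residual(rng, size=len(base))
    return [[b + d for b, d in zip(b_row, d_row)] for b_row, d_row in zip(base, residual)]


rng = random.Random(1)
action = coarse_to_fine_action(rng)
print(len(action), len(action[0]))  # 16 16
```

In this sketch, each random coarse value sets the actuation of a whole block of 16 fine cells, which mirrors Sitzmann's point: a random coarse action moves many "muscles" at once and is therefore likely to have a noticeable effect, while the fine residual only adjusts details.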

Next, the team tested the method. They built a simulation environment called DittoGym, with eight tasks that evaluate how well a reconfigurable robot can change its shape. The tasks include having the robot match a letter or symbol, as well as growing, digging, kicking, catching, and running.

"Our task selection in DittoGym adheres to both the generic design principles of reinforcement learning benchmarks and the specific requirements of reconfigurable robots," explained Suning Huang, a study co-author from the Department of Automation at Tsinghua University, China, and a visiting researcher at MIT.

"Each task is meticulously crafted to embody certain essential properties, such as the capacity for long-horizon explorations, environmental analysis, and interaction with external objects," Huang elaborated. "We believe that collectively, these tasks offer users a comprehensive insight into the adaptability of reconfigurable robots and the efficacy of our reinforcement learning approach."
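The article does not explain how DittoGym scores its tasks. As a hypothetical illustration only, a shape-matching task such as matching a letter could be scored with intersection-over-union (IoU) between the grid cells the robot occupies and the cells of the target letter. The grid encoding, the 'T' target, and the function names below are invented for this sketch.

```python
from typing import List

Grid = List[List[int]]  # 1 = cell occupied, 0 = empty


def parse(rows: List[str]) -> Grid:
    """Turn ASCII art ('#' = occupied) into an occupancy grid."""
    return [[1 if ch == "#" else 0 for ch in row] for row in rows]


def iou(robot: Grid, target: Grid) -> float:
    """Intersection-over-union of two same-sized occupancy grids (1.0 = perfect match)."""
    inter = union = 0
    for r_row, t_row in zip(robot, target):
        for r, t in zip(r_row, t_row):
            inter += r & t
            union += r | t
    return inter / union if union else 1.0


TARGET_T = parse([
    "#####",
    "..#..",
    "..#..",
    "..#..",
])
blob = parse([
    ".###.",
    ".###.",
    "..#..",
    ".....",
])
print(iou(blob, TARGET_T))  # 0.5
```

A score like this could serve as a reward that rises as the robot's shape approaches the target, which is the kind of signal reinforcement learning needs.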

The researchers found that their coarse-to-fine algorithm was consistently more efficient than the alternatives (such as coarse-only or fine-from-scratch policies) across all tasks.

Shape-changing robots may take some time to become common outside the laboratory, but this research is a significant step forward. The researchers hope it will encourage others to build their own reconfigurable soft robots, which might one day travel through the human body or be built into wearable devices.

References:

[1] https://newatlas.com/robotics/shape-changing-formless-soft-robot/

[2] https://www.electronicsforu.com/news/simpler-control-for-shape-shifting-robots

Cite this article:

Gokila G (2024), Easier Management for Shape-Shifting Robots, AnaTechMaz, pp. 28