Navigating the Integration of AI in Healthcare: A Framework for Responsible and Ethical Adoption

The promise of artificial intelligence (AI) tools to improve patient care has drawn the attention of health organizations worldwide. However, the path from innovation to practical use in clinical settings has proven complex and inconsistent. In an article published in Patterns, a team of researchers from Carnegie Mellon University, The Hospital for Sick Children, the Dalla Lana School of Public Health, Columbia University, and the University of Toronto presents a comprehensive framework for the responsible use of AI in healthcare.

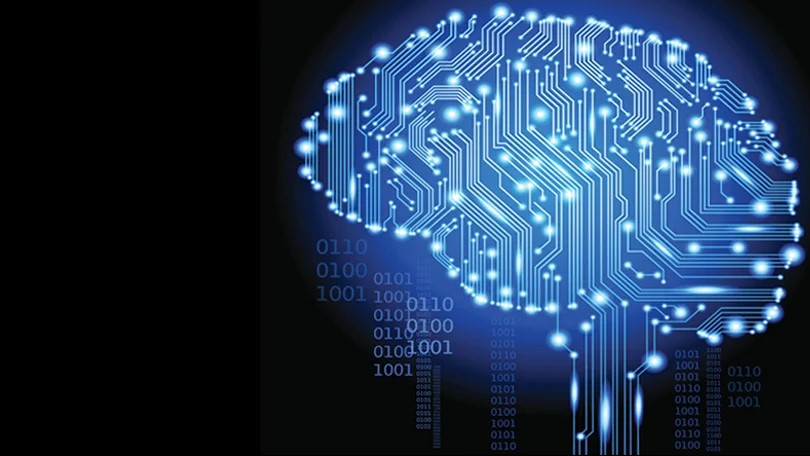

Figure 1. AI in healthcare.

Figure 1 is an illustration of AI in healthcare. The authors, led by Alex John London, K&L Gates Professor of Ethics and Computational Technologies at Carnegie Mellon, recognize the challenges posed by the narrow focus of regulatory guidelines and institutional approaches. These have often centered on the performance metrics of AI tools while neglecting the broader knowledge, practices, and procedures essential for seamlessly integrating these tools into the intricate social systems of medical practice.

London emphasizes the non-neutrality of AI tools, asserting that they inherently reflect the values of their creators. The proposed framework introduces a conceptual shift, treating AI tools as integral components of an "intervention ensemble." This ensemble comprises a holistic set of knowledge, practices, and procedures crucial for delivering optimal care to patients, positioning AI tools as intricate parts of larger sociotechnical systems.

Building on London's prior work, which applied a similar concept to pharmaceuticals and autonomous vehicles, the authors provide a proactive framework that offers practical guidance to designers, funders, and users. This approach aims to ensure the responsible integration of AI systems into healthcare workflows, emphasizing their potential to positively impact patient outcomes.

Unlike previous descriptive approaches that outlined the interaction between AI and human systems, this framework is forward-looking. It serves as a guide for stakeholders and also suggests avenues for regulation, institutional insight, and the responsible and ethical evaluation of AI tools in healthcare settings.

To illustrate the framework's applicability, the authors examine the development of AI systems designed to diagnose diabetic retinopathy that has progressed beyond the mild stage. Melissa McCradden, a bioethicist at the Hospital for Sick Children and Assistant Professor of Clinical and Public Health at the Dalla Lana School of Public Health, emphasizes the framework's potential to bring precision to evaluations. The hope is that this precision will help regulatory bodies obtain the evidence they need to oversee AI systems effectively.

In conclusion, as healthcare organizations continue to explore the potential of AI, this framework provides a roadmap for integrating AI tools ethically, responsibly, and effectively into medical practice, with the ultimate aim of improving patient outcomes and advancing healthcare technology.

Source: Carnegie Mellon University

Cite this article:

Hana M (2023), Navigating the Integration of AI in Healthcare: A Framework for Responsible and Ethical Adoption, AnaTechMaz, p. 345