Revolutionizing Multimedia Communication: NTU Singapore's AI Breakthrough in Realistic Facial Animations

In a recent development, a team of researchers from Nanyang Technological University, Singapore (NTU Singapore), has introduced a computer program that generates realistic facial expressions and head movements in video from just an audio clip and a static face photo.

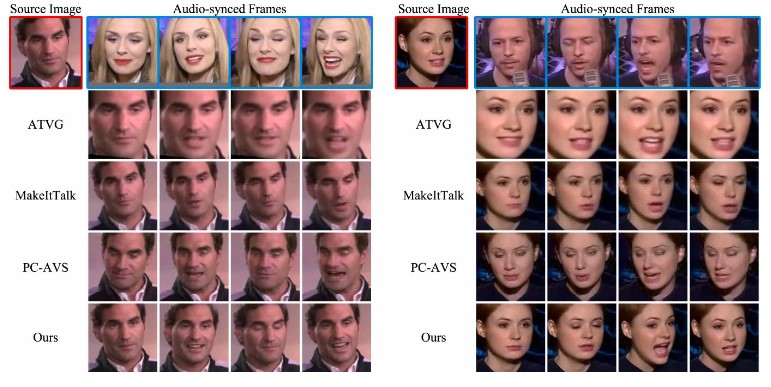

Figure 1. Comparisons of DIRFA with state-of-the-art audio-driven talking face generation approaches.

Dubbed Diverse yet Realistic Facial Animations (DIRFA), this artificial intelligence-based program generates 3D videos of individuals whose facial animations are authentic, consistent, and synchronized with the spoken audio. Unlike existing methods, which struggle with pose variations and emotional nuance, DIRFA takes a significant step forward in creating highly realistic visual content.

The researchers accomplished this feat by training DIRFA on an extensive dataset comprising over one million audiovisual clips from more than 6,000 individuals, sourced from the open-source VoxCeleb2 Dataset. This comprehensive training enabled the program to predict cues from speech and seamlessly associate them with lifelike facial expressions and natural head movements.

The potential applications of DIRFA are vast and extend across various industries, promising innovations in healthcare, virtual assistants, and communication technologies. The program has the capacity to enhance user experiences by enabling more sophisticated and realistic virtual assistants and chatbots. Moreover, it emerges as a powerful tool for individuals with speech or facial disabilities, offering them expressive avatars to convey thoughts and emotions effectively.

Associate Professor Lu Shijian, the corresponding author and leader of the study, envisions a profound impact on multimedia communication, stating, "Our program revolutionizes the realm of multimedia communication by enabling the creation of highly realistic videos of individuals speaking, combining techniques such as AI and machine learning."

First author Dr. Wu Rongliang emphasizes the complexity of the challenge, stating, "Speech exhibits a multitude of variations," and highlights the pioneering efforts of their approach in audio representation learning within AI and machine learning.

Published in the scientific journal Pattern Recognition in August, the study outlines the intricate relationships DIRFA establishes between audio signals and facial animations. The program models the likelihood of specific facial expressions based on input audio, resulting in diverse yet lifelike sequences of facial animations that accurately synchronize with spoken words.
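As a rough illustration of what it means to model the likelihood of facial expressions given audio, the toy Python sketch below samples one expression label per audio frame from a conditional distribution. It is not DIRFA's actual method: the expression labels, the two-number audio features, and the weights are all hypothetical, chosen only to show the idea.

```python
import math
import random

# Hypothetical expression labels; not taken from the DIRFA paper.
EXPRESSIONS = ["neutral", "smile", "raised_brows"]

# Hypothetical hand-picked weights: one row per expression, one column per
# audio feature (imagine something like loudness and pitch, both assumed).
WEIGHTS = [
    [0.0, 0.0],  # neutral
    [1.5, 0.5],  # smile
    [0.2, 2.0],  # raised_brows
]


def expression_probs(audio_features):
    """Return P(expression | audio) as a softmax over linear scores."""
    scores = [sum(w * x for w, x in zip(row, audio_features)) for row in WEIGHTS]
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def sample_sequence(audio_frames, rng):
    """Sample one expression per audio frame from the conditional distribution."""
    return [
        rng.choices(EXPRESSIONS, weights=expression_probs(frame), k=1)[0]
        for frame in audio_frames
    ]


if __name__ == "__main__":
    # Made-up feature values for three audio frames.
    frames = [[0.1, 0.2], [0.9, 0.1], [0.2, 0.8]]
    for seed in (0, 1):
        print(seed, sample_sequence(frames, random.Random(seed)))
```

Because each frame's expression is sampled rather than chosen deterministically, different seeds can produce different sequences for the same audio. That is the sense in which a probabilistic mapping can yield diverse outputs while still depending on the input speech.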

While DIRFA has already demonstrated its capabilities in generating accurate lip movements, vivid facial expressions, and natural head poses, the research team acknowledges the need for further refinement. Ongoing efforts include improving the program's interface to allow users more control over certain outputs and fine-tuning facial expressions with a broader range of datasets.

As NTU Singapore pioneers the convergence of AI and multimedia communication, DIRFA opens new doors for realistic, emotionally nuanced digital interactions, promising a future where technology enhances our ability to connect and communicate.

Source: Nanyang Technological University

Cite this article:

Hana M (2023), Revolutionizing Multimedia Communication: NTU Singapore's AI Breakthrough in Realistic Facial Animations, AnaTechMaz, pp. 334