Robot Masters Surgical Tasks Simply by Watching Videos

While surgeons need years of rigorous training to master their craft, robots may be able to pick up some of those skills far more quickly with today's AI technology. Researchers at Johns Hopkins University (JHU) and Stanford University have trained a robotic surgical system to perform several surgical tasks with human-level proficiency, simply by showing it videos of those procedures.

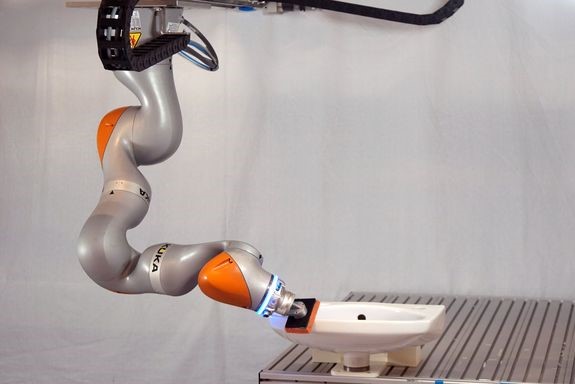

The team used a da Vinci Surgical System for this study. This robotic system, typically controlled remotely by a surgeon, has arms that manipulate instruments for tasks such as dissection, suction, and cutting and sealing vessels. These systems give surgeons enhanced control and precision, along with a closer view of the operating site. The latest version of the system is estimated to cost over US$2 million, not including accessories, sterilizing equipment, or training expenses.

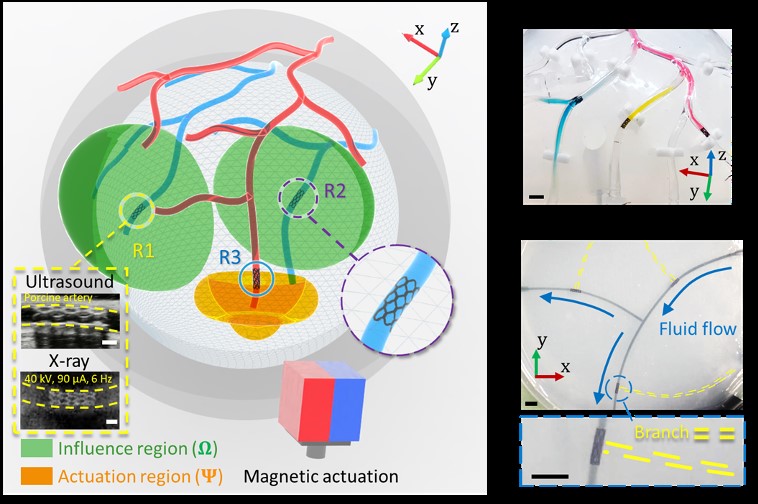

Figure 1. AI-trained Robot System Performs Complex Surgical Tasks with Human-Level Skill

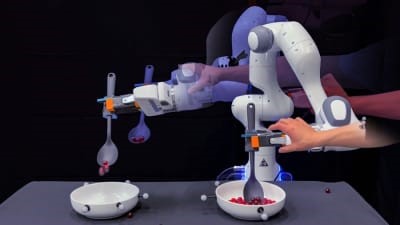

Using a machine learning technique called imitation learning, the team trained a da Vinci Surgical System to autonomously perform three key surgical tasks: manipulating a needle, lifting body tissue, and suturing. Figure 1 shows the AI-trained system carrying out these tasks with human-level skill.

The surgical system not only performed these tasks as well as a human, but also learned to correct its own mistakes. "For example, if it drops the needle, it automatically picks it up and continues. This isn't something I taught it to do," said Axel Krieger, an assistant professor at JHU and co-author of a paper on the team's findings, which was presented at the 2024 Conference on Robot Learning.

The researchers trained an AI model by combining imitation learning with the transformer, the same machine learning architecture that underlies popular chatbots such as ChatGPT. However, whereas chatbots process and produce text, this model outputs kinematics: a description of motion expressed in numbers and equations, which is used to control the surgical system's arms.

The model was trained using hundreds of videos recorded from wrist cameras mounted on the arms of da Vinci robots during surgical procedures.
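To make the idea of imitation learning more concrete, here is a toy sketch of behavior cloning, its simplest form: a policy is fit by ordinary supervised regression on (observation, action) pairs recorded from demonstrations, then used to choose actions on new observations. This is not the team's model. A one-variable least-squares fit stands in for the transformer, and the observation (gripper-to-needle distance in mm), the action (commanded speed in mm/s), and all data values are hypothetical.

```python
# Toy behavior cloning sketch (hypothetical data and variables).
# A linear least-squares fit stands in for the transformer used in the study.

# Hypothetical demonstrations: (gripper-to-needle distance in mm,
# commanded speed in mm/s) pairs, as if recorded from an expert operator.
DEMOS = [(10.0, 5.0), (20.0, 10.0), (4.0, 2.0), (8.0, 4.0)]


def fit_linear_policy(demos):
    """Fit action = w * obs + b by least squares and return the policy."""
    n = len(demos)
    mean_x = sum(obs for obs, _ in demos) / n
    mean_y = sum(act for _, act in demos) / n
    cov = sum((obs - mean_x) * (act - mean_y) for obs, act in demos)
    var = sum((obs - mean_x) ** 2 for obs, _ in demos)
    if var == 0:
        raise ValueError("observations must vary to fit a slope")
    w = cov / var
    b = mean_y - w * mean_x
    # The learned policy maps a new observation to an imitated action.
    return lambda obs: w * obs + b


policy = fit_linear_policy(DEMOS)
print(policy(12.0))  # prints 6.0: the expert's "half the distance" habit
```

The appeal the researchers describe is visible even at this scale: nobody hand-codes the rule "move at half the remaining distance per second"; it is recovered from the demonstrations alone.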

The team believes their model could enable a robot to learn any surgical procedure quickly, offering a much easier alternative to the traditional method of hand-coding each step to control a surgery robot's actions.

According to Krieger, this breakthrough could bring automated surgery closer to reality much sooner than expected. "What’s new here is that we only need to collect imitation learning data for different procedures, and we can train a robot to learn them in just a couple of days," he explained. "This allows us to accelerate towards full autonomy, reducing medical errors and achieving more accurate surgeries." This could be one of the most significant breakthroughs in robot-assisted surgery in recent years.

While automated devices are already used in some complex procedures, such as Corindus's CorPath system for cardiovascular procedures, these typically assist with only specific steps. Krieger also emphasized how slow it is to hand-code each robotic step. "Someone might spend a decade modeling suturing," he noted, "and that's just for one type of surgery."

Previously, in 2022, Krieger's team at JHU demonstrated the Smart Tissue Autonomous Robot (STAR). Guided by a three-dimensional endoscope and a machine learning tracking algorithm, STAR successfully sutured together the ends of a pig's intestine without human intervention.

Now, the JHU researchers are working on training a robot to perform an entire surgery using their imitation learning method. While it will likely take years before robots can fully replace surgeons, innovations like these could make complex treatments safer and more accessible to patients worldwide.

Source: Johns Hopkins University

Cite this article:

Janani R (2024), Robot Masters Surgical Tasks Simply by Watching Videos, AnaTechMaz, pp. 99