Instructing A Robot on Its Boundaries to Perform Open-Ended Tasks Safely

The "PRoC3S" method assists a large language model (LLM) in developing a feasible action plan by testing each step through simulation. This approach could eventually help in-home robots tackle more ambiguous household tasks.

When someone tells you to "know your limits," they're typically advising moderation, such as not overdoing exercise. For a robot, though, the advice means something different: learning the boundaries and limitations of a task within its environment so that it can perform chores safely and effectively.

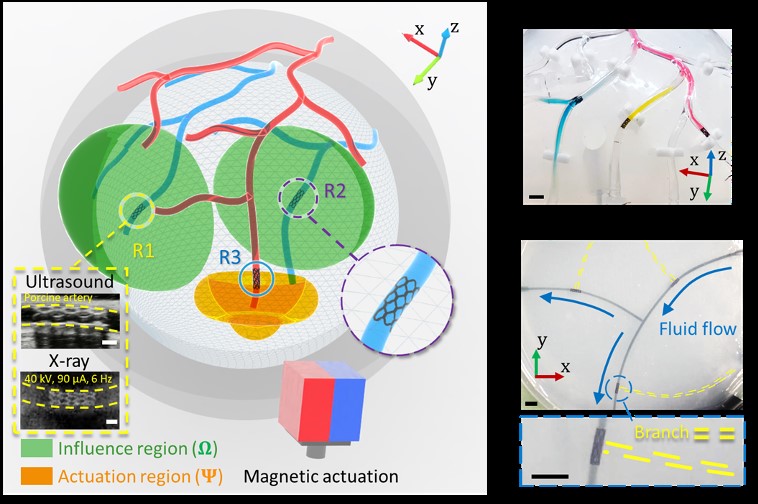

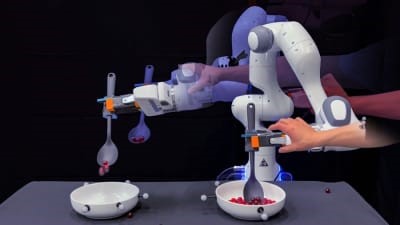

Figure 1. Teaching Robots Their Limits for Safe, Open-Ended Tasks

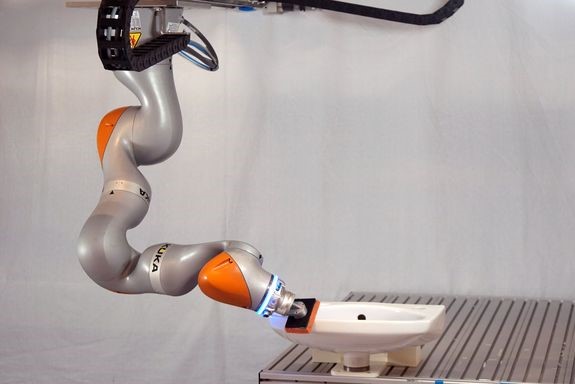

Consider a scenario where you ask a robot to clean your kitchen, but the robot doesn’t understand the physical dynamics of its environment. How can it develop a practical, multistep plan to leave the room spotless? Large language models (LLMs) can get it close, but they are trained primarily on text and may miss crucial physical constraints, such as the robot's reach or the need to navigate around obstacles. Relying solely on an LLM might leave you with a bigger mess, like pasta stains spread across your floor. Figure 1 illustrates the idea of teaching robots their limits for safe, open-ended tasks.

To help robots perform open-ended tasks, researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have developed a method that uses vision models to understand the robot's surroundings and assess its limitations. In their approach, an LLM drafts a plan for the robot, which is then tested in a simulator to verify that it is feasible and safe. If the plan cannot be executed, the LLM revises it, and the cycle repeats until a viable action plan emerges.

This trial-and-error process, named "Planning for Robots via Code for Continuous Constraint Satisfaction" (PRoC3S), ensures that long-term plans satisfy all constraints and helps robots carry out tasks like writing letters, drawing shapes, or sorting and placing blocks. Looking ahead, PRoC3S could enable robots to perform more complex chores in dynamic environments such as homes, where tasks like "make me breakfast" involve a series of steps that need to be executed safely and effectively.
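To make the propose, simulate, revise cycle concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the researchers' implementation: `propose_plan` is a hypothetical stand-in for the LLM call, `simulate` is a toy geometric check standing in for a physics simulator, and the reach limit and obstacle position are invented values.

```python
import math
from dataclasses import dataclass

# All constants below are assumptions for illustration only.
MAX_REACH = 1.0               # assumed arm reach, in metres
OBSTACLE = (0.6, 0.0, 0.1)    # assumed obstacle: centre x, centre y, radius


@dataclass
class Step:
    action: str
    x: float
    y: float


def propose_plan(task, feedback_history):
    """Hypothetical stand-in for an LLM call.

    A real system would send the task and the simulator feedback to a
    language model. This stub ignores the task text and simply halves the
    block spacing after every failed round, so the loop has something to
    revise.
    """
    spacing = 0.6 * (0.5 ** len(feedback_history))
    return [Step("place_block", spacing * (i + 1), 0.0) for i in range(3)]


def simulate(plan):
    """Toy feasibility check; returns a list of constraint violations."""
    ox, oy, radius = OBSTACLE
    violations = []
    for i, step in enumerate(plan):
        # Constraint 1: the target must lie within the arm's reach.
        if math.hypot(step.x, step.y) > MAX_REACH:
            violations.append(f"step {i}: target is out of reach")
        # Constraint 2: the target must not overlap the obstacle.
        if math.hypot(step.x - ox, step.y - oy) < radius:
            violations.append(f"step {i}: target collides with obstacle")
    return violations


def plan_with_feedback(task, max_rounds=5):
    """Propose, simulate, and revise until a plan passes or rounds run out."""
    feedback_history = []
    for round_number in range(1, max_rounds + 1):
        plan = propose_plan(task, feedback_history)
        violations = simulate(plan)
        if not violations:
            return plan, round_number   # feasible plan found
        feedback_history.append(violations)
    return None, max_rounds             # no feasible plan within the budget
```

In this toy setup, calling `plan_with_feedback("place three blocks in a line")` fails the first round on both reach and collision, still collides with the obstacle in the second round, and passes in the third, so it returns a feasible plan after three rounds.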

“LLMs and traditional robotics systems, such as task and motion planners, can’t handle these kinds of tasks alone, but together, they enable open-ended problem-solving,” says PhD student Nishanth Kumar SM ’24, co-lead author of a new paper on PRoC3S. “By simulating the robot’s environment in real-time and testing multiple action plans, we use vision models to create a realistic digital representation, allowing the robot to evaluate the feasibility of each action step in a long-term plan.”

The team’s research was presented at the Conference on Robot Learning (CoRL) in Munich, Germany, earlier this month.

The researchers used a pre-trained LLM to generate task plans for robots, first giving it a simple example task (like drawing a square) related to the target task (drawing a star). In simulation, PRoC3S completed tasks such as drawing stars, stacking blocks, and placing items accurately, outperforming comparable methods such as “LLM3” and “Code as Policies.” The approach was also tested in the real world, with a robotic arm executing tasks like placing blocks in lines and matching colored blocks. These results suggest that LLMs can generate safer, more reliable plans for robots, potentially enabling them to handle chores in dynamic environments, like bringing you a snack.

For future work, the researchers plan to enhance their results using a more advanced physics simulator and scale up to more complex tasks with improved data-search techniques. They also aim to apply PRoC3S to mobile robots, like quadrupeds, for tasks such as walking and scanning their surroundings.

Eric Rosen, a researcher at the AI Institute who was not involved in the project, notes that using foundation models like ChatGPT to control robots can lead to unsafe actions. PRoC3S addresses this by combining high-level guidance from foundation models with AI techniques that explicitly reason about the world, which helps keep the robot's actions safe and reliable. This combination of planning-based and data-driven methods may prove crucial for developing robots that can perform a wider range of tasks.

The work, supported by organizations including the National Science Foundation (NSF) and the MIT Quest for Intelligence, was led by Kumar and co-lead author Aidan Curtis, with CSAIL co-authors Jing Cao, Leslie Pack Kaelbling, and Tomás Lozano-Pérez.

Source: MIT News

Cite this article:

Janani R (2024), Instructing A Robot on Its Boundaries to Perform Open-Ended Tasks Safely, AnaTechMaz, pp. 100