Artificial Intelligence Won't Result in Human Extinction

Yes, the fast-growing technology could be dangerous—but A.I. doomers are focused on the wrong threat.

Geoffrey Hinton is a legendary computer scientist whose work laid the foundation for today's artificial intelligence technology. He was a co-author of two of the most influential A.I. papers: a 1986 paper describing a foundational technique (called backpropagation) that is still used to train deep neural networks, and a 2012 paper demonstrating that deep neural networks could be shockingly good at recognizing images.

Figure 1: Artificial Intelligence Won't Result in Human Extinction

Figure 1 illustrates the subject of this article, Artificial Intelligence Won't Result in Human Extinction. Hinton's 2012 paper helped to spark the deep learning boom of the last decade. Google hired the paper's authors in 2013, and Hinton had been helping Google develop its A.I. technology ever since. But last week Hinton quit Google so he could speak freely about his fears that A.I. systems would soon become smarter than us and gain the power to enslave or kill us.

“There are very few examples of a more intelligent thing being controlled by a less intelligent thing,” Hinton said in an interview on CNN last week.[1]

This is not the first time humanity has stared down the possibility of extinction due to its technological creations. But the threat of AI is very different from the nuclear weapons we've learned to live with. Nukes can't think. They can't lie, deceive or manipulate. They can't plan and execute. Somebody has to push the big red button.

Why would a superintelligent AI kill us all?

Are these machines not designed and trained to serve and respect us? Sure they are. But nobody sat down and wrote the code for GPT-4; it simply wouldn't be possible. OpenAI instead created a neural network, a learning structure loosely inspired by the way the human brain connects concepts. It worked with Microsoft Azure to build the hardware to run it, then fed it billions and billions of bits of human text and let GPT effectively program itself.
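To make the idea of a model that "programs itself" a little more concrete, here is a minimal sketch in Python. It is a toy, not how GPT-4 is built: it treats single characters as tokens and learns one small weight matrix (`W`) instead of a deep transformer network with billions of parameters, and the function names (`train_bigram`, `avg_loss`) and settings are hypothetical. The basic principle is similar, though: the weights start out random, and gradient descent on a next-token prediction task, rather than a programmer, decides their final values.

```python
import math
import random


def avg_loss(W, pairs):
    """Mean cross-entropy of predicting each next token from the current one."""
    total = 0.0
    for i, j in pairs:
        row = W[i]
        m = max(row)  # subtract the max for numerical stability
        z = sum(math.exp(v - m) for v in row)
        total += -((row[j] - m) - math.log(z))  # -log softmax(row)[j]
    return total / len(pairs)


def train_bigram(text, steps=200, lr=1.0, seed=0):
    """Learn W, where W[i][k] scores how likely token k is to follow token i.

    Hypothetical toy: characters stand in for tokens, one matrix stands in
    for a whole network. No entry of W is written by hand.
    """
    vocab = sorted(set(text))
    idx = {ch: i for i, ch in enumerate(vocab)}
    n = len(vocab)
    rng = random.Random(seed)
    # Start from small random weights: no rule is built in.
    W = [[rng.uniform(-0.1, 0.1) for _ in range(n)] for _ in range(n)]
    pairs = [(idx[a], idx[b]) for a, b in zip(text, text[1:])]
    before = avg_loss(W, pairs)
    for _ in range(steps):
        grad = [[0.0] * n for _ in range(n)]
        for i, j in pairs:
            row = W[i]
            m = max(row)
            exps = [math.exp(v - m) for v in row]
            z = sum(exps)
            for k in range(n):
                # d(loss)/d(logit k) = predicted probability of k, minus 1 for the true next token
                grad[i][k] += exps[k] / z - (1.0 if k == j else 0.0)
        # Gradient descent step: nudge every weight to reduce the average loss.
        for i in range(n):
            for k in range(n):
                W[i][k] -= lr * grad[i][k] / len(pairs)
    return vocab, W, before, avg_loss(W, pairs)


vocab, W, before, after = train_bigram("the cat sat on the mat")
print(f"loss before: {before:.3f}, after: {after:.3f}")
# The learned "program" is nothing but rows of decimals:
print([round(w, 2) for w in W[vocab.index("t")]])
```

Running it shows the loss falling as the weights adjust, while printing a row of `W` yields only a list of decimals. Even in this ten-character toy, nothing in those numbers reads like a rule a person wrote, which hints at why far larger matrices resist inspection.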

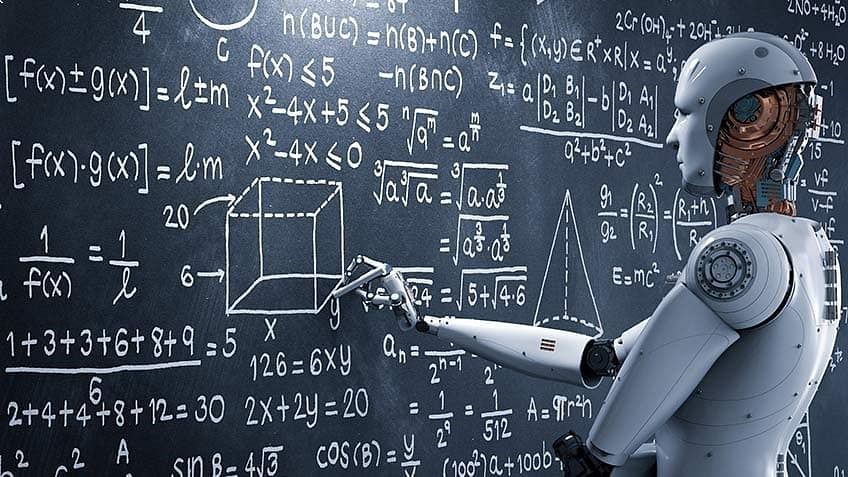

The resulting code doesn't look like anything a programmer would write. It's mainly a colossal collection of matrices of decimal numbers, or weights, each setting the strength, or importance, of a particular connection inside the network; together, these weights determine how the model relates one "token" to another. Tokens, as used in GPT, don't represent anything as useful as concepts, or even whole words. They're little strings of letters, numbers, punctuation marks and/or other characters.
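Those "little strings" come from a tokenizer. GPT models are generally described as using a variant of byte-pair encoding (BPE), which builds sub-word tokens by repeatedly fusing the most frequent adjacent pair of symbols in the training text. The sketch below is a simplified, character-level version of that idea; `bpe_merges` and the sample words are hypothetical, and this is not OpenAI's actual tokenizer.

```python
from collections import Counter


def bpe_merges(words, num_merges):
    """Toy byte-pair encoding: repeatedly fuse the most frequent adjacent pair.

    Each word starts out split into single characters. Returns the learned
    merges (in order) and the final segmentation of each word.
    """
    seqs = [list(w) for w in words]
    merges = []
    for _ in range(num_merges):
        pairs = Counter()
        for seq in seqs:
            pairs.update(zip(seq, seq[1:]))
        if not pairs:
            break
        # Ties go to the pair encountered first (Counter.most_common behavior).
        (a, b), _count = pairs.most_common(1)[0]
        merges.append(a + b)
        new_seqs = []
        for seq in seqs:
            out, i = [], 0
            while i < len(seq):
                if i + 1 < len(seq) and seq[i] == a and seq[i + 1] == b:
                    out.append(a + b)  # fuse the pair into one token
                    i += 2
                else:
                    out.append(seq[i])
                    i += 1
            new_seqs.append(out)
        seqs = new_seqs
    return merges, seqs


words = ["lower", "lowest", "newer", "newest"]
merges, segs = bpe_merges(words, 3)
print(merges)  # ['we', 'lo', 'lowe']
print(segs)    # [['lowe', 'r'], ['lowe', 's', 't'], ['n', 'e', 'we', 'r'], ['n', 'e', 'we', 's', 't']]
```

The resulting tokens, such as "lowe" and "we", are fragments chosen purely by frequency, not by meaning, which is the sense in which tokens are less useful than concepts or whole words.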

No human alive can look at these matrices and make any sense of them. The top minds at OpenAI have no idea what a given number in GPT-4's matrices means, or how to go into those tables and find the concept of xenocide, let alone tell GPT that it's naughty to kill people. You can't type in Asimov's Three Laws of Robotics and hard-code them like RoboCop's prime directives. The best you can do is ask nicely.[2]

References:

- [1] https://slate.com/technology/2023/05/artificial-intelligence-existential-threat-google-geoffrey-hinton.html

- [2] https://newatlas.com/technology/ai-danger-kill-everyone/

Cite this article:

Gokula Nandhini K (2023), Artificial Intelligence Won't Result in Human Extinction, AnaTechMaz, pp. 303