Scalability and High Availability

Kubernetes lets you scale applications up or down with demand, for example by changing the number of Pod replicas manually or with the Horizontal Pod Autoscaler, so that an application can handle high volumes of traffic. It also supports high availability by automatically restarting containers that crash or fail their liveness probes.
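To make demand-based scaling concrete, the sketch below applies the proportional rule described in the Kubernetes Horizontal Pod Autoscaler documentation: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The function name `desired_replicas` and its default bounds are hypothetical, chosen for illustration. The clamping step mirrors the HPA's `minReplicas` and `maxReplicas` settings, while behavior of the real controller such as its tolerance band and stabilization windows is deliberately omitted.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Scale the replica count in proportion to how far the metric is from its target.

    Hypothetical helper: it omits the HPA's tolerance and stabilization logic.
    """
    if current_replicas <= 0 or target_metric <= 0:
        raise ValueError("current_replicas and target_metric must be positive")
    # Proportional rule: ceil(current * observed / target).
    raw = math.ceil(current_replicas * (current_metric / target_metric))
    # Clamp to the configured bounds (analogous to minReplicas/maxReplicas).
    return max(min_replicas, min(max_replicas, raw))


print(desired_replicas(4, 90.0, 60.0))  # prints 6: load is 1.5x the target, so scale out
print(desired_replicas(4, 30.0, 60.0))  # prints 2: load is half the target, so scale in
```

Because the rule is proportional, a single calculation can jump directly to the needed size instead of adding one replica at a time; the bounds keep a metric spike from requesting unbounded capacity.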

Most providers of real-time communications target service levels of 99.9% to 99.999% availability. The higher the degree of high availability (HA) you require, the more sophisticated the measures you must take across the full lifecycle of the application.
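The difference between these targets is easier to appreciate as a downtime budget. The minimal sketch below converts an availability percentage into the downtime it permits per year, assuming a 365-day year and availability measured over the whole year. At 99.9% the budget is about 8.76 hours per year; at 99.999% it shrinks to about 5.26 minutes. The helper name `allowed_downtime_minutes` is hypothetical.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes; ignores leap years


def allowed_downtime_minutes(availability_percent: float) -> float:
    """Maximum downtime per year permitted by an availability target."""
    unavailable_fraction = 1 - availability_percent / 100
    return MINUTES_PER_YEAR * unavailable_fraction


for target in (99.9, 99.99, 99.999):
    print(f"{target}% -> {allowed_downtime_minutes(target):.1f} minutes/year")
```

Each additional "nine" cuts the budget by a factor of ten, which is why the measures needed to meet it grow more sophisticated.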

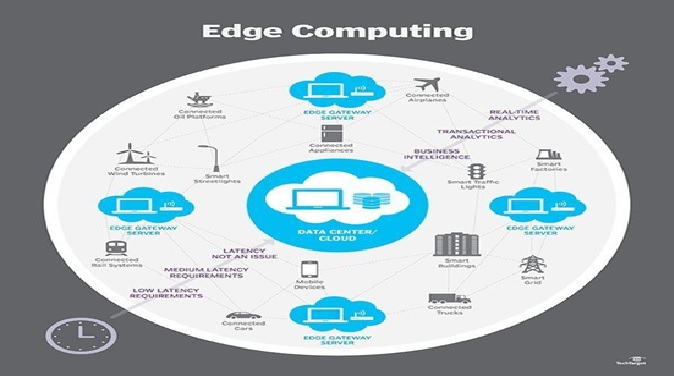

Figure 1: Scalability and high availability

As Figure 1 illustrates, scalability and high availability are two critical aspects of modern application deployment.

High availability is an approach that groups servers supporting applications or services so that they can be used reliably with minimal downtime. Scalability means adding capacity, and it can be achieved in two ways. Horizontal scalability, also known as scaling out, adds more instances, such as additional physical or virtual servers. Vertical scalability, also known as scaling up, replaces existing hardware with more powerful hardware that outperforms it, can run more services, or both.
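Grouping servers improves availability because the group fails only when every member is down at the same time. Under the simplifying assumptions that replicas fail independently and that failover is instantaneous, a group of n replicas that each have availability a has availability 1 - (1 - a)^n. The sketch below evaluates that formula; `redundant_availability` is a hypothetical helper, and real systems rarely satisfy the independence assumption, since shared power, network, or software faults can take down several replicas at once.

```python
def redundant_availability(single: float, replicas: int) -> float:
    """Availability of a group that stays up while at least one replica is up.

    Assumes replica failures are independent and failover is instantaneous.
    """
    if not 0.0 <= single <= 1.0 or replicas < 1:
        raise ValueError("single must be in [0, 1] and replicas must be >= 1")
    all_down = (1.0 - single) ** replicas  # probability that every replica is down at once
    return 1.0 - all_down


for n in (1, 2, 3):
    print(n, round(redundant_availability(0.99, n), 6))
```

Under these assumptions, two replicas at 99% each yield roughly 99.99%, and a third yields roughly 99.9999%, which is why scaling out horizontally also tends to raise availability.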

Here's an overview of these concepts:

- Scalability: Scalability refers to the ability of an application to handle an increasing amount of traffic or workload. As an application grows, it may require additional resources to maintain performance and availability. Scaling can be achieved through vertical scaling, which involves adding more resources to an existing server, or horizontal scaling, which involves adding more servers to a system. Horizontal scaling is typically achieved through the use of load balancers and distributed systems.

- High availability: High availability refers to the ability of an application to remain operational even in the event of failures. This is typically achieved through redundancy, which involves having multiple copies of an application running simultaneously. If one instance fails, traffic can be automatically routed to another instance to maintain availability. High availability can be achieved through the use of load balancers, failover mechanisms, and distributed systems.

- Load balancers: Load balancers distribute traffic across multiple servers to ensure that no single server becomes overloaded. They also provide failover mechanisms to ensure high availability in the event of server failures.

- Distributed systems: Distributed systems allow an application to be deployed across multiple servers or data centers, providing redundancy and scalability. They typically involve the use of message queues, distributed databases, and other technologies to ensure that data remains consistent across all instances.
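Load balancing and failover, as described in the list above, can be sketched together as a round-robin balancer that skips instances marked unhealthy. This is a minimal in-process illustration, not how any particular load balancer is implemented. The class `RoundRobinBalancer` and backend names such as `app-1` are hypothetical, and a real deployment would update health state from periodic health checks rather than from manual calls.

```python
class RoundRobinBalancer:
    """Round-robin load balancer that skips backends marked unhealthy (illustrative only)."""

    def __init__(self, backends: list[str]) -> None:
        if not backends:
            raise ValueError("at least one backend is required")
        self.backends = list(backends)
        self.healthy = set(self.backends)
        self._next = 0  # index of the backend to try first on the next request

    def mark_down(self, backend: str) -> None:
        """Stop routing traffic to a failed backend."""
        self.healthy.discard(backend)

    def mark_up(self, backend: str) -> None:
        """Resume routing traffic to a recovered backend."""
        if backend in self.backends:
            self.healthy.add(backend)

    def pick(self) -> str:
        """Return the next healthy backend in rotation (failover skips unhealthy ones)."""
        for offset in range(len(self.backends)):
            idx = (self._next + offset) % len(self.backends)
            candidate = self.backends[idx]
            if candidate in self.healthy:
                self._next = idx + 1  # continue the rotation after this backend
                return candidate
        raise RuntimeError("no healthy backends available")


lb = RoundRobinBalancer(["app-1", "app-2", "app-3"])
print([lb.pick() for _ in range(3)])  # ['app-1', 'app-2', 'app-3']
lb.mark_down("app-2")                 # simulate a failed instance
print([lb.pick() for _ in range(4)])  # ['app-1', 'app-3', 'app-1', 'app-3']
```

Round-robin is only one distribution policy; least-connections or weighted schemes are common alternatives when instances differ in capacity.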

Overall, scalability and high availability are essential components of modern application deployment, ensuring that applications can handle increasing amounts of traffic and remain operational in the event of failures. Both are commonly implemented with load balancers, distributed systems, and related technologies.

References:

- https://docs.aws.amazon.com/whitepapers/latest/real-time-communication-on-aws/high-availability-and-scalability-on-aws.html

- https://doc.owncloud.com/ocis/next/availability_scaling/availability_scaling.html

Cite this article:

Janani R (2023), Scalability and high availability, AnaTechMaz, pp. 71