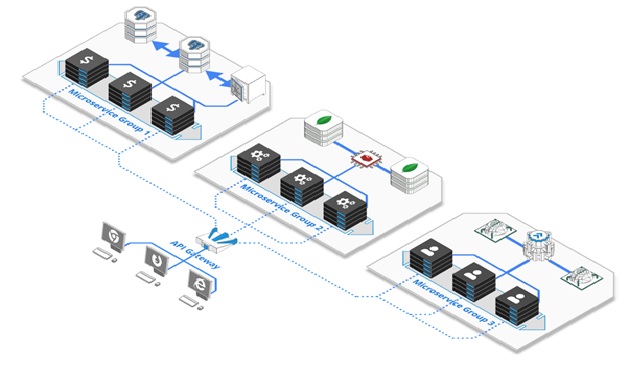

The Service Meshes in a Microservices Architecture

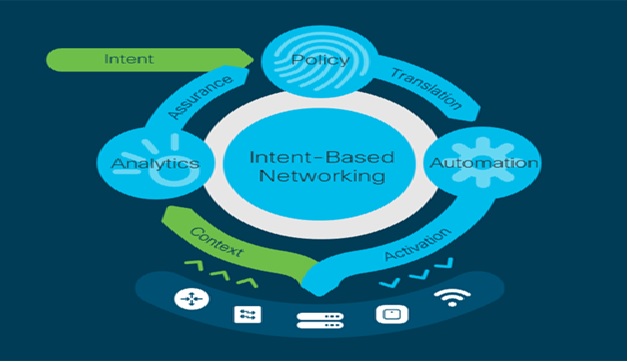

A service mesh is a configurable, low latency infrastructure layer designed to handle a high volume of network based interprocess communication [1] among application infrastructure services using application.

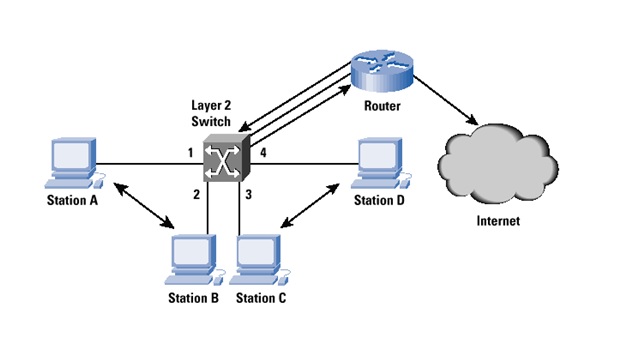

Figure 1. Microservices Architecture

programming interfaces (APIs). A service mesh ensures that communication among containerized and often ephemeral application infrastructure services is fast, reliable, and secure. The mesh provides critical figure1 shown above the paragraph capabilities including service discovery, load balancing, encryption, observability, traceability, authentication and authorization, and support for the circuit breaker pattern.
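Of the capabilities listed above, the circuit breaker pattern is the one most often misunderstood, so here is a minimal Python sketch of what the mesh does on a caller's behalf. The class name, its three states, and the `failure_threshold` and `reset_timeout` parameters are illustrative assumptions, not the configuration model of any specific mesh product. After a run of consecutive failures the breaker "opens" and fails fast; once a timeout has passed it lets a single trial request through.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker is open and calls are short-circuited."""


class CircuitBreaker:
    """Illustrative circuit breaker (hypothetical, not a mesh API).

    CLOSED -> OPEN after `failure_threshold` consecutive failures.
    OPEN -> HALF_OPEN once `reset_timeout` seconds have passed.
    HALF_OPEN: one trial call; success closes the circuit, failure reopens it.
    """

    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock  # injectable so the timeout can be tested without sleeping
        self.failures = 0
        self.state = "CLOSED"
        self.opened_at = 0.0

    def call(self, fn, *args, **kwargs):
        if self.state == "OPEN":
            if self.clock() - self.opened_at >= self.reset_timeout:
                self.state = "HALF_OPEN"  # allow one trial request through
            else:
                raise CircuitOpenError("upstream marked unhealthy; failing fast")
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            # A failed trial, or too many consecutive failures, opens the circuit.
            if self.state == "HALF_OPEN" or self.failures >= self.failure_threshold:
                self.state = "OPEN"
                self.opened_at = self.clock()
            raise
        # Any success resets the breaker.
        self.failures = 0
        self.state = "CLOSED"
        return result
```

In a mesh, this logic runs in the proxy rather than in application code, so every service gets the same protection without implementing it.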

The service mesh is usually implemented by providing a proxy instance, called a sidecar, for each service instance. Sidecars handle interservice communication, monitoring, and security-related concerns; indeed, they handle anything that can be abstracted away from the individual services [2]. This way, developers can focus on developing, supporting, and maintaining the application code in the services, while operations teams maintain the service mesh and run the app.
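To make this division of labor concrete, the sketch below models a sidecar as a Python wrapper around a service's request handler. Everything here is hypothetical (`Sidecar`, `make_flaky_service`, and the dict-shaped requests and responses); real sidecars are separate network proxies, not in-process wrappers. The point is only that retries and metrics live in the sidecar, while the service function contains nothing but its own logic.

```python
from collections import Counter


class Sidecar:
    """Hypothetical sidecar placed in front of one service instance.

    It owns cross-cutting concerns (here: retries and request metrics),
    so the wrapped service only has to contain business logic.
    """

    def __init__(self, service, max_retries=2):
        self.service = service          # the application's request handler
        self.max_retries = max_retries  # extra attempts after the first one
        self.metrics = Counter()        # simple observability counters

    def handle(self, request):
        for _ in range(self.max_retries + 1):
            self.metrics["attempts"] += 1
            try:
                response = self.service(request)
            except ConnectionError:
                continue  # treat as a transient network failure and retry
            self.metrics["successes"] += 1
            return response
        self.metrics["failures"] += 1
        return {"status": 503, "body": "upstream unavailable"}


def make_flaky_service(failures_before_success):
    """Hypothetical service that fails a fixed number of times, then succeeds."""
    state = {"calls": 0}

    def service(request):
        state["calls"] += 1
        if state["calls"] <= failures_before_success:
            raise ConnectionError("transient network error")
        return {"status": 200, "body": f"hello, {request['user']}"}

    return service


sidecar = Sidecar(make_flaky_service(1), max_retries=2)
print(sidecar.handle({"user": "alice"}))  # succeeds on the second attempt
print(dict(sidecar.metrics))
```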

A service mesh doesn’t introduce new functionality to an app’s runtime environment: apps in any architecture have always needed rules that specify how requests get from point A to point B.

What’s different about a service mesh is that it takes the logic governing service-to-service communication out of individual services and abstracts it into a layer of infrastructure.
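As a sketch of moving such rules out of the services, the following Python example keeps service discovery and round-robin load balancing in a shared registry, so a caller asks for `payments` by name instead of hard-coding an address. `ServiceRegistry`, the service name, and the addresses are hypothetical; in an actual mesh, the control plane typically distributes this information to the sidecars.

```python
import itertools


class ServiceRegistry:
    """Hypothetical registry mapping a service name to its live instances.

    In a real mesh this role is played by the control plane; here it is a dict.
    """

    def __init__(self):
        self._instances = {}
        self._cycles = {}

    def register(self, service, address):
        self._instances.setdefault(service, []).append(address)
        # Rebuild the round-robin iterator whenever membership changes.
        self._cycles[service] = itertools.cycle(list(self._instances[service]))

    def resolve(self, service):
        """Service discovery plus round-robin load balancing."""
        if service not in self._cycles:
            raise LookupError(f"no instances registered for {service!r}")
        return next(self._cycles[service])


registry = ServiceRegistry()
registry.register("payments", "10.0.0.5:8080")
registry.register("payments", "10.0.0.6:8080")
# Alternates between the two instances: .5, .6, .5
print([registry.resolve("payments") for _ in range(3)])
```

Because callers only know the service name, instances can be added or removed without touching any calling service's code.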

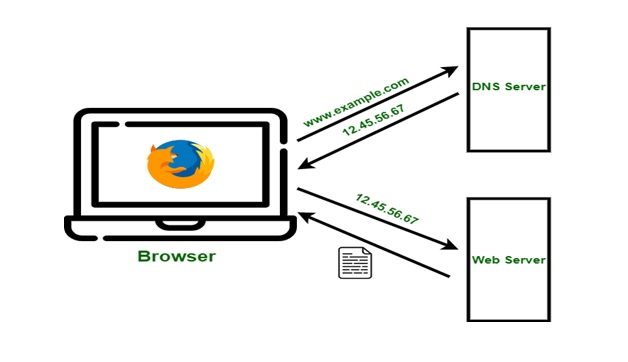

A sidecar is a proxy, and proxies are already familiar from everyday browsing. If you are reading this page on a work network, your request probably took this path:

- As your request for this page went out, it was first received by your company’s web proxy.

- After passing the proxy’s security measures, it was sent to the server that hosts this page.

- Next, the page was returned to the proxy and checked again against its security measures.

- Finally, the proxy sent the page to you.
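The four steps above can be sketched in Python as a single function that checks traffic in both directions. The blocklists, the `fetch_from_origin` stand-in, and the dict-shaped messages are hypothetical; a sidecar proxy applies the same check-forward-check pattern to the traffic of the one service it sits beside.

```python
# Hypothetical policies; a real proxy would load these from its configuration.
BLOCKED_HOSTS = {"malware.example"}                    # outbound check
BLOCKED_CONTENT_TYPES = {"application/x-msdownload"}  # inbound check


def fetch_from_origin(request):
    """Hypothetical stand-in for the server that hosts the page."""
    return {"status": 200, "content_type": "text/html", "body": "<h1>Service meshes</h1>"}


def proxy(request, upstream=fetch_from_origin):
    # Step 1: the proxy receives the outgoing request and checks it.
    if request["host"] in BLOCKED_HOSTS:
        return {"status": 403, "content_type": "text/plain", "body": "blocked by proxy"}
    # Step 2: the request is forwarded to the server hosting the page.
    response = upstream(request)
    # Step 3: the response comes back to the proxy and is checked again.
    if response["content_type"] in BLOCKED_CONTENT_TYPES:
        return {"status": 403, "content_type": "text/plain", "body": "response blocked"}
    # Step 4: the proxy hands the response to the client.
    return response


print(proxy({"host": "docs.example", "path": "/service-mesh"})["status"])  # 200
```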

Companies are increasingly adopting microservices, containers, and Kubernetes, driven by the need to modernize and to increase developer productivity, application agility, and scalability [3]. Many organizations are also moving to cloud computing and adopting a distributed microservice architecture for both new and existing applications and services.

Multi-tenancy

The multi-tenancy deployment pattern isolates groups of microservices from one another when each group is used exclusively by one tenant. A typical use case for multi-tenancy is to isolate the services of two different departments within an organization, or to isolate entire organizations from each other. The goal is that each tenant has its own dedicated services. Those services either can't access the services of other tenants at all, or can access them only when authorized.

Namespace tenancy

The namespace tenancy form provides each tenant with a dedicated namespace within a cluster. Because each cluster can support multiple tenants, namespace tenancy maximizes infrastructure sharing.

To restrict communication between the services of different tenants, expose only a subset of the services outside the namespace (using a sidecar configuration) and use service mesh authorization policies to control access to the exposed services.
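The namespace tenancy rules above can be modeled as a small access check. In this Python sketch, `exported` stands in for the subset of services a namespace exposes (the sidecar configuration) and `allowed` stands in for the authorization policies. The names, the tuple formats, and the assumption that calls within a single namespace are always permitted are illustrative choices, not the API of any real mesh.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Service:
    name: str
    namespace: str  # each tenant owns one namespace


@dataclass
class MeshPolicy:
    """Hypothetical model of namespace tenancy rules.

    exported: {(namespace, service name)} visible outside their namespace
    allowed:  {(source namespace, target namespace, service name)} permitted
              by authorization policies
    """
    exported: set = field(default_factory=set)
    allowed: set = field(default_factory=set)

    def can_call(self, src: Service, dst: Service) -> bool:
        if src.namespace == dst.namespace:
            return True  # assumption: traffic inside one tenant's namespace is allowed
        key = (dst.namespace, dst.name)
        if key not in self.exported:
            return False  # not exposed outside its namespace
        return (src.namespace, *key) in self.allowed


policy = MeshPolicy(
    exported={("tenant-b", "catalog")},
    allowed={("tenant-a", "tenant-b", "catalog")},
)
a_web = Service("web", "tenant-a")
b_catalog = Service("catalog", "tenant-b")
b_billing = Service("billing", "tenant-b")
print(policy.can_call(a_web, b_catalog))  # True: exported and authorized
print(policy.can_call(a_web, b_billing))  # False: not exported
```

A call to an unexported service is refused before any policy is consulted, which mirrors the two layers of control described in the text.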

Cluster tenancy

The cluster tenancy form exclusively dedicates the entire cluster, including all namespaces, to a tenant. A tenant can also have more than one cluster. Each cluster has its own mesh.

References:

- https://www.nginx.com/blog/what-is-a-service-mesh/

- https://www.redhat.com/en/topics/microservices/what-is-a-service-mesh

- https://cloud.google.com/architecture/service-meshes-in-microservices-architecture

Cite this article:

Nandhinidwaraka. S (2021), The Service Meshes in a Microservices Architecture, AnaTechMaz, pp. 55