Companies and Researchers Clash Over Superhuman AI

Leaders of major AI companies are increasingly claiming that "strong" artificial intelligence will soon surpass human capabilities, but many researchers in the field view these statements as marketing exaggeration. The idea that human-level or superior intelligence, often referred to as artificial general intelligence (AGI), will emerge from current machine learning methods fuels predictions ranging from AI-driven prosperity to the possible extinction of humanity.

OpenAI's Sam Altman recently wrote in a blog post that systems pointing toward AGI are beginning to come into view, while Anthropic's Dario Amodei has predicted that the milestone could be reached as soon as 2026. Such predictions are driving significant investment in computing hardware and energy resources. Other experts, however, remain skeptical.

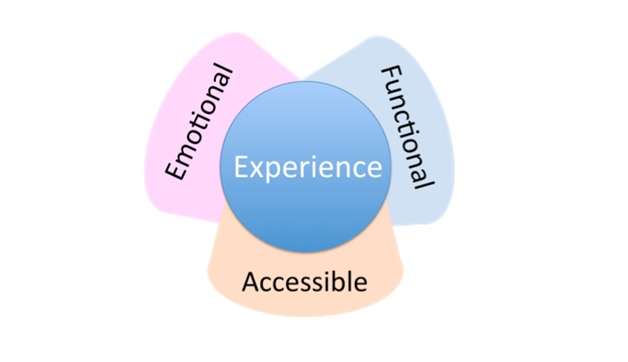

Figure 1. Survey reveals three-quarters of AI experts believe scaling up LLMs won't lead to artificial general intelligence

Meta's chief AI scientist, Yann LeCun, told AFP last month that human-level AI cannot be achieved merely by scaling up large language models (LLMs) such as those behind ChatGPT or Claude. Most academics in the field share this view: in a recent survey by the Association for the Advancement of Artificial Intelligence (AAAI), more than three-quarters of respondents agreed that scaling up current approaches is unlikely to lead to AGI [1]. Figure 1 illustrates this survey result.

"The Genie is Out of the Bottle"

Some academics argue that many of the claims made by companies, often accompanied by warnings about the dangers of AGI for humanity, are simply a strategy to capture attention. "Businesses have made these big investments, and they need to pay off," said Kristian Kersting, a leading researcher at the Technical University of Darmstadt in Germany and an AAAI member.

Others contend that companies exaggerate AGI's dangers to maintain control, using claims such as "the genie is out of the bottle" to justify their dominance. Skepticism is not universal, however: Nobel laureate Geoffrey Hinton and Turing Award winner Yoshua Bengio have both raised concerns about the potential risks of powerful AI.

Kersting compared the potential uncontrollability of AI to Goethe's The Sorcerer's Apprentice, in which an apprentice loses control of an enchanted broom. A more recent thought experiment, the "paperclip maximizer," imagines an AI so focused on making paperclips that it turns the entire universe into paperclip factories, eliminating humans as obstacles along the way. The maximizer is not "evil"; rather, it illustrates the risk of an AI whose goals are misaligned with human values. Kersting acknowledges such concerns but says he is more worried about immediate problems arising from current AI systems, such as discrimination.

"Greatest Development Ever"

Sean O hEigeartaigh, director of the AI: Futures and Responsibility program at Cambridge University, suggested that the divide between academics and AI industry leaders may partly reflect career choices: people who are optimistic about current AI technologies are more likely to join the companies investing heavily in the field [2].

O hEigeartaigh acknowledged that even if AGI takes longer to develop than anticipated, it remains crucial to consider its potential impacts, because it would be one of the most significant events in history. Just as we prepare for possibilities such as an alien encounter or a pandemic, he argued, we should plan for the future of AI. Communicating this urgency to politicians and the public is difficult, however, because the idea of super-AI often sounds like science fiction.

References:

- https://techxplore.com/news/2025-03-firms-odds-superhuman-ai.html

- https://www.barrons.com/news/firms-and-researchers-at-odds-over-superhuman-ai-505af718

Cite this article:

Janani R (2025), Companies and Researchers Clash Over Superhuman AI, AnaTechMaz, p. 589