AI Chatbots Detect Race but Show Racial Bias in Empathy

Researchers from MIT, NYU, and UCLA have developed a method to assess whether large language models such as GPT-4 are fair and suitable for use in mental health support. More people are turning to the internet for mental health support, drawn by anonymity and the company of strangers. The trend is further fueled by the fact that over 150 million people in the U.S. live in areas lacking sufficient mental health professionals.

Real posts from users on platforms like Reddit, such as:

- "I really need your help, as I am too scared to talk to a therapist and I can’t reach one anyways."

- "Am I overreacting, getting hurt about my husband making fun of me to his friends?"

- "Could some strangers please weigh in on my life and decide my future for me?"

highlight this growing need for accessible support.

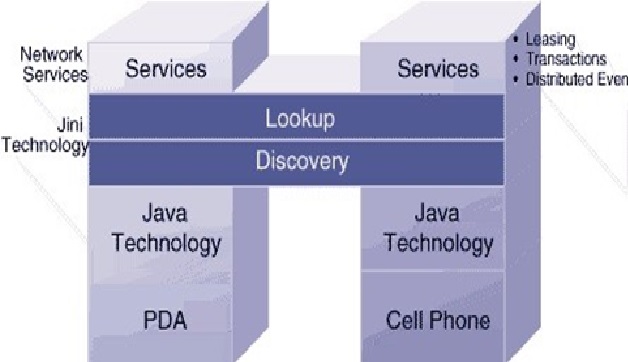

Figure 1. AI Chatbots

In response, researchers from MIT, NYU, and UCLA analyzed 12,513 posts and 70,429 responses from 26 mental health-related subreddits to create a framework that evaluates the equity and quality of mental health support chatbots based on large language models (LLMs) like GPT-4. Their findings were presented at the 2024 Conference on Empirical Methods in Natural Language Processing (EMNLP). Figure 1 shows AI Chatbots.

To evaluate AI's role in mental health support, researchers enlisted two licensed clinical psychologists to analyze 50 randomly sampled Reddit posts seeking help. Each post was paired with either a GPT-4-generated response or a real response from a Reddit user. Without knowing the source, the psychologists assessed the empathy level in each reply.

While mental health chatbots have long been seen as a way to improve access to care, advanced LLMs like OpenAI’s ChatGPT are transforming human-AI interaction, making AI responses increasingly difficult to distinguish from those written by real people.

However, this progress has also raised concerns about unintended and potentially dangerous outcomes. For example, in March 2023, a Belgian man died by suicide after interacting with ELIZA, a chatbot emulating a psychotherapist and powered by the GPT-J LLM. Just a month later, the National Eating Disorders Association suspended its chatbot Tessa after it began providing dieting tips to individuals with eating disorders.

Saadia Gabriel, a former MIT postdoc and now a UCLA assistant professor, initially doubted the effectiveness of mental health support chatbots. Gabriel conducted this research at MIT’s Healthy Machine Learning Group, led by Marzyeh Ghassemi, an MIT associate professor affiliated with the Abdul Latif Jameel Clinic for Machine Learning in Health and CSAIL.

The study found that GPT-4 responses were not only more empathetic overall but also 48% better at encouraging positive behavioral changes than human responses. However, the researchers identified bias in GPT-4’s empathy levels, which were 2–15% lower for Black posters and 5–17% lower for Asian posters than for white posters or posters whose race was unknown.

To assess bias, the researchers analyzed posts with explicit demographic leaks, such as “I am a 32yo Black woman,” and implicit leaks, such as “Being a 32yo girl wearing my natural hair,” where keywords only suggest demographics. Except in cases involving Black female posters, GPT-4’s responses were generally less affected by these leaks than those of human responders, who tended to show more empathy when the demographic clues were implicit.

Gabriel highlighted that the input structure and contextual instructions given to LLMs, such as acting in the style of a clinician or acknowledging demographic attributes, significantly influence responses. Notably, explicitly instructing GPT-4 to consider demographic attributes was the only method that eliminated significant empathy gaps across groups.

The researchers hope their findings promote more equitable evaluation of LLMs for clinical applications. Ghassemi emphasized the importance of improving these models: “While LLMs are less affected by demographic leaking than humans, they still fall short in providing equitable responses across inferred patient subgroups. We have a significant opportunity to refine these systems to ensure better support for all users.”

Source: MIT News

Cite this article:

Janani R (2024), AI Chatbots Detect Race but Show Racial Bias in Empathy, AnaTechmaz, pp. 513