Here's Why Artificial Intelligence Such as ChatGPT Likely Won't Achieve Human-Like Understanding

If you ask ChatGPT whether it thinks like a human, the chatbot will tell you that it doesn't. "I can process and comprehend language to a certain degree," it might explain, while stressing that its understanding comes from analysing patterns in data rather than from human-like comprehension.

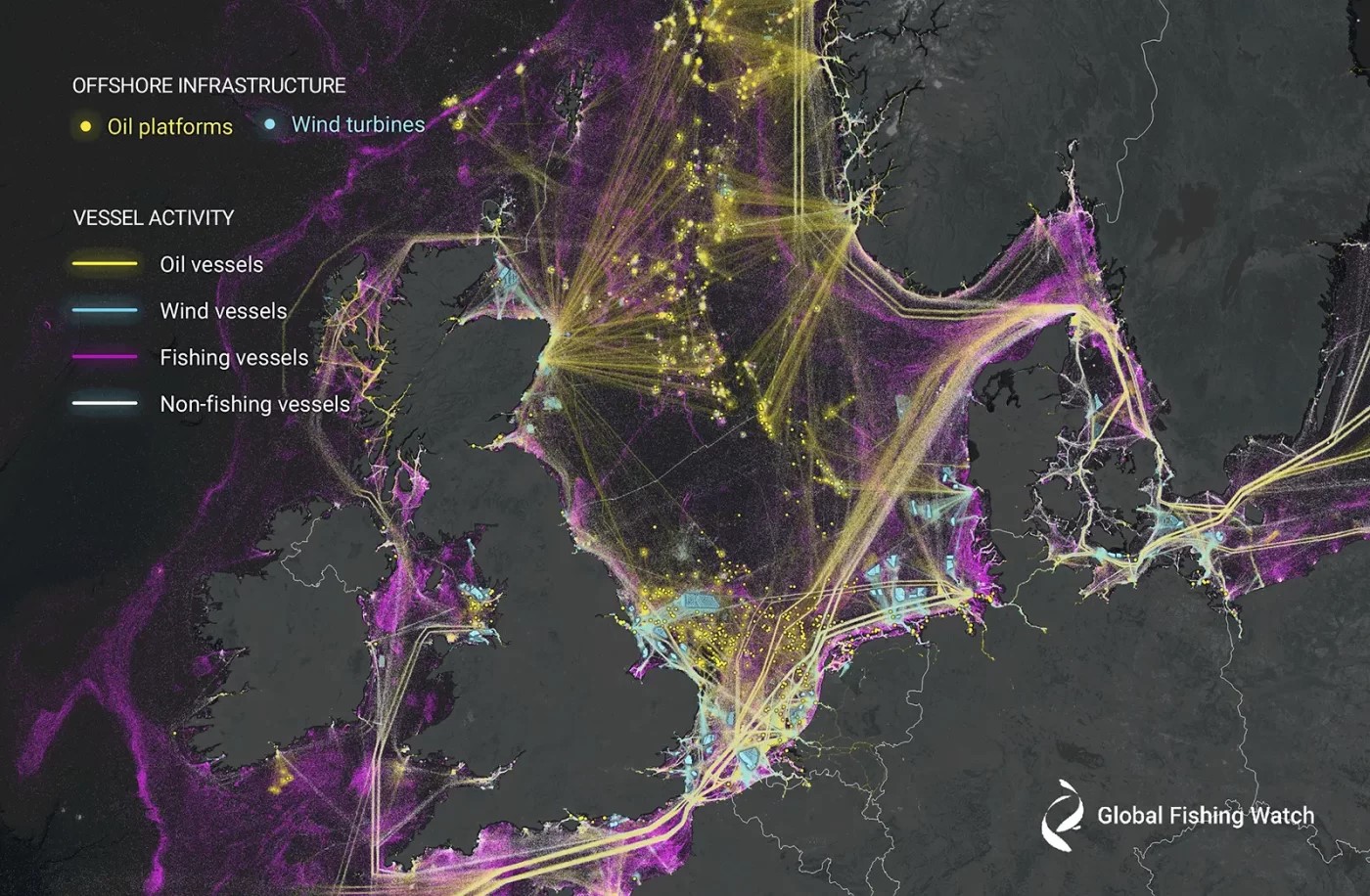

Figure 1. Limitations of Current Computer Brains: Difficulty in Applying Knowledge to Novel Situations.

Figure 1 illustrates a key limitation of today's AI systems: difficulty applying knowledge to novel situations. Even so, conversing with ChatGPT often feels like interacting with a human, albeit a highly skilled and knowledgeable one. ChatGPT readily answers questions about mathematics or history in many languages and writes stories and computer code on request, and similar "generative" AI models can create artwork and videos from scratch.[1]

"Many of these systems appear quite intelligent," said Melanie Mitchell, a computer scientist at the Santa Fe Institute in New Mexico, during her presentation at the annual meeting of the American Association for the Advancement of Science (AAAS) in Denver, Colo., in February.

But appearances can mislead. As Mitchell and other experts argue, ChatGPT's own answer gets to the heart of the issue: even the most advanced AI systems today lack genuine, human-like understanding, and that gap limits what they can do.

Historical Concerns about AI

Concerns that AI might outstrip human abilities date back at least to 1997, when IBM's Deep Blue defeated world chess champion Garry Kasparov. Even so, AI long lagged behind humans at most tasks. About a decade ago, deep learning transformed neural networks, enabling computers to excel at tasks such as image recognition and speech transcription. However, deep-learning models could be easily fooled and struggled to adapt to new tasks. Now a new era of generative AI is emerging that goes beyond the achievements of that deep-learning revolution.

The Era of Generative AI

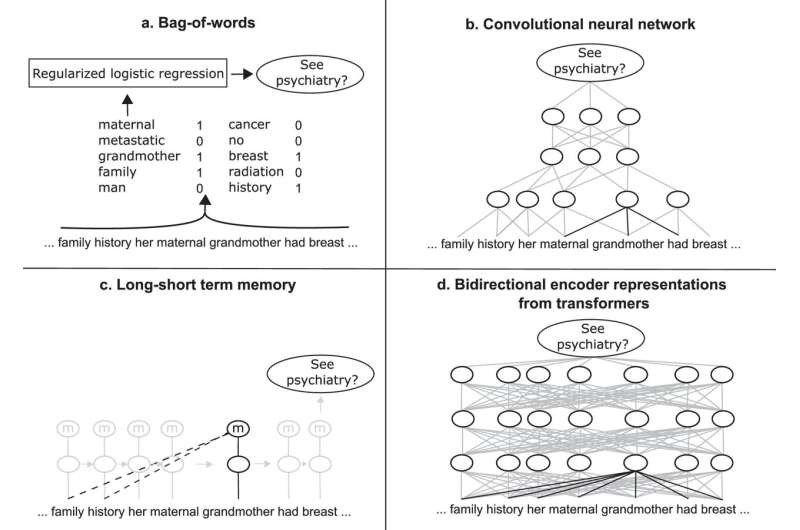

Generative AI systems can create text, images, and other content on demand. Among them are large language models (LLMs) such as ChatGPT, which are trained on vast amounts of text, including internet content and scanned print books. Through this training they are exposed to an enormous range of words, phrases, symbols, and mathematical equations. This enables them to perform tasks ranging from generating ideas to writing poetry, abilities once thought to require human creativity.

LLMs learn patterns in how language is structured, which makes them adept at predicting which word should come next in a sequence. This enables them to generate coherent sentences, answer questions, mimic the writing styles of different authors, and solve riddles. Some researchers argue that these capabilities suggest LLMs understand their tasks and could potentially reason or even develop consciousness. Experts such as Mitchell, however, maintain that LLMs do not truly comprehend the world, at least not in the way humans do.[2]
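To make "predicting which word comes next" concrete, here is a deliberately tiny sketch. It uses a bigram counter, a far simpler technique than the neural networks behind ChatGPT, so it illustrates only the general idea of learning word patterns from text. The corpus and the function names `train_bigrams` and `predict_next` are hypothetical, chosen for illustration.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count how often each word is followed by each other word (a bigram model)."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the word seen most often after `word`, or None if `word` never appeared."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Hypothetical toy corpus; real LLMs are trained on vastly more text.
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigrams(corpus)

print(predict_next(model, "the"))    # cat (follows "the" twice, every other word once)
print(predict_next(model, "sat"))    # on
print(predict_next(model, "zebra"))  # None: this word never occurred in the corpus
```

The toy model can only repeat patterns it has counted; it has no notion of what a cat or a mat is. Modern LLMs capture far richer patterns than this, which is exactly why researchers disagree about whether they understand anything.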

Limited Scope AI

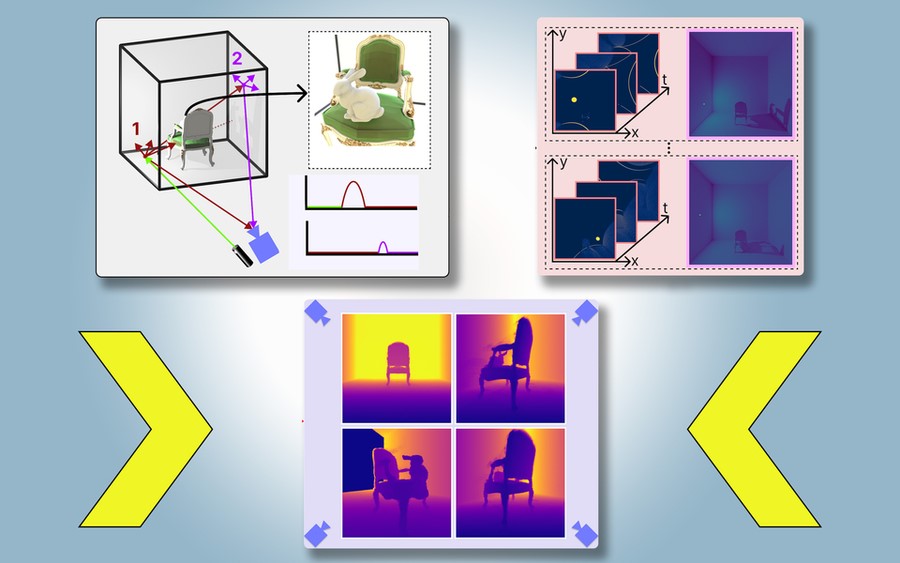

Mitchell and Martha Lewis of the University of Bristol highlighted a significant limitation of LLMs in a recent study posted on arXiv.org: LLMs still lag behind humans in adapting their skills to new situations. The researchers demonstrated this with letter-string problems, in which a solver sees how one string of letters changes (for example, abc becomes abd) and must apply the same change to a new string (ijk becomes ?). LLMs do well on such problems when they use the familiar English alphabet, but they fall short of humans when the problems are altered.

In further experiments, Mitchell and Lewis posed the same kinds of problems using alternative alphabets, or with symbols in place of letters. Humans adapted readily, but LLMs typically failed to transfer concepts learned with one alphabet to another. This inability to generalize knowledge to new situations leads Mitchell to doubt whether LLMs truly understand the world as humans do.
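The sketch below illustrates what "transferring a concept to a new alphabet" means for a problem such as abc → abd, ijk → ?. It is not the researchers' test material or code, and it does not model how an LLM works internally. It only contrasts a rule stated abstractly ("replace the last symbol with its successor in whatever alphabet is in use") with a brittle rule tied to the English alphabet. The permuted alphabet, the symbol alphabet, and all function names are hypothetical.

```python
import string

def successor(letter, alphabet):
    """Return the symbol that follows `letter` in `alphabet`."""
    i = alphabet.index(letter)
    if i + 1 >= len(alphabet):
        raise ValueError(f"{letter!r} has no successor in this alphabet")
    return alphabet[i + 1]

def apply_rule(s, alphabet):
    """The concept behind 'abc -> abd', stated abstractly:
    replace the last symbol with its successor in the given alphabet."""
    return s[:-1] + successor(s[-1], alphabet)

def apply_rule_english_only(s):
    """A brittle version tied to the English alphabet via character codes."""
    return s[:-1] + chr(ord(s[-1]) + 1)

english = string.ascii_lowercase
# Hypothetical permuted alphabet in which "l" and "x" trade places.
permuted = english.replace("l", "#").replace("x", "l").replace("#", "x")
# Hypothetical alphabet of symbols standing in for letters.
symbols = "?!*@+=%#"

print(apply_rule("ijk", english))      # ijl
print(apply_rule("ijk", permuted))     # ijx, because "x" follows "k" in this alphabet
print(apply_rule_english_only("ijk"))  # ijl, which is wrong for the permuted alphabet
print(apply_rule("?!*", symbols))      # ?!@
```

Only the abstract rule gives the right answer in every alphabet, which loosely mirrors the kind of flexibility Mitchell associates with human understanding.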

The Significance of Comprehension

Speaking at the AAAS meeting, Mitchell argued that the core of understanding is the ability to apply knowledge reliably and flexibly in new situations. Human understanding, she explained, relies on mental concepts that let us grasp categories and cause-and-effect relationships and predict outcomes, even in unfamiliar scenarios. Mitchell acknowledges that AI could one day reach a level of understanding comparable to ours, but suggests that machine understanding may differ fundamentally from the human kind. Unlike humans, who form abstract concepts before they learn language, LLMs start with language, and she doubts they will be the route to such understanding. Conversing with ChatGPT might resemble talking with a person, but the underlying computation remains distinct from human cognition.

References:

- https://www.snexplores.org/article/ai-limits-chatbot-artificial-intelligence

- https://www.abc.net.au/religion/why-artificial-intelligence-cannot-achieve-understanding/102288234

Cite this article:

Gokila G (2024), Here's Why Artificial Intelligence Such as ChatGPT Likely Won't Achieve Human-Like Understanding, AnaTechMaz, pp. 400