Why We Must Open the Black Box of AI

Black box AI is any artificial intelligence system whose inputs and operations aren't visible to the user or another interested party. A black box, in a general sense, is an impenetrable system.

Black box AI models arrive at conclusions or decisions without explaining how they were reached. In black box models, deep networks of artificial neurons disperse data and decision-making across tens of thousands of neurons, resulting in a complexity that may be just as difficult to understand as that of the human brain. In short, the internal mechanisms and contributing factors of black box AI remain unknown.[1]

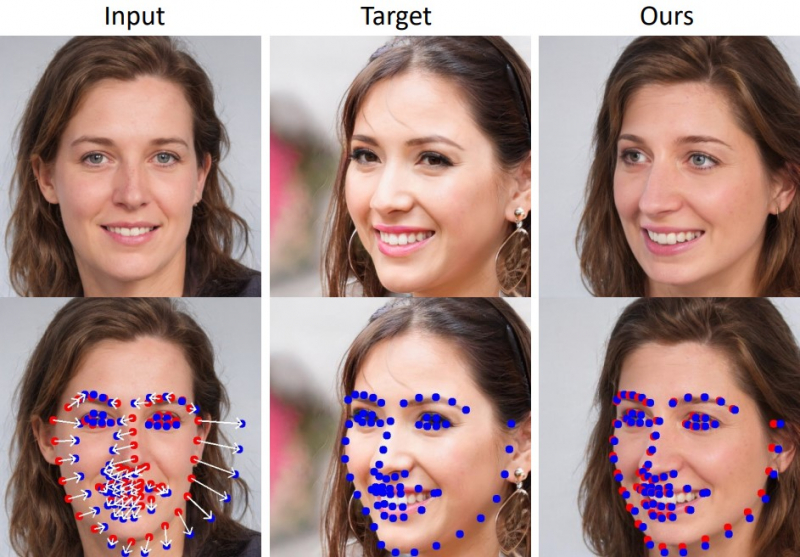

Figure 1. Black Box of AI

Figure 1 illustrates the black box of AI. Understanding the inner workings of artificial intelligence (AI) systems is becoming increasingly important as AI becomes more prevalent in our daily lives. Here are a few reasons why we need to see inside AI's black box:

- Transparency and Accountability

- Bias Detection and Mitigation

- Trust and User Acceptance

- Debugging and Improvement

- Education and Advancement

Any of the three components of a machine-learning system (the algorithm, the training data and the model) can be hidden, or put in a black box. The algorithm is often publicly known, which makes putting it in a black box less effective. So, to protect their intellectual property, AI developers often put the model in a black box instead. Another approach software developers take is to obscure the data used to train the model; in other words, they put the training data in a black box.
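To make the three components concrete, here is a minimal Python sketch. Everything in it is an illustrative assumption rather than something taken from the cited sources: the toy data, the function names `fit_line` and `make_black_box`, and the choice of least-squares line fitting as the algorithm. It shows how a developer could publish the algorithm while exposing only a prediction function, keeping the training data and the learned model out of the caller's view.

```python
from typing import Callable, List, Tuple

# Training data (hypothetical): (x, y) pairs that follow y = 2x + 1.
TRAINING_DATA: List[Tuple[float, float]] = [
    (0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0),
]


def fit_line(data: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Algorithm: ordinary least squares for a single input feature.

    Returns the model, which here is just (slope, intercept).
    """
    n = len(data)
    mean_x = sum(x for x, _ in data) / n
    mean_y = sum(y for _, y in data) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in data)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in data)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def make_black_box(data: List[Tuple[float, float]]) -> Callable[[float], float]:
    """Train a model and hand back only a prediction function.

    The caller is given neither the data nor the learned parameters
    as part of the returned interface.
    """
    slope, intercept = fit_line(data)

    def predict(x: float) -> float:
        return slope * x + intercept

    return predict


black_box = make_black_box(TRAINING_DATA)
print(black_box(4.0))  # the caller sees an answer, not how it was produced
```

In this sketch the algorithm (`fit_line`) can be public, yet a user who only receives `black_box` still has no direct view of the model or the data behind it.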

The opposite of a black box is sometimes referred to as a glass box. An AI glass box is a system whose algorithms, training data and model are all available for anyone to see. Even so, researchers sometimes characterize aspects of glass box systems as black boxes.[2]
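As a rough illustration of what glass box behavior can look like, the sketch below uses a small linear scoring model that reports how much each input contributed to its result. The feature names, weights, bias and the function `explain_score` are all hypothetical and chosen only for illustration; real systems are usually far more complex, and a linear model is just one of the simplest cases where this kind of explanation is straightforward.

```python
from typing import Dict, Tuple

# Hypothetical, openly published model parameters (not from the article).
WEIGHTS: Dict[str, float] = {
    "income": 0.5,
    "debt": -0.25,
    "years_employed": 1.0,
}
BIAS: float = -2.0


def explain_score(features: Dict[str, float]) -> Tuple[float, Dict[str, float]]:
    """Return the score together with each feature's contribution to it."""
    contributions = {
        name: WEIGHTS[name] * value for name, value in features.items()
    }
    score = BIAS + sum(contributions.values())
    return score, contributions


score, reasons = explain_score(
    {"income": 4.0, "debt": 8.0, "years_employed": 2.0}
)
print(score)
for name, amount in reasons.items():
    print(f"{name}: {amount:+.2f}")
```

Because the weights are visible and the contributions are returned alongside the score, a reviewer can check which inputs pushed the result up or down. This is the kind of inspection a black box does not allow.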

One of the challenges of using artificial intelligence solutions in the enterprise is that the technology operates in what is commonly referred to as a black box. Often, AI applications employ neural networks that produce results using algorithms so complex that only computers can make sense of them. In other instances, AI vendors will not reveal how their AI works. In either case, when conventional AI produces a decision, human end users don’t know how it arrived at its conclusions.

This black box can pose a significant obstacle. Even though a computer processes the information and makes a recommendation, the computer does not have the final say. That responsibility falls on a human decision maker, who is held accountable for any negative consequences. In many current use cases of AI, this isn’t a major concern, as the potential fallout from a “wrong” decision is likely very low.[3]

However, it's important to note that there are challenges in opening up the black box of AI, including concerns about intellectual property, trade secrets, and potential security risks. Striking a balance between transparency and protecting sensitive information is an ongoing discussion within the AI community.

References:

[1] https://www.techtarget.com/whatis/definition/black-box-AI

[2] https://www.scientificamerican.com/article/why-we-need-to-see-inside-ais-black-box

[3] https://www.forbes.com/sites/forbestechcouncil/2019/02/22/explainable-ai-why-we-need-to-open-the-black-box

Cite this article:

Gokula Nandhini K (2023), Why We Must Open the Black Box of AI, AnaTechMaz, pp.265