Does AI Need a Body to Achieve Human-Like Intelligence?

The first robot I remember is Rosie from The Jetsons, quickly followed by the sophisticated C-3PO and his loyal companion R2-D2 in The Empire Strikes Back. But the first AI I encountered without a physical form was Joshua, the computer in WarGames that nearly triggered a nuclear war—until it grasped the concept of mutually assured destruction and decided to play chess instead.

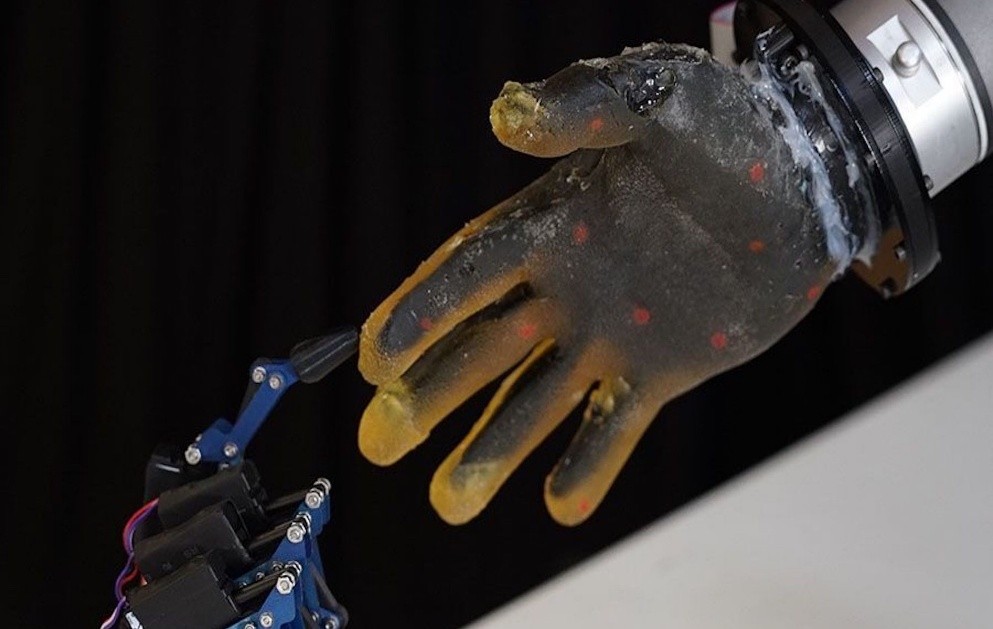

Figure 1. Does AI Require a Body to Reach Human-Level Intelligence?

I was seven, and it changed everything for me. Could a machine truly grasp ethics? Emotion? What it means to be human? I began to wonder: did artificial intelligence need a body to understand any of it? My fascination only grew as portrayals of non-human intelligence became more complex, from the android Bishop in Aliens and Data in Star Trek: The Next Generation to more recent figures like Samantha in Her and Ava in Ex Machina. Figure 1 poses the question this article explores: does AI require a body to reach human-level intelligence?

The Limits of Disembodied AI

Recent research is starting to reveal the shortcomings of today’s most advanced—yet fundamentally disembodied—AI systems. A new study from Apple looked at so-called “Large Reasoning Models” (LRMs), which are designed to generate intermediate reasoning steps before producing an answer. While these models outperform standard large language models (LLMs) on many tasks, they tend to break down when faced with more complex problems. Alarmingly, they don’t just plateau—they collapse, even when given ample computing resources.

Even more concerning is their inconsistency. These models often fail to reason in a stable, algorithmic way. Their “reasoning traces” (the step-by-step logic behind their answers) lack coherence. And as the complexity of a task increases past a certain point, the models seem to put in less reasoning effort, not more. The authors ultimately conclude that these systems don’t “think” in any way that resembles human reasoning.

“What we are building now are things that take in words and predict the next most likely word... That’s very different from what you and I do,” Nick Frosst, former Google researcher and co-founder of Cohere, told The New York Times.

Cognition Is More Than Just Computation

So how did we get here? For much of the 20th century, artificial intelligence was guided by a framework known as GOFAI, or “Good Old-Fashioned Artificial Intelligence.” It viewed cognition as a system of symbolic logic, where intelligence was treated like code: abstract symbols processed by machines. In this view, the mind didn’t need a body; thinking was seen as a purely computational act.

But cracks began to show when early AI-driven robots struggled with the unpredictability of the real world. This led researchers in psychology, neuroscience, and philosophy to rethink the foundations of intelligence. Inspired by studies of animals, plants, and other living systems, a new perspective emerged, one grounded in adaptation, learning, and environmental responsiveness. These forms of intelligence develop not through abstract reasoning, but through direct physical interaction with the world.

Even in humans, cognition is deeply embodied. The enteric nervous system (often called our “second brain”) regulates digestion using the same types of neurons and neurotransmitters found in the brain. Remarkably, octopus arms operate similarly, with individual limbs capable of sensing and reacting independently. These examples underscore a growing realization: true intelligence may be inseparable from the body that carries it.

Embodied Intelligence: A New Way of Thinking

To build truly intelligent machines, we may need to design smarter bodies alongside smarter minds—and for soft robotics pioneer Cecilia Laschi, “smarter” often means “softer.” After years of working with rigid humanoid robots in Japan, Laschi turned to soft-bodied designs, drawing inspiration from the octopus—an organism without a skeleton, whose limbs can operate with a kind of decentralized intelligence.

“If you have a human-like robot walking, you have to control each movement,” she explained in an interview with New Atlas. “If the terrain changes, you need to reprogram it slightly.”

“Our knee is compliant,” she said. “We adapt to uneven ground mechanically, without engaging the brain.”

This reflects the core idea of embodied intelligence—the notion that parts of cognition can be distributed throughout the body itself.

From an engineering standpoint, this approach offers major benefits. By shifting some of the burden of perception, control, and decision-making to the robot’s physical structure, designers can reduce the demands on its central processor. The result is a more adaptive, resilient machine—better suited to navigate the unpredictable complexities of the real world.

In other words, behavior emerges from physical interaction with the environment, not just lines of code. Intelligence, then, isn’t something you simply program—it’s something learned through lived experience.

This shifts the focus from faster processors and larger models to something more dynamic: interaction. That’s where soft robotics comes in. By using flexible materials like silicone and smart fabrics, soft robots gain bodies that are fluid, adaptive, and capable of learning in real time. A soft robotic arm, for example—like the limb of an octopus—can grasp, sense, and respond without needing to compute every movement step-by-step.

Flesh and Feedback: Can Materials Think for Themselves?

To achieve this kind of autonomy, engineers must move beyond traditional programming. Instead of scripting every possible action, the goal is to design materials and structures that can sense, react, and adapt on their own.

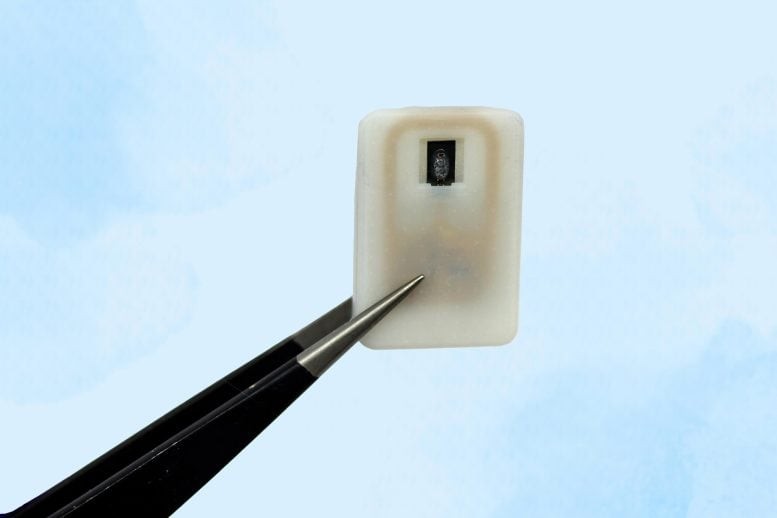

At the forefront is Ximin He, Associate Professor of Materials Science and Engineering at UCLA. Her lab develops soft materials—such as responsive gels and polymers—that don’t just react to stimuli but actively regulate their behavior using built-in feedback mechanisms.

“We’ve been working on creating more decision-making ability at the material level,” He told New Atlas. “Materials that change shape in response to a stimulus can also ‘decide’ how to modulate that stimulus based on how they deform, correcting or adjusting their next motion.”

Back in 2018, her team demonstrated a gel that could self-regulate its movement. Since then, they’ve expanded this concept to a wide range of soft materials, including liquid crystal elastomers that function effectively in open-air conditions.

The key to autonomous physical intelligence (API) lies in a concept called nonlinear time-lag feedback. Traditional robots operate on a loop: sensors gather data, a control system analyzes it, and then sends instructions to actuators. In contrast, Ximin He’s approach embeds this logic directly into the materials themselves.
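For contrast, the conventional loop described above can be sketched in a few lines of Python. This is only an illustrative toy, not code from any robot mentioned here: the function name `run_control_loop`, the one-dimensional actuator, the noise-free sensor, and the proportional `gain` are all assumptions chosen to keep the example small.

```python
def run_control_loop(target, position=0.0, gain=0.5, steps=50):
    """Drive a hypothetical 1-D actuator toward `target` using an
    explicit sense -> decide -> act cycle run by a central controller."""
    trajectory = [position]
    for _ in range(steps):
        reading = position                    # sense: idealized, noise-free sensor
        command = gain * (target - reading)   # decide: proportional control law
        position += command                   # act: actuator moves by the command
        trajectory.append(position)
    return trajectory


path = run_control_loop(target=1.0)
print(f"final position: {path[-1]:.6f}")
```

Every correction here passes through the central `decide` step. The approach described in this section aims to remove that step from software and let the material's own physics play the same role.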

“In robotics, you need sensing and actuation—but also decision-making between them,” He explains. “That’s what we’re embedding physically, using feedback loops.”

She draws a parallel to biology. In living systems, negative feedback mechanisms (like blood sugar regulation) stabilize behavior, while positive feedback amplifies it (as in hormone surges). Nonlinear feedback—which blends both—enables rhythmic, adaptive movement, like walking or swimming.

“A lot of natural motion, like walking or swimming, relies on periodic, steady movement,” He says. “With nonlinear, time-lagged feedback, we can design soft robots to move forward, backward, forward again, without needing external control at each step.”
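To see why a time lag matters, consider a deliberately simple toy model. It is not the model used by He's lab: the equation dx/dt = -gain * tanh(x(t - delay)), the parameter values, and the function names below are all assumptions made for illustration. The `tanh` term stands in for a saturating (nonlinear) negative feedback, and `delay_steps` sets how late that feedback arrives.

```python
import math


def simulate(delay_steps, gain=2.0, dt=0.01, steps=4000, x0=0.1):
    """Euler-integrate dx/dt = -gain * tanh(x(t - delay)).

    The time lag is delay_steps * dt. Before t = 0 the state is
    assumed to have been constant at x0.
    """
    xs = [x0]
    for i in range(steps):
        delayed = xs[max(0, i - delay_steps)]  # state as it was one lag ago
        xs.append(xs[i] + dt * (-gain * math.tanh(delayed)))
    return xs


def zero_crossings(xs):
    """Count how many times the trajectory changes sign."""
    return sum(1 for a, b in zip(xs, xs[1:]) if a * b < 0)


steady = simulate(delay_steps=0)      # instant feedback
rhythmic = simulate(delay_steps=100)  # feedback arrives 1.0 time unit late

print("no lag:   sign changes =", zero_crossings(steady))
print("with lag: sign changes =", zero_crossings(rhythmic))
```

With no lag, the negative feedback simply pulls the state back to rest. With a long enough lag, the same feedback keeps overshooting, and the saturation keeps the swings bounded, so the system settles into a sustained back-and-forth rhythm with no external controller issuing commands at each step.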

This marks a significant leap from earlier soft robots that relied on external inputs to function. As He and colleagues outlined in a recent review, the integration of sensing, control, and actuation within the material itself allows for machines that not only react but also decide, adapt, and act independently.

The Future Is Soft (and Smart)

Soft robotics is still in its early stages, but its potential is enormous. Cecilia Laschi points to medical applications as among the most immediate: soft endoscopes that could both sense and respond to delicate human tissue in real time, or rehabilitation devices that adapt fluidly to a patient’s movements and needs.

In this emerging vision of robotics, intelligence doesn’t just reside in code or chips—it’s distributed throughout the machine’s form. These are not just softer robots. They’re smarter ones.

So, if we truly want to move from AI to AGI, machines may need bodies—specifically, bodies that are soft, adaptive, and capable of real-world interaction. After all, most life on Earth, including humans, learns by doing—by moving, touching, failing, and adjusting. We navigate a chaotic, unpredictable world not by logic alone, but through embodied experience. We understand what an apple is not because we read about it, but because we’ve held one, tasted it, dropped it, bruised it, cut it, squeezed it, and watched it rot.

This kind of tacit, sensory, contextual knowledge is almost impossible to convey through words or pixels alone. Language models, no matter how large, are limited by their disembodied perspective. But a robot that interacts with the physical world can bypass those limitations—and begin to develop an understanding of its environment that isn’t merely human-derived, but something new.

Imagine a soft robot equipped with non-human senses—infrared vision, low-frequency hearing, or the ability to smell disease at the molecular level. Its understanding of the world could be alien to ours, yet incredibly useful—an entirely different perspective on life on Earth.

Reference:

- https://newatlas.com/ai-humanoids/agi-embodied-intelligence-robots/

Cite this article:

Priyadharshini S (2025), Does AI Need a Body to Achieve Human-Like Intelligence?, AnaTechMaz, pp.347