New Security Protocol Protects Data from Attackers During Cloud Computing Operations

MIT researchers have developed a security protocol that uses the quantum properties of light to keep data secure during cloud-based deep-learning computations without reducing model accuracy. Deep-learning models, widely used in fields like health care and finance, rely on cloud servers for their computational power, which raises privacy concerns when the data are confidential, as in health care.

To address these concerns, the MIT team’s protocol encodes data into laser light used in fiber optic systems, leveraging quantum mechanics to prevent attackers from copying or intercepting information without detection. The technique maintains data security without sacrificing the accuracy of deep-learning models, achieving 96 percent accuracy in tests.

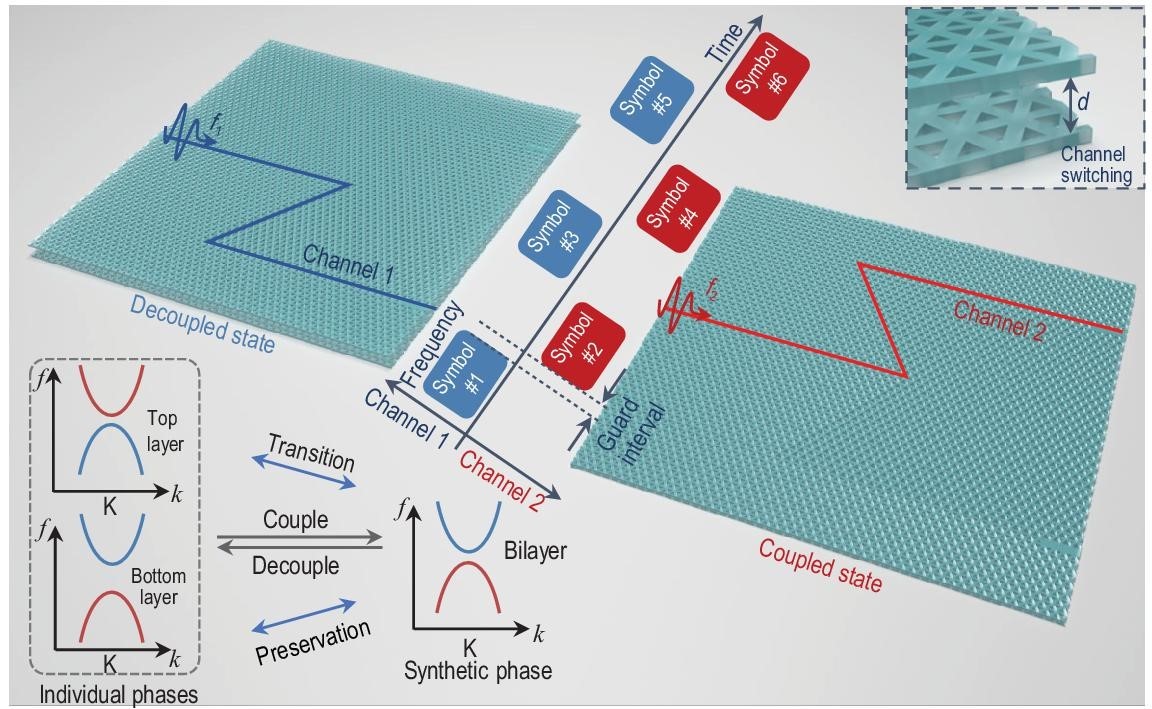

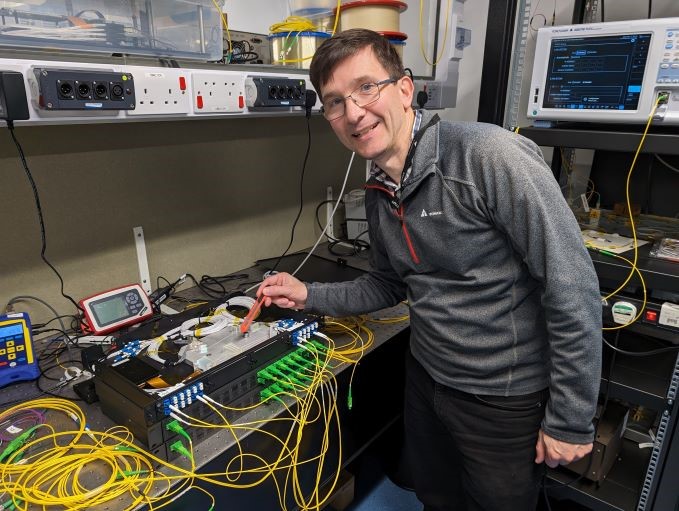

Figure 1. Enhanced Security Protocol Protects Data During Cloud Computing

"Deep learning models like GPT-4 have unprecedented capabilities but require massive computational resources. Our protocol allows users to tap into these powerful models while protecting data privacy and the proprietary nature of the models,” explains Kfir Sulimany, MIT postdoc at the Research Laboratory for Electronics (RLE) and lead author of the paper. Figure 1 shows "Enhanced Security Protocol Protects Data During Cloud Computing".

Sulimany co-authored the research with Sri Krishna Vadlamani, Ryan Hamerly, Prahlad Iyengar, and senior author Dirk Englund. The research was presented at the Annual Conference on Quantum Cryptography.

A Dual Approach to Security in Deep Learning

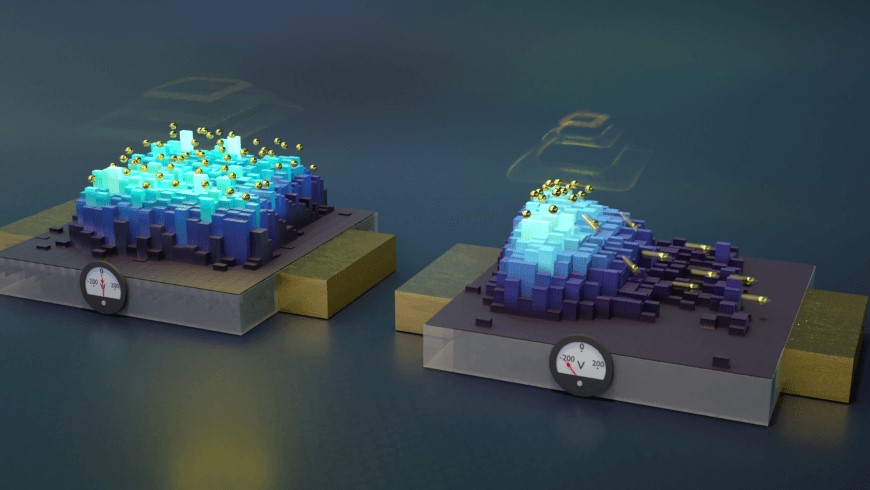

The cloud-based computation scenario examined by the researchers involves two parties: a client with confidential data, such as medical images, and a central server that controls a deep learning model. The client aims to use the model to make a prediction, like diagnosing cancer from medical images, while keeping the patient's data private.

Meanwhile, the server wants to protect its proprietary model, which may have taken years and significant investment to develop.

“Both parties have something they want to keep secret,” says Vadlamani.

In traditional digital computation, a malicious actor could easily copy data from either the client or the server. However, the researchers leverage the quantum no-cloning principle, which prevents perfect copying of quantum information, to enhance security.
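For readers who want the reasoning behind the no-cloning principle, the standard textbook argument is short. It is included here as background and is not taken from the researchers' paper; the copying operation $U$, the states $|\psi\rangle$ and $|\phi\rangle$, and the blank state $|0\rangle$ are notation assumed for this sketch. Suppose a single quantum operation could copy two different states:

$$
U\big(|\psi\rangle \otimes |0\rangle\big) = |\psi\rangle \otimes |\psi\rangle,
\qquad
U\big(|\phi\rangle \otimes |0\rangle\big) = |\phi\rangle \otimes |\phi\rangle .
$$

Quantum operations preserve inner products, so comparing the overlap of the two states before and after copying gives

$$
\langle \psi | \phi \rangle = \langle \psi | \phi \rangle^{2}
\quad\Longrightarrow\quad
\langle \psi | \phi \rangle \in \{0, 1\}.
$$

The same device can therefore copy only states that are identical or perfectly distinguishable (orthogonal). Arbitrary unknown states, such as those carried by light with encoded information, cannot be duplicated perfectly.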

In their protocol, the server encodes the weights of a deep neural network into an optical field using laser light. The weights are the parts of the model that carry out the mathematical operations on each input, one layer at a time. The server transmits them to the client, which applies them to its private data without exposing that data to the server. Because of the quantum nature of light, the client can measure only one result and cannot copy the weights.

Once the client feeds the first layer's result into the next layer, the protocol cancels out the first layer, so the client learns nothing further about the model. Measuring that result unavoidably introduces small errors, a consequence of the quantum limits on copying. The client sends the residual light, which carries these errors, back to the server. The server can analyze the errors to check whether any information was leaked, while the client’s data remains protected.

“Rather than measuring all the incoming light from the server, the client only measures what's needed to run the deep neural network and feeds the result into the next layer, then sends the remaining light back for security verification,” explains Sulimany.
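The exchange described above can be pictured with a purely classical toy model. The sketch below is only an illustration of the message flow, not the researchers' implementation: it uses ordinary NumPy arrays instead of light, and additive Gaussian noise as a stand-in for the disturbance that measurement imprints on the returned signal. It provides no actual security. All function names, the `noise_scale` parameter, and the `threshold` value are hypothetical.

```python
import numpy as np


def relu(x):
    """Element-wise nonlinearity applied by the client after each layer."""
    return np.maximum(x, 0.0)


def client_layer(weights, activations, rng, noise_scale):
    """Client side: compute one layer's output and return the residual.

    The residual stands in for the light sent back to the server. It is
    modeled as the received weights plus a small random disturbance
    (assumption: Gaussian noise represents the measurement disturbance).
    """
    output = relu(weights @ activations)
    residual = weights + rng.normal(0.0, noise_scale, size=weights.shape)
    return output, residual


def server_check(weights, residual, threshold):
    """Server side: compare the returned residual with what was sent.

    A relative error above the threshold is treated as a sign that the
    client extracted more than a single result.
    """
    error = np.linalg.norm(residual - weights) / np.linalg.norm(weights)
    return bool(error <= threshold)


def run_inference(layers, x, rng, noise_scale=1e-3, threshold=0.01):
    """Run the layer-by-layer exchange and collect the server's verdicts."""
    activations = x
    verdicts = []
    for weights in layers:
        # The client measures only the layer output it needs, feeds it
        # forward, and sends the remainder back for verification.
        activations, residual = client_layer(
            weights, activations, rng, noise_scale
        )
        verdicts.append(server_check(weights, residual, threshold))
    return activations, verdicts


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    layers = [rng.normal(size=(4, 3)), rng.normal(size=(2, 4))]
    x = rng.normal(size=3)

    prediction, verdicts = run_inference(layers, x, rng)
    print("prediction shape:", prediction.shape)
    print("server accepted every layer:", all(verdicts))
```

In this toy, a client that disturbs the returned signal only slightly passes the server's check at every layer, while calling `run_inference` with a large `noise_scale` (a crude stand-in for a client that measures too much) causes the server to reject. The real protocol relies on quantum physics for this guarantee, which a classical simulation cannot reproduce.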

An Effective Protocol

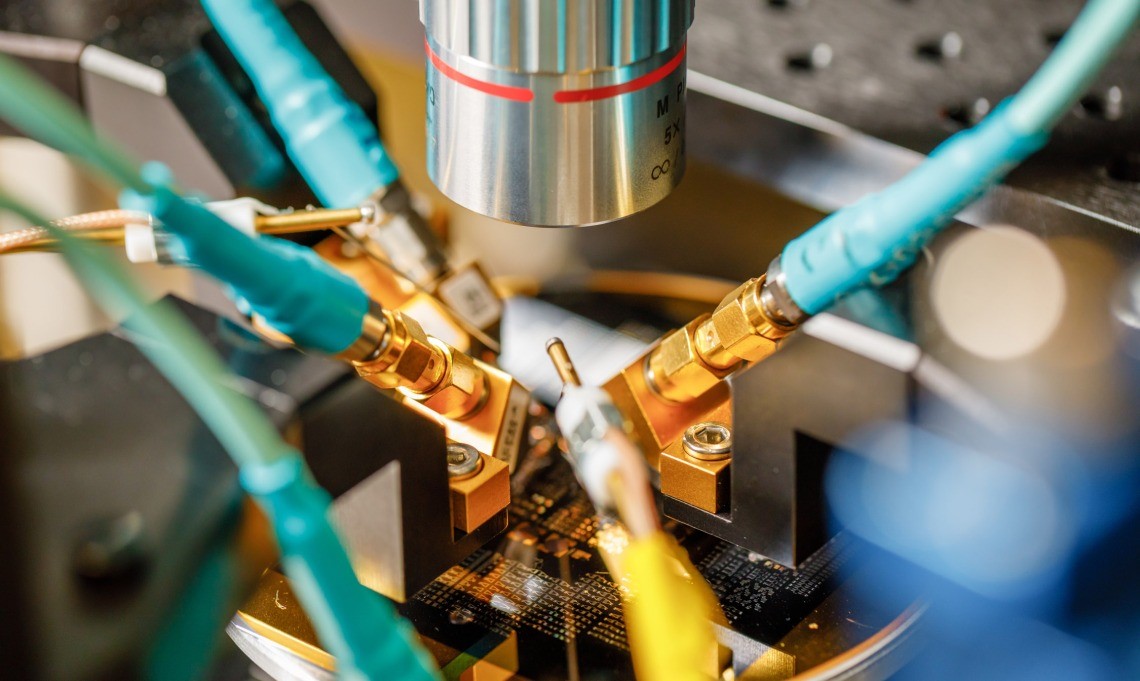

Modern telecommunications equipment typically uses optical fibers for information transfer, enabling high bandwidth over long distances. Since this equipment already incorporates optical lasers, researchers can seamlessly encode data into light for their security protocol without requiring special hardware.

In their tests, the researchers demonstrated that their approach protects both the server and the client while allowing the deep neural network to achieve 96 percent accuracy. The small amount of information about the model that leaks when the client performs operations is less than 10 percent of what an attacker would need to recover any hidden information. In the other direction, a malicious server could obtain only about 1 percent of the information it would need to compromise the client’s data.

“You can be assured of security in both directions—from the client to the server and vice versa,” says Sulimany.

Englund reflects on the origins of this research: “A few years ago, when we demonstrated distributed machine learning inference between MIT’s main campus and MIT Lincoln Laboratory, it occurred to me that we could innovate physical-layer security, building on years of work in quantum cryptography previously demonstrated on that testbed. However, we faced many theoretical challenges before realizing the potential for privacy-protected distributed machine learning. It wasn't until Kfir joined our team that we could develop a unified framework that addressed both the experimental and theoretical aspects.”

Looking ahead, the researchers plan to explore how this protocol can be applied to federated learning, where multiple parties collaborate to train a central deep-learning model. They also aim to investigate its use in quantum operations, which may offer enhanced accuracy and security compared to the classical operations studied in this work.

“This work cleverly combines techniques from fields that typically don’t intersect—deep learning and quantum key distribution. By incorporating methods from the latter, it adds a security layer to the former while enabling what seems to be a feasible implementation. This could be significant for maintaining privacy in distributed architectures. I’m eager to see how the protocol performs under experimental imperfections and its practical applications,” says Eleni Diamanti, a CNRS research director at Sorbonne University in Paris, who was not involved in the research.

Source: MIT News

Cite this article:

Janani R (2024), New Security Protocol Protects Data from Attackers During Cloud Computing Operations, AnaTechMaz, pp. 131