Edge Computing

One definition of edge computing is any type of computer program that delivers low latency closer to where requests originate. Karim Arabi, in an IEEE DAC 2014 keynote and subsequently in an invited talk at MIT's MTL Seminar in 2015, defined edge computing broadly as all computing outside the cloud happening at the edge of the network, and more specifically in applications where real-time processing of data is required. In his definition, cloud computing operates on big data, while edge computing operates on "instant data", that is, real-time data generated by sensors or users.

Edge computing can also be described as pushing the frontier of computing applications, data, and services away from centralized nodes to the logical extremes of a network. It enables analytics and data gathering to occur at the source of the data. This approach requires leveraging resources that may not be continuously connected to a network [1], such as laptops, smartphones, tablets, and sensors. The bottom line is this: cloud and edge computing are both necessary to industrial operations.

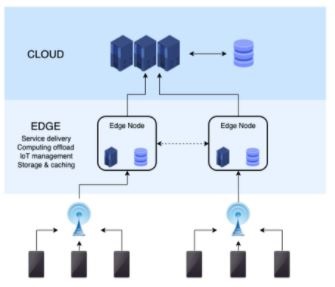

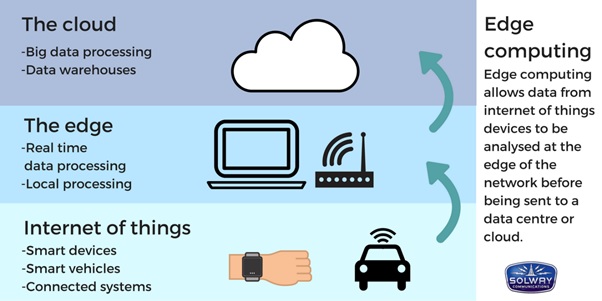

Edge computing is a distributed IT architecture that moves computing resources from clouds and data centers as close as possible to the source where data originates. The main goals of edge computing are to reduce latency when processing data and to save network costs (Figure 1).

Figure 1: Edge computing

The edge can be a router, an ISP, routing switches, integrated access devices (IADs), multiplexers, and so on. The most important property of this network edge is that it should be geographically close to the device.

Unlike the robotic arm on the space station, these robots are autonomous, which means they can work by themselves. They follow the commands people send: people use computers and powerful antennas to send messages to the spacecraft, the robots' antennas receive those messages, and the commands are passed to the robots' onboard computers, which then carry them out.

Benefits of Edge Computing

Edge computing has emerged as one of the most effective solutions to the network problems associated with moving the huge volumes of data [2] generated in today’s world. Here are some of the most important benefits of edge computing:

1. Reduces Latency

Latency refers to the time required to transfer data between two points on a network. Large physical distances between these two points, coupled with network congestion, can cause delays. Because edge computing brings the two points much closer to each other, latency is greatly reduced, although it never disappears entirely.
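The effect of distance on latency can be estimated with simple arithmetic. The sketch below assumes light travels through optical fiber at roughly 200,000 km/s (about 200 km per millisecond) and uses two hypothetical distances, 2,000 km to a cloud region and 20 km to an edge node. It computes only propagation delay, so it is a lower bound: queuing, routing, and processing delays are not modeled.

```python
# Assumption: light in optical fiber covers roughly 200 km per millisecond
# (about two-thirds of its speed in a vacuum).
FIBER_KM_PER_MS = 200.0


def propagation_rtt_ms(distance_km: float) -> float:
    """Round-trip propagation delay over fiber.

    Ignores queuing, routing and processing delays, so real latency is higher.
    """
    return 2 * distance_km / FIBER_KM_PER_MS


# Hypothetical distances chosen for illustration only.
cloud_rtt = propagation_rtt_ms(2000)  # distant cloud region
edge_rtt = propagation_rtt_ms(20)     # nearby edge node

print(f"cloud: {cloud_rtt:.1f} ms, edge: {edge_rtt:.1f} ms")  # cloud: 20.0 ms, edge: 0.2 ms
```

Moving the processing point 100 times closer cuts the propagation part of the round trip by the same factor, but the remaining delays mean latency shrinks rather than vanishes.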

2. Saves Bandwidth

Bandwidth refers to the maximum rate at which data can be transferred over a network. Because every network has limited bandwidth, the volume of data that can be transferred and the number of devices that can use it are limited as well [3]. By deploying servers at the points where data is generated, edge computing processes much of that data locally, so many devices can operate while sending far less traffic over the network.
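One common way an edge node saves bandwidth is by summarizing raw readings before sending them upstream. The following is a minimal sketch under assumed conditions: a hypothetical sensor sampling once per second for an hour, an edge node that sends one summary (count, min, max, mean) per minute, and JSON as the wire format. The numbers are simulated, not measured.

```python
import json
import random
from statistics import mean


def summarize(readings: list[float]) -> dict:
    """Reduce a window of raw readings to a compact summary for upstream transfer."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(mean(readings), 2),
    }


random.seed(0)
# Hypothetical workload: one temperature sensor sampled once per second for an hour.
raw = [round(20 + random.uniform(-0.5, 0.5), 3) for _ in range(3600)]

# Without edge processing, every reading crosses the network.
raw_bytes = len(json.dumps(raw).encode())

# With edge processing, only one summary per 60-reading window is sent.
summaries = [summarize(raw[i:i + 60]) for i in range(0, len(raw), 60)]
edge_bytes = len(json.dumps(summaries).encode())

print(f"raw: {raw_bytes} bytes, summarized: {edge_bytes} bytes")
```

The summaries are several times smaller than the raw stream, but the individual readings never leave the edge node, which is exactly the trade-off described under "Incomplete Data".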

3. Reduces Congestion

Although the Internet has evolved over the years, the volume of data produced every day across billions of devices can cause high levels of congestion. Edge computing relieves this by storing and processing data locally, so less traffic has to cross the wider network, and local servers can continue to perform essential edge analytics even in the event of a network outage.
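The ability to keep working through an outage usually comes from a store-and-forward pattern: analyze locally, buffer results, and upload them when the network returns. The class below is a hypothetical sketch of that pattern, not a specific product's API; the `EdgeNode` name, the alert threshold of 80, and the buffer size are all illustrative assumptions, and the `sent` list merely stands in for data delivered to the cloud.

```python
from collections import deque


class EdgeNode:
    """Hypothetical edge node: analyzes data locally and buffers uploads while offline."""

    def __init__(self, max_buffer: int = 1000):
        # Bounded local storage; when full, deque(maxlen=...) silently drops the oldest item.
        self.buffer: deque = deque(maxlen=max_buffer)
        self.uplink_up = True
        self.sent: list = []  # stands in for results delivered to the cloud

    def process(self, reading: float) -> str:
        # Essential analytics run on the node itself, so they work during an outage.
        alert = "ALERT" if reading > 80 else "ok"  # illustrative threshold
        self.buffer.append((reading, alert))
        self.flush()
        return alert

    def flush(self) -> None:
        # Forward buffered results only while the network is available.
        while self.uplink_up and self.buffer:
            self.sent.append(self.buffer.popleft())


node = EdgeNode()
node.process(42.0)
node.uplink_up = False     # simulated network outage
print(node.process(95.0))  # ALERT (computed locally)
print(len(node.buffer))    # 1 result waiting on the node
node.uplink_up = True
node.flush()
print(len(node.sent))      # 2 results delivered after recovery
```

Bounding the buffer is a deliberate design choice: local storage is finite, so a long outage eventually forces the node to discard old data rather than run out of memory.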

Drawbacks of Edge Computing

Although edge computing offers a number of benefits, it is still a fairly new technology and far from being foolproof. Here are some of the most significant drawbacks of edge computing:

1. Implementation Costs

Implementing an edge infrastructure in an organization can be both complex and expensive. It requires a clear scope and purpose before deployment, as well as additional equipment and resources to operate.

2. Incomplete Data

Edge computing can only process partial sets of information, which should be clearly defined during implementation. Because only this subset is processed and kept, companies may end up losing valuable data and information.

3. Security

Since edge computing is a distributed system, ensuring adequate security can be challenging. Processing data at the edge, outside the centralized core of the network, carries risks [4]. Adding new IoT devices also gives attackers more opportunities to infiltrate the system.

Examples and Use Cases

One common application of edge computing is in smart home devices. In smart homes, a number of IoT devices collect data from around the house. In a conventional setup, that data is sent to a remote server where it is stored and processed, an architecture that can cause a number of problems in the event of a network outage. Edge computing brings data storage and processing close to the smart home, reducing backhaul costs and latency.
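As an illustration of processing close to the home, the sketch below models a hypothetical local hub that evaluates automation rules on-site, so a motion sensor can switch on a light even when the remote server is unreachable. The `LocalHub` class, the device names, and the rule table are invented for this example; real smart-home platforms define their own rule formats.

```python
from dataclasses import dataclass, field

# Hypothetical automation rules: (device, value) -> (target device, new state).
RULES = {
    ("hallway_motion", "detected"): ("hallway_light", "on"),
    ("front_door", "open"): ("porch_light", "on"),
}


@dataclass
class LocalHub:
    """Hypothetical smart-home hub that evaluates rules on-site instead of on a remote server."""
    cloud_online: bool = True
    states: dict = field(default_factory=dict)
    unsynced: int = 0  # events that could not be reported to the remote server

    def on_event(self, device: str, value: str) -> None:
        action = RULES.get((device, value))
        if action:
            target, state = action
            self.states[target] = state  # actuated locally, no round trip to the cloud
        if not self.cloud_online:
            self.unsynced += 1  # reporting is deferred, but the rule above still ran


hub = LocalHub(cloud_online=False)  # simulated network outage
hub.on_event("hallway_motion", "detected")
print(hub.states)    # {'hallway_light': 'on'}
print(hub.unsynced)  # 1
```

In a cloud-only design the same event would have to reach the remote server before any rule could fire, so an outage would leave the light off.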

Another use case of edge computing is in the cloud gaming industry. Cloud gaming companies are looking to deploy their servers [5] as close to gamers as possible. This reduces lag and provides a more immersive gaming experience (Figure 2).
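Deploying servers near players only helps if each player is routed to a nearby one. A simple sketch of that selection step picks the geographically closest server using the haversine great-circle formula. The server names and approximate coordinates below are hypothetical, and distance is only a proxy: production systems typically measure actual round-trip times as well.

```python
import math

# Hypothetical server locations (latitude, longitude in degrees), for illustration only.
SERVERS = {
    "chennai-edge": (13.08, 80.27),
    "mumbai-edge": (19.08, 72.88),
    "singapore-edge": (1.35, 103.82),
    "frankfurt-cloud": (50.11, 8.68),
}

EARTH_RADIUS_KM = 6371.0


def haversine_km(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Great-circle distance in kilometres between two (lat, lon) points."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def nearest_server(player: tuple[float, float]) -> str:
    """Pick the geographically closest server as a proxy for the lowest latency."""
    return min(SERVERS, key=lambda name: haversine_km(player, SERVERS[name]))


player_location = (12.97, 77.59)  # a player in Bengaluru
print(nearest_server(player_location))  # chennai-edge
```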

Figure 2: Cloud, edge, and Internet of Things

References:

- https://www.ibm.com/cloud/what-is-edge-computing

- https://en.wikipedia.org/wiki/Edge_computing

- https://www.javatpoint.com/what-is-edge-computing

- https://www.simplilearn.com/what-is-edge-computing-article

- https://www.redhat.com/en/topics/edge-computing/what-is-multi-acces

Cite this article:

S. Nandhini Dwaraka (2021), Edge computing, AnaTechmaz, pp. 9