Efficient High-Performance Computing with Less Code

The Exo 2 programming language lets developers build reusable scheduling libraries outside the compiler. Companies typically invest heavily in engineering talent to develop high-performance libraries for AI systems, with NVIDIA leading the way: its advanced HPC libraries give it a competitive advantage. Exo 2 challenges this model by enabling a small team of students to write HPC libraries in just a few hundred lines of code, potentially rivaling the performance of existing state-of-the-art implementations.

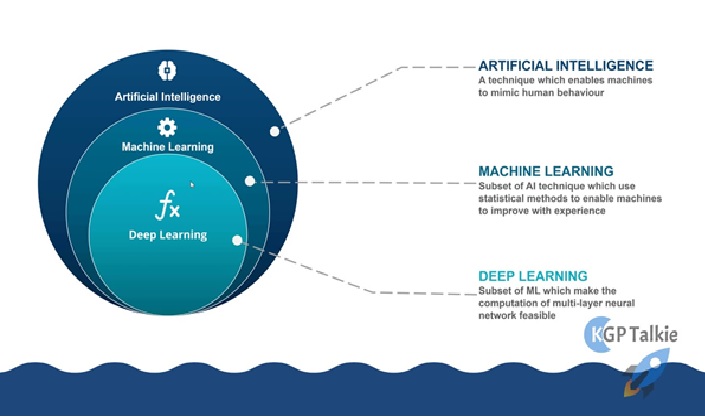

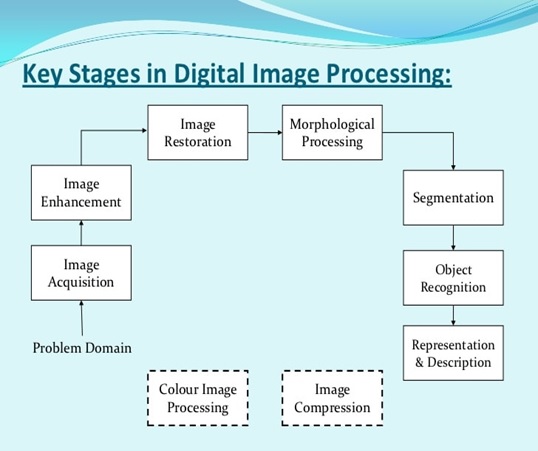

Figure 1. Efficient High-Performance Computing with Less Code

Researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have introduced Exo 2, a programming language in the category of "user-schedulable languages" (USLs), a term coined by MIT Professor Jonathan Ragan-Kelley. In a traditional language, the compiler decides automatically how to generate optimized code. In a USL, the programmer instead writes "schedules" that explicitly direct the compiler on how to generate more efficient code. This lets performance engineers transform a simple program into a highly optimized one that runs significantly faster while computing exactly the same result. Figure 1 illustrates efficient high-performance computing with less code.
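To make the idea of a schedule concrete, here is a minimal sketch in plain Python rather than Exo 2 itself. It assumes a hand-applied loop tiling as the "schedule": `matmul_naive` is the simple program, and `matmul_tiled` is what a tiling transformation might produce. The function names and the `tile` parameter are illustrative assumptions, not part of Exo 2.

```python
def matmul_naive(A, B):
    """Simple program: a plain triple loop over i, j, p."""
    n, k, m = len(A), len(B), len(B[0])
    C = [[0] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            for p in range(k):
                C[i][j] += A[i][p] * B[p][j]
    return C


def matmul_tiled(A, B, tile=2):
    """Same computation after tiling the i and j loops (hypothetical schedule)."""
    n, k, m = len(A), len(B), len(B[0])
    C = [[0] * m for _ in range(n)]
    for i0 in range(0, n, tile):          # outer loops walk over tiles
        for j0 in range(0, m, tile):
            for i in range(i0, min(i0 + tile, n)):      # inner loops stay in one tile
                for j in range(j0, min(j0 + tile, m)):
                    for p in range(k):
                        C[i][j] += A[i][p] * B[p][j]
    return C


A = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
B = [[1, 0], [0, 1], [2, 2]]
same_result = matmul_naive(A, B) == matmul_tiled(A, B)
```

Only the order in which the loop iterations run differs between the two functions; the arithmetic is identical. In a USL the programmer would state the tiling as a scheduling instruction instead of rewriting the loops by hand.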

Exo 2 overcomes a key limitation of earlier USLs, such as the original Exo, by allowing users to define new scheduling operations outside the compiler. These operations can then be packaged into reusable scheduling libraries instead of being rewritten for every kernel. According to lead author Yuka Ikarashi, an MIT PhD student in electrical engineering and computer science, Exo 2 can reduce scheduling code by a factor of 100 while achieving performance on par with state-of-the-art implementations on multiple platforms, including for the Basic Linear Algebra Subprograms (BLAS) used in machine learning. This makes Exo 2 attractive to high-performance computing (HPC) engineers who must optimize kernels across many operations, data types, and architectures.
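The following toy model sketches what defining a scheduling operation outside the compiler can look like. It is not Exo 2's API: `Nest`, `split`, `swap`, `tile2d`, and `points` are hypothetical names invented for this illustration. Two small primitives stand in for compiler-provided rewrites, and `tile2d` is a library-level operation composed entirely from them.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Nest:
    loops: tuple        # ((name, extent), ...), outermost loop first
    splits: tuple = ()  # ((orig, outer, inner, factor), ...), in the order applied


def split(nest, name, factor):
    """Primitive: replace loop `name` with an outer/inner pair."""
    extents = dict(nest.loops)
    if name not in extents:
        raise KeyError(name)
    if extents[name] % factor != 0:
        raise ValueError("factor must divide the loop extent in this toy model")
    outer, inner = name + "o", name + "i"
    loops = []
    for n, ext in nest.loops:
        if n == name:
            loops += [(outer, ext // factor), (inner, factor)]
        else:
            loops.append((n, ext))
    return Nest(tuple(loops), nest.splits + ((name, outer, inner, factor),))


def swap(nest, a, b):
    """Primitive: exchange the positions of loops `a` and `b`."""
    names = [n for n, _ in nest.loops]
    ia, ib = names.index(a), names.index(b)
    loops = list(nest.loops)
    loops[ia], loops[ib] = loops[ib], loops[ia]
    return Nest(tuple(loops), nest.splits)


def tile2d(nest, a, b, ta, tb):
    """Library-level operation built only from the primitives above."""
    nest = split(nest, a, ta)
    nest = split(nest, b, tb)
    return swap(nest, a + "i", b + "o")


def points(nest, originals):
    """Enumerate the original index tuples in the order the nest visits them."""
    names = [n for n, _ in nest.loops]
    for values in product(*(range(ext) for _, ext in nest.loops)):
        env = dict(zip(names, values))
        # Undo the splits, most recent first, to recover the original indices.
        for orig, outer, inner, factor in reversed(nest.splits):
            env[orig] = env[outer] * factor + env[inner]
        yield tuple(env[o] for o in originals)


base = Nest((("i", 4), ("j", 6)))
tiled = tile2d(base, "i", "j", 2, 3)
```

Because `tile2d` is an ordinary function, it can be reused on any loop nest and combined with other library operations. The tiled nest visits the same `(i, j)` points as the original, only in a different order, which mirrors the requirement that scheduling must not change the underlying computation.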

Exo 2 takes a bottom-up approach to automation: performance engineers and hardware implementers write their own scheduling libraries to optimize for their hardware. A key advantage is reduced coding effort, because the same scheduling code can be reused across applications and hardware targets. The researchers wrote a scheduling library of about 2,000 lines of Exo 2 code that consolidates optimizations for linear algebra and for specific hardware targets (AVX-512, AVX2, Neon, and Gemmini accelerators). This library achieved performance comparable to or better than MKL, OpenBLAS, BLIS, and Halide. Exo 2 also introduces a novel mechanism called "Cursors," which provide a stable reference to a location in the object code during scheduling, so that scheduling code does not break when the object code is transformed.
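The value of a stable reference can be shown with a loose analogy in plain Python. This sketch does not describe how Exo 2 implements Cursors; the `Stmt` and `Cursor` classes and the statement strings are assumptions made up for illustration. It contrasts a raw positional index, which goes stale when the program is edited, with a reference that tracks the statement itself.

```python
class Stmt:
    """A toy object-code statement."""

    def __init__(self, text):
        self.text = text


class Cursor:
    """Hypothetical stable reference: tracks a statement by identity, not position."""

    def __init__(self, program, stmt):
        self._program = program
        self._stmt = stmt

    def index(self):
        """Current position of the tracked statement, recomputed on demand."""
        for pos, stmt in enumerate(self._program):
            if stmt is self._stmt:
                return pos
        raise LookupError("statement is no longer in the program")


program = [Stmt("load a"), Stmt("mul a, b"), Stmt("store c")]

raw_index = 1                          # fragile: a plain position
cursor = Cursor(program, program[1])   # stable: follows the statement itself

# A transformation inserts a new statement at the front of the program.
program.insert(0, Stmt("prefetch a"))

stale = program[raw_index].text           # no longer the multiply
tracked = program[cursor.index()].text    # still the multiply
```

After the insertion, the raw index points at a different statement, while the cursor still resolves to the multiply. Scheduling code written against stable references of this kind can keep working after earlier transformations have reshaped the program.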

The team believes USLs should be user-extensible, so that the language can grow through libraries that accommodate diverse optimization needs. Exo 2 lets performance engineers focus on high-level optimizations while guaranteeing that the underlying object code remains functionally equivalent. The researchers aim to expand Exo 2's support for hardware accelerators such as GPUs and to improve the compiler's analysis in terms of correctness, compilation time, and expressivity. This research, co-authored by Ikarashi and Ragan-Kelley, was funded by DARPA and the U.S. National Science Foundation, and was supported by various fellowships.

Source: MIT News

Cite this article:

Janani R (2025), Efficient High-Performance Computing with Less Code, AnaTechMaz, pp. 343