Overview of Hadoop Architecture

Apache Hadoop is an open-source software framework for developing data processing applications that run in a distributed computing environment.

Applications built using Hadoop run on large data sets distributed across clusters of commodity computers. Commodity computers are cheap and widely available, which makes them useful for achieving greater computational power at low cost. [2]

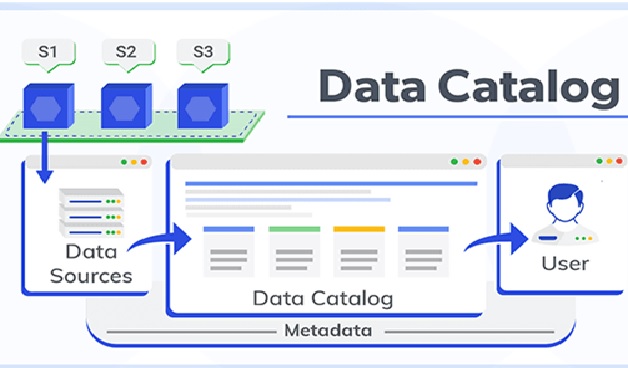

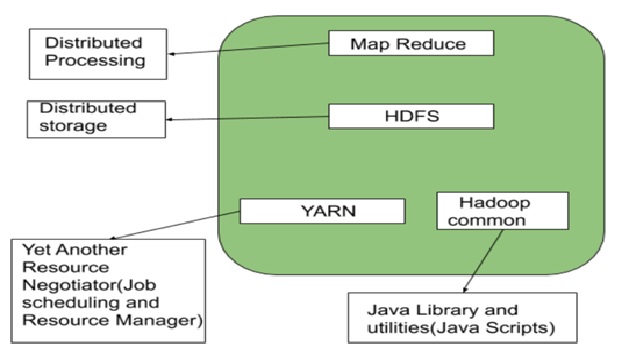

Figure 1. Overview of Hadoop Architecture

As Figure 1 shows, the Hadoop architecture packages together a file system, HDFS (the Hadoop Distributed File System), and a MapReduce engine. The MapReduce engine can be either MapReduce/MR1 or YARN/MR2.

A Hadoop cluster consists of a single master node and multiple slave nodes. The master node includes the JobTracker, TaskTracker, NameNode, and DataNode, whereas each slave node includes a DataNode and a TaskTracker. [3]
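To make the master and slave roles more concrete, the following is a minimal sketch of the bookkeeping a NameNode performs: it splits a file into fixed-size blocks and records which DataNodes hold each replica. The 128 MB block size and replication factor of 3 match the HDFS defaults in Hadoop 2 and later. The `plan_blocks` helper, the DataNode names, and the round-robin placement are assumptions made for illustration only; real HDFS uses a rack-aware placement policy.

```python
import math

BLOCK_SIZE = 128 * 1024 * 1024  # 128 MB, the default HDFS block size in Hadoop 2+
REPLICATION = 3                 # default HDFS replication factor


def plan_blocks(file_size, datanodes, block_size=BLOCK_SIZE, replication=REPLICATION):
    """Return a list of (block_index, [datanode, ...]) placements.

    Hypothetical helper, not part of any Hadoop API. Replicas are assigned
    round-robin over the DataNode list, which is a simplification of the
    rack-aware policy that HDFS actually applies.
    """
    if replication > len(datanodes):
        raise ValueError("need at least as many DataNodes as replicas")
    num_blocks = math.ceil(file_size / block_size)
    placements = []
    for block_index in range(num_blocks):
        # Pick `replication` distinct nodes, starting at a rotating offset.
        replicas = [
            datanodes[(block_index + r) % len(datanodes)]
            for r in range(replication)
        ]
        placements.append((block_index, replicas))
    return placements


if __name__ == "__main__":
    nodes = ["dn1", "dn2", "dn3", "dn4"]  # illustrative DataNode names
    for block, replicas in plan_blocks(300 * 1024 * 1024, nodes):
        print(block, replicas)
```

Under these assumptions, a 300 MB file is split into three blocks, and each block is stored on three different DataNodes, so the loss of a single slave node does not lose data.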

Hadoop is a framework written in Java that uses a large cluster of commodity hardware to store and maintain very large data sets. It is built on the MapReduce programming model, which was introduced by Google. Many large companies, for example Facebook, Yahoo, Netflix, and eBay, use Hadoop to handle big data. [4]

Hadoop Architecture Overview

Apache Hadoop offers a scalable, flexible, and reliable distributed computing framework for big data. It runs on a cluster of commodity machines, each contributing its own storage capacity and local computing power. Hadoop follows a master-slave architecture for transforming and analyzing large datasets using the Hadoop MapReduce paradigm.

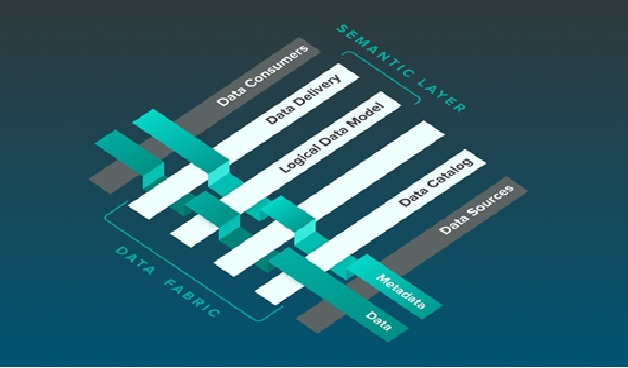

The components that play a vital role in the Hadoop architecture are:

- Hadoop Common – Contains the libraries and utilities that the other Hadoop modules require.

- Hadoop Distributed File System (HDFS) – A distributed file system that stores data on commodity machines, providing very high aggregate bandwidth across the cluster. It is patterned after the UNIX file system and provides POSIX-like semantics.

- Hadoop YARN – Introduced in 2012, this platform manages the computing resources in a cluster and uses them to schedule users' applications.

- Hadoop MapReduce – An implementation of the MapReduce programming model for large-scale data processing. Both Hadoop's MapReduce and HDFS were inspired by Google's papers on MapReduce and the Google File System.

- Hadoop Ozone – A scalable, redundant, distributed object store for Hadoop. It is a newer addition to the Hadoop family (introduced in 2020) and, unlike HDFS, it can handle both small and large files. [1]
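The MapReduce programming model mentioned in the list above can be illustrated with the classic word count. The sketch below is plain Python that runs in a single process; it does not use any Hadoop API, and the function names (`map_phase`, `shuffle_phase`, `reduce_phase`, `word_count`) are hypothetical names chosen for this illustration. On a real cluster, the map and reduce steps would run in parallel on many nodes, and the framework would perform the shuffle.

```python
from collections import defaultdict


def map_phase(line):
    """Mapper: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle_phase(pairs):
    """Shuffle: group all emitted values by key, as the framework would."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reducer: combine all values for one key into a single result."""
    return key, sum(values)


def word_count(lines):
    """Run map, shuffle, and reduce in sequence over the input lines."""
    mapped = [pair for line in lines for pair in map_phase(line)]
    grouped = shuffle_phase(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    print(word_count(["Hadoop stores data", "Hadoop processes data"]))
    # prints {'hadoop': 2, 'stores': 1, 'data': 2, 'processes': 1}
```

The design point this sketch is meant to convey is that the mapper and the reducer see only one line or one key at a time, which is what allows the work to be spread across many machines.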

References:

- [1] https://www.projectpro.io/article/hadoop-architecture-explained-what-it-is-and-why-it-matters/317

- [2] https://www.guru99.com/learn-hadoop-in-10-minutes.html

- [3] https://www.javatpoint.com/what-is-hadoop

- [4] https://www.geeksforgeeks.org/hadoop-architecture/

Cite this article:

Nandhinidwaraka S (2021) Overview of Hadoop Architecture, AnaTechMaz, pp. 31