IBM Lays Groundwork for Mainframe as The Ultimate AI Server

Upcoming mainframe upgrades, including multi-modal AI support, faster chips, and AI accelerators, promise significant advancements.

Figure 1. IBM Paves the Way for Mainframe as the Ultimate AI Server.

When IBM's next-generation z17 mainframe debuts this summer, it will introduce technologies designed to position Big Iron as the ultimate AI server (Figure 1).

The latest IBM Z and LinuxONE mainframes will incorporate the Telum II processor, Spyre AI accelerator, updated operating systems, and a suite of new software features tailored for AI inferencing and high-performance applications.

The Telum II processor offers more memory and cache capacity than its predecessor. It includes a specialized data processing unit (DPU) for I/O acceleration and enhanced on-chip AI capabilities. Featuring eight high-performance cores running at 5.5 GHz, Telum II offers a 40% boost in on-chip cache, with virtual L3 and L4 caches expanding to 360MB and 2.88GB, respectively.

Complementing the processor, IBM’s Spyre AI Accelerator will provide 1TB of memory and 32 AI accelerator cores, sharing a similar architecture with Telum II’s integrated AI accelerator. IBM also notes that multiple Spyre Accelerators can be connected to significantly expand available AI acceleration capacity.

Today's mainframe is already a powerhouse, delivering unmatched transaction throughput and scalability. These strengths will now be extended into the AI era, according to Meredith Stowell, vice president of ecosystems for Z and LinuxONE, who spoke with Network World.

“Telum and Spyre, in particular, are going to take the mainframe to the next level,” Stowell said. Beyond accelerating traditional AI, the technology will enable large language models (LLMs) to run on the platform, creating what IBM calls "multi-modal AI."

“That’s where you combine both traditional AI and LLMs simultaneously,” Stowell explained, “resulting in high performance and significantly improved accuracy for AI applications.”

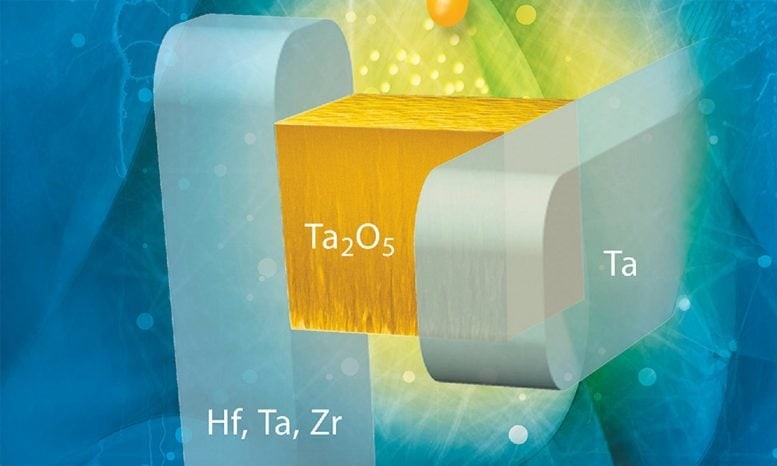

IBM’s Next-Generation Mainframe Processors

IBM’s upcoming mainframe processors will build on a legacy of continuous improvement, according to a new white paper on AI and the mainframe.

“They are projected to clock in at 5.5 GHz and include ten 36MB Level 2 caches,” IBM stated. “They’ll feature built-in low-latency data processing for accelerated I/O, along with a completely redesigned cache and chip-interconnection infrastructure to enhance on-chip cache and compute capacity.”
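The cache figures quoted in this article can be cross-checked with simple arithmetic. The short Python sketch below multiplies the ten 36MB Level 2 caches and compares the result with the 360MB virtual L3 figure and the 40% cache increase mentioned earlier. The previous-generation values (eight 32MB Level 2 caches) do not appear in this article; they are an assumption based on IBM's published figures for the first Telum chip, and all variable names are illustrative.

```python
# Figures quoted in the article for the upcoming processor.
L2_CACHES = 10             # "ten 36MB Level 2 caches"
L2_CACHE_MB = 36
QUOTED_VIRTUAL_L3_MB = 360

# ASSUMPTION (not stated in the article): the first-generation Telum
# is taken to have eight 32MB Level 2 caches.
PREV_L2_CACHES = 8
PREV_L2_CACHE_MB = 32


def total_cache_mb(count: int, size_mb: int) -> int:
    """Total on-chip L2 capacity in MB for `count` caches of `size_mb` each."""
    return count * size_mb


telum2_total = total_cache_mb(L2_CACHES, L2_CACHE_MB)
prev_total = total_cache_mb(PREV_L2_CACHES, PREV_L2_CACHE_MB)
growth_pct = (telum2_total - prev_total) / prev_total * 100

print(f"Telum II on-chip L2 total: {telum2_total} MB")
print(f"Matches quoted virtual L3: {telum2_total == QUOTED_VIRTUAL_L3_MB}")
print(f"Growth over assumed predecessor: {growth_pct:.1f}%")
```

Under that assumption, the pooled Level 2 capacity lines up with the quoted virtual L3 size, and the increase is consistent with the roughly 40% boost IBM cites.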

Current mainframes also incorporate extensions and accelerators that integrate seamlessly with core systems. These specialized add-ons are designed to support emerging technologies like Java, cloud computing, and AI by accelerating essential computing processes for high-volume, low-latency transaction processing.

Enhanced AI Acceleration for Mainframes

IBM’s next generation of AI accelerators will see major performance boosts, with each accelerator expected to deliver four times more compute power, reaching 24 trillion operations per second (TOPS), according to the company.

“The I/O and cache improvements will enable even faster processing and analysis of large datasets while consolidating workloads across multiple servers,” IBM stated. “This will lead to reduced data center space and power costs. Additionally, the new accelerators will provide greater capacity, allowing for enhanced in-transaction AI inferencing during each transaction cycle.”

The upcoming accelerator architecture is also designed for greater efficiency in AI workloads. Unlike a traditional CPU design, the new chip layout simplifies data transfer between compute engines and supports lower-precision numeric formats, reducing both energy consumption and memory usage.
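To see why lower-precision numeric formats reduce memory usage, consider how much space a model's weights occupy at different precisions. The Python sketch below is a rough illustration only: the one-billion-parameter model is hypothetical, and the three formats shown are common examples, not a statement of which formats IBM's accelerators support.

```python
# Bytes needed to store one value in each numeric format.
# These formats are illustrative examples, not a list of supported formats.
BYTES_PER_VALUE = {"fp32": 4, "fp16": 2, "int8": 1}


def weights_size_gb(num_params: int, fmt: str) -> float:
    """Approximate size of a model's weights in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_VALUE[fmt] / 1e9


# Hypothetical model size, chosen only to make the numbers easy to read.
NUM_PARAMS = 1_000_000_000

for fmt in BYTES_PER_VALUE:
    print(f"{fmt}: {weights_size_gb(NUM_PARAMS, fmt):.1f} GB")
```

Halving the width of each stored value halves the weight footprint, which also means less data has to move between memory and the compute engines for every inference.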

“These enhancements will allow mainframe users to run more complex AI models and perform AI inferencing at a scale previously unattainable,” IBM stated.

AI Inferencing and Hybrid Cloud Integration

While AI development has traditionally focused on model training, IBM’s mainframe is increasingly positioned to handle large-scale AI inferencing, meeting the growing needs of enterprises deploying AI in production environments.

“A lot of our clients were struggling to productionize AI because they couldn’t process as many transactions as they needed due to latency and limited processing power,” Stowell said. “That’s where Telum steps in, providing the capacity and power to accelerate inferencing.”

“Organizations may choose to train AI models in different environments—whether in a public cloud, private cloud, or on-premise,” Stowell explained. “Wherever they build and train these models, they can seamlessly transfer them to the mainframe for inferencing and deployment.”

IBM’s mainframe also supports multi-modal AI development, allowing it to process a variety of data types—audio, video, text, sensor data, and imaging—to create more sophisticated and intelligent AI models, Stowell noted.

IBM has been continuously enhancing its AI capabilities. In February, the company upgraded its Granite 3.2 LLM system to expand multi-modal AI functions. Additionally, IBM’s watsonx development environment receives ongoing updates to improve AI-powered features.

Enterprise adoption of AI on mainframes is already progressing rapidly. IBM research found that 78% of IT executives surveyed are either piloting AI projects or operationalizing AI initiatives on mainframes.

Industry insights further reinforce this trend. In September, Kyndryl reported that AI and generative AI are set to transform the mainframe landscape—helping businesses extract insights from complex unstructured data, enhance efficiency, and improve decision-making. Additionally, generative AI has the potential to analyze and modernize legacy applications, offering enterprises new ways to optimize their IT infrastructure.

Source: NETWORK WORLD

Cite this article:

Priyadharshini S (2025), IBM Lays Groundwork for Mainframe as The Ultimate AI Server, AnaTechMaz, pp. 579