A technique to improve both fairness and accuracy in artificial intelligence

For employees who use machine learning models to make decisions, knowing when to trust a model’s predictions isn’t always an easy task, especially since these models are often so complex that their inner workings remain a mystery.

Users sometimes employ a technique called selective regression, in which the model estimates its confidence level for each prediction and rejects predictions when that confidence is too low. A human can then examine those cases, gather additional information, and make a decision manually. [1]

Figure 1. Technique to improve both fairness and accuracy in artificial intelligence

However, while selective regression has been shown to improve the overall performance of a model, researchers at MIT and the MIT-IBM Watson AI Lab have found that the technique can have the opposite effect for underrepresented groups of people in a dataset (Figure 1).

For instance, a model suggesting loan approvals might make fewer errors on average, but it may actually make more wrong predictions for Black or female applicants. One reason this can occur is that the model’s confidence measure is trained using overrepresented groups and may not be accurate for these underrepresented groups. [2]
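The effect described above can be made concrete with a small evaluation sketch. The code below is illustrative only: the `Record` type, the `selective_mse_report` helper, the group labels "A" and "B", and every number in `records` are invented for this example and do not come from the researchers' data. It compares mean squared error overall and per group, before and after rejecting low-confidence predictions.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple, Sequence


class Record(NamedTuple):
    group: str         # hypothetical group label ("A" is overrepresented)
    y_true: float      # actual target value
    y_pred: float      # model prediction
    confidence: float  # model's confidence in that prediction, in [0, 1]


def mse(records: Sequence[Record]) -> float:
    """Mean squared error, or NaN when there is nothing to score."""
    if not records:
        return float("nan")
    return sum((r.y_true - r.y_pred) ** 2 for r in records) / len(records)


def selective_mse_report(records: Sequence[Record],
                         min_confidence: float) -> Dict[str, float]:
    """Score only the predictions the model would keep at this threshold."""
    accepted = [r for r in records if r.confidence >= min_confidence]
    by_group: Dict[str, List[Record]] = defaultdict(list)
    for r in accepted:
        by_group[r.group].append(r)
    report = {"overall": mse(accepted)}
    report.update({group: mse(rs) for group, rs in by_group.items()})
    return report


# Synthetic data: confidence tracks error well for group A, but the one
# high-confidence prediction for group B is the group's worst prediction.
records = [
    Record("A", 10.0, 10.5, 0.9), Record("A", 12.0, 12.5, 0.9),
    Record("A", 15.0, 14.5, 0.8), Record("A", 20.0, 20.5, 0.8),
    Record("A", 18.0, 21.0, 0.3), Record("A", 25.0, 21.0, 0.2),
    Record("B", 11.0, 14.0, 0.9), Record("B", 13.0, 13.5, 0.3),
    Record("B", 16.0, 16.5, 0.2),
]

print(selective_mse_report(records, min_confidence=0.0))  # keep everything
print(selective_mse_report(records, min_confidence=0.5))  # reject low confidence
```

On this toy data, raising the threshold lowers the overall error while the error for group B goes up, which is the pattern the paragraph describes.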

To predict or not to predict

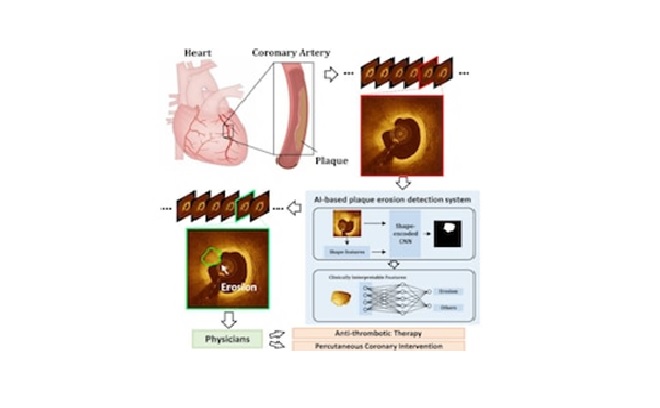

Regression is a technique that estimates the relationship between a dependent variable and independent variables. In machine learning, regression analysis is commonly used for prediction tasks, such as predicting the price of a home given its features (number of bedrooms, square footage, etc.). With selective regression, the machine-learning model can make one of two choices for each input: it can make a prediction, or it can abstain from a prediction if it doesn’t have enough confidence in its decision. [3]
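A minimal sketch of the predict-or-abstain choice follows. It is not the researchers' model: it assumes a simple nearest-neighbour regressor on one feature (square footage), uses the spread of the neighbours' prices as a stand-in for uncertainty, and abstains when that spread exceeds a threshold. The function names, the threshold, and the training data are all hypothetical.

```python
from statistics import mean, pstdev
from typing import List, Optional, Tuple

# (square footage, price in thousands); invented values for illustration.
TrainingSet = List[Tuple[float, float]]


def knn_predict_with_uncertainty(train: TrainingSet, x: float,
                                 k: int = 3) -> Tuple[float, float]:
    """Predict the mean target of the k nearest points and report the
    standard deviation of those targets as a rough uncertainty score."""
    neighbours = sorted(train, key=lambda pair: abs(pair[0] - x))[:k]
    targets = [y for _, y in neighbours]
    return mean(targets), pstdev(targets)


def selective_predict(train: TrainingSet, x: float,
                      max_uncertainty: float, k: int = 3) -> Optional[float]:
    """Return a prediction, or None to abstain and defer to a human."""
    prediction, uncertainty = knn_predict_with_uncertainty(train, x, k)
    if uncertainty > max_uncertainty:
        return None
    return prediction


train = [
    (800.0, 150.0), (850.0, 155.0), (900.0, 160.0),     # consistent prices
    (2000.0, 300.0), (2100.0, 520.0), (2200.0, 410.0),  # widely varying prices
]

print(selective_predict(train, 860.0, max_uncertainty=20.0))   # 155.0
print(selective_predict(train, 2100.0, max_uncertainty=20.0))  # None (abstains)
```

The threshold controls the trade-off: a lower `max_uncertainty` means fewer but more reliable predictions, and more cases handed to a human.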

Focus on fairness

The researchers developed two algorithms to address the problem. The first guarantees that the features the model uses to make predictions contain all information about the sensitive attributes in the dataset, such as race and sex, that is relevant to the target variable of interest. Sensitive attributes are features that may not be used for decisions, often due to laws or organizational policies. The second algorithm employs a calibration technique to ensure the model makes the same prediction for an input, regardless of whether any sensitive attributes are added to that input. [4]
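The property targeted by the second algorithm, that a prediction should not change when a sensitive attribute is added to the input, can be checked with a simple diagnostic. The sketch below is only such a check, not the researchers' calibration algorithm; `max_prediction_gap` and the three toy predictors are hypothetical names introduced for illustration.

```python
from typing import Callable, Iterable, Tuple

Sample = Tuple[float, str]  # (feature value, sensitive attribute)


def max_prediction_gap(predict_plain: Callable[[float], float],
                       predict_with_attribute: Callable[[float, str], float],
                       samples: Iterable[Sample]) -> float:
    """Largest change in prediction caused by also supplying the sensitive
    attribute. A gap of 0.0 means the attribute made no difference on
    these samples."""
    return max(abs(predict_plain(x) - predict_with_attribute(x, a))
               for x, a in samples)


# Toy predictors (assumptions, not real models).
def score_from_feature(x: float) -> float:
    return 2.0 * x


def score_ignoring_attribute(x: float, attribute: str) -> float:
    return 2.0 * x  # the sensitive attribute has no influence


def score_shifted_by_attribute(x: float, attribute: str) -> float:
    return 2.0 * x + (5.0 if attribute == "B" else 0.0)


samples = [(10.0, "A"), (20.0, "B"), (30.0, "A")]

print(max_prediction_gap(score_from_feature, score_ignoring_attribute, samples))    # 0.0
print(max_prediction_gap(score_from_feature, score_shifted_by_attribute, samples))  # 5.0
```

A nonzero gap only signals that the attribute changes the output on the tested samples; it says nothing about how to remove that dependence, which is what the calibration technique is for.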

The researchers plan to apply their solutions to other applications, such as predicting house prices, student GPA, or loan interest rates, to see if the algorithms need to be calibrated for those tasks, says Abhin Shah, a co-lead author of the study. They also want to explore techniques that use less sensitive information during the model training process to avoid privacy issues. [4]

References:

- https://badpi.com/a-technique-to-improve-both-fairness-and-accuracy-in-artificial-intelligence/

- https://news8plus.com/a-technique-to-improve-both-fairness-and-accuracy-in-artificial-intelligence/

- https://news.mit.edu/2022/fairness-accuracy-ai-models-0720

- https://techxplore.com/news/2022-07-technique-fairness-accuracy-artificial-intelligence.html

Cite this article:

Thanusri swetha J (2022), A technique to improve both fairness and accuracy in artificial intelligence, AnaTechMaz, pp.133