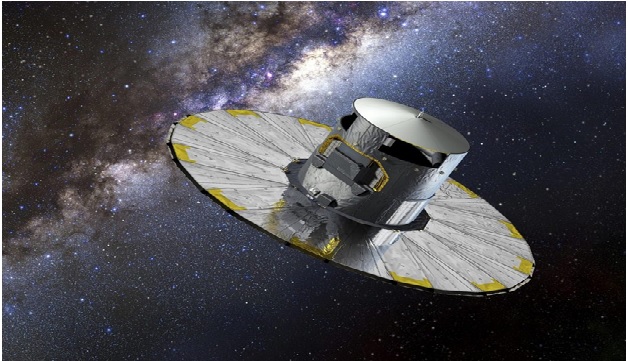

Eye imaging technology could help robots and cars see better

Even though robots don't have eyes with retinas, the key to helping them see and interact with the world more naturally and safely may rest in optical coherence tomography (OCT) machines commonly found in the offices of ophthalmologists. [1]

One of the imaging technologies that many robotics companies are integrating into their sensor packages is Light Detection and Ranging, or LiDAR for short. Currently commanding great attention and investment from self-driving car developers, the approach essentially works like radar, but instead of sending out broad radio waves and looking for reflections, it uses short pulses of light from lasers. [1]
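The pulse-and-echo idea behind time-of-flight ranging comes down to one relation: the light travels to the target and back, so the distance is half the round-trip time multiplied by the speed of light. The sketch below is a minimal illustration of that textbook relation, not code from any particular LiDAR product; the function names are hypothetical.

```python
# Speed of light in vacuum, in metres per second (exact SI value).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """Distance to a target, given the round-trip time of a light pulse."""
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The pulse travels out and back, so halve the total path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def round_trip_time_s(distance_m: float) -> float:
    """Inverse relation: how long a pulse takes to reach a target and return."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S
```

For a target 30 m away, `round_trip_time_s(30.0)` comes to roughly 200 nanoseconds, which gives a sense of how precisely the detector has to time very faint returns.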

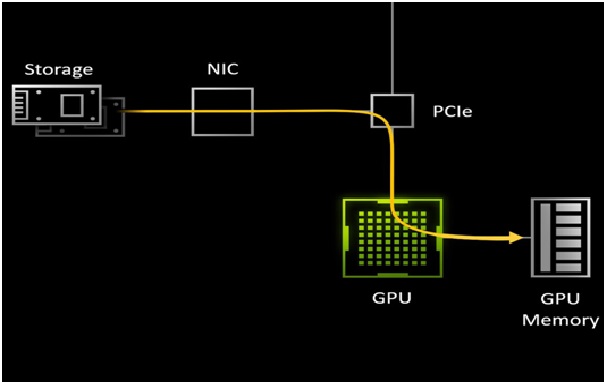

Figure 1. Eye imaging technology could help robots and cars see better

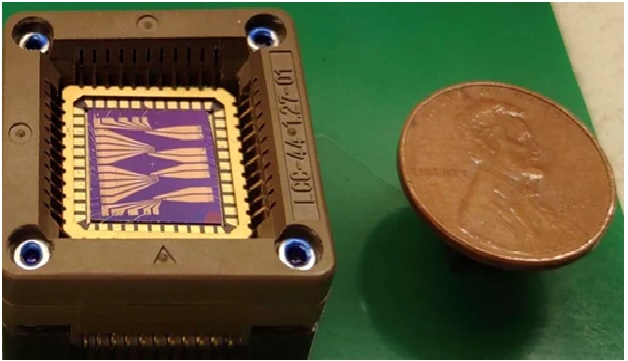

Traditional time-of-flight LiDAR, however, has many drawbacks that make it difficult to use in many 3D vision applications. Because it requires detection of very weak reflected light signals, other LiDAR systems or even ambient sunlight can easily overwhelm the detector. It also has limited depth resolution and can take a dangerously long time to densely scan a large area such as a highway or factory floor. To tackle these challenges, researchers are turning to a form of LiDAR called frequency-modulated continuous wave (FMCW) LiDAR. [2]

While OCT devices are used to profile microscopic structures up to several millimeters deep within an object, robotic 3D vision systems only need to locate the surfaces of human-scale objects. To accomplish this, the researchers narrowed the range of frequencies used by OCT and looked only for the peak signal generated from the surfaces of objects. This costs the system a little bit of resolution, but gives it much greater imaging range and speed than traditional LiDAR.
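The trade-off described here, a narrower frequency range in exchange for a little resolution, with the surface located by a single peak, can be sketched with the standard textbook FMCW relations. A linear frequency sweep of bandwidth B mixed with its own delayed reflection produces a beat tone whose frequency is proportional to range, and the depth resolution is c / (2B). The code below is an idealized, noise-free illustration under those assumptions, not the researchers' actual processing. The function names and the example values (1 GHz bandwidth, 10 µs sweep, 256 samples) are hypothetical.

```python
import cmath
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def range_resolution_m(bandwidth_hz: float) -> float:
    """Textbook FMCW depth resolution: a narrower sweep gives coarser bins."""
    return C / (2.0 * bandwidth_hz)


def beat_signal(target_range_m, bandwidth_hz, sweep_s, n_samples):
    """Simulate the ideal beat tone produced by one reflecting surface."""
    delay_s = 2.0 * target_range_m / C           # round-trip delay
    beat_hz = (bandwidth_hz / sweep_s) * delay_s  # sweep slope times delay
    dt = sweep_s / n_samples
    return [math.cos(2.0 * math.pi * beat_hz * i * dt) for i in range(n_samples)]


def peak_range_m(samples, bandwidth_hz):
    """Locate the strongest spectral peak and convert its bin to a range."""
    n = len(samples)
    best_bin, best_mag = 0, 0.0
    # Plain DFT over the positive-frequency bins, skipping the DC bin.
    for k in range(1, n // 2):
        acc = sum(s * cmath.exp(-2j * math.pi * k * i / n)
                  for i, s in enumerate(samples))
        if abs(acc) > best_mag:
            best_bin, best_mag = k, abs(acc)
    # Each DFT bin spans exactly one range-resolution cell.
    return best_bin * range_resolution_m(bandwidth_hz)


if __name__ == "__main__":
    bandwidth = 1e9  # assumed 1 GHz sweep, for illustration only
    signal = beat_signal(3.0, bandwidth, 10e-6, 256)
    print(peak_range_m(signal, bandwidth), range_resolution_m(bandwidth))
```

Halving `bandwidth` in this sketch doubles the size of each range cell, which is the resolution cost the paragraph mentions. Searching only for the single strongest peak, rather than reconstructing a full depth profile, is what keeps the per-point processing light.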

The result is an FMCW LiDAR system that achieves submillimeter localization accuracy with data throughput 25 times greater than previous demonstrations. The results show that the approach is fast and accurate enough to capture the details of moving human body parts, such as a nodding head or a clenching hand, in real time. [3]

“In much the same way that digital cameras have become ubiquitous, our vision is to develop a new generation of LiDAR-based 3D cameras which are fast and capable enough to enable integration of 3D vision into all sorts of products,” Izatt said. “The world around us is 3D, so if we want robots and other automated systems to interact with us naturally and safely, they need to be able to see us as well as we can see them.” [4]

References:

- https://www.sciencedaily.com/releases/2022/03/220329114712.htm

- https://techilive.in/how-eye-imaging-technology-could-help-robots-and-cars-see-better/

- https://news.softpedia.uk/2022/03/30/how-eye-imaging-technology-could-help-robots-and-cars-see-better-sciencedaily/

- https://ilmiwap.com/how-eye-imaging-technology-could-help-robots-and-cars-see-better/

Cite this article:

Thanusri swetha J (2022), Eye imaging technology could help robots and cars see better, AnaTechMaz, pp. 111