Local Interpretable Model-agnostic Explanations (LIME)

LIME (Local Interpretable Model-agnostic Explanations) is a machine learning technique for explaining the predictions a model makes on individual data instances.

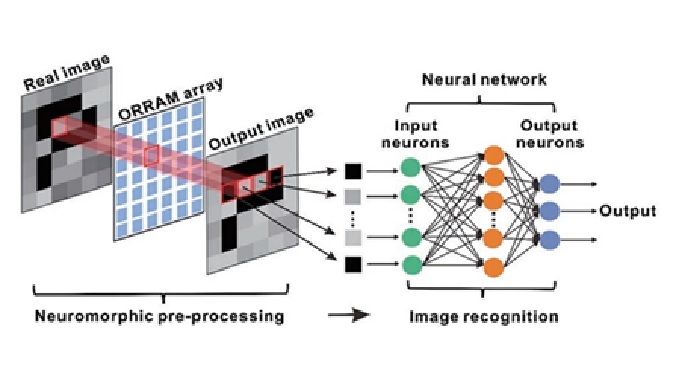

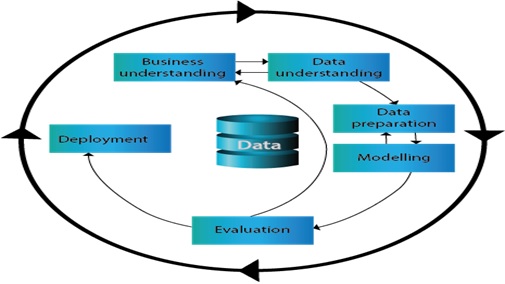

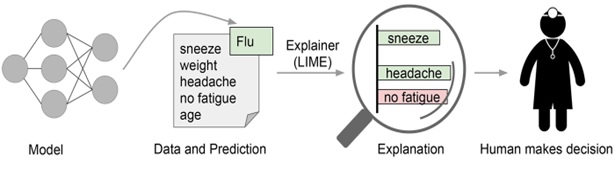

Figure 1. LIME [1]

Figure 1 shows the LIME process. To explain how a model arrived at a prediction, LIME approximates the model's behavior in the neighborhood of the instance of interest. It perturbs the instance, queries the original model on the perturbed samples, and trains a simple local model (typically linear) on those predictions, giving more weight to samples that lie close to the instance. The features that matter most to the local linear model are highlighted and presented as the explanation.
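The procedure described above can be sketched in a few lines of NumPy. This is a minimal illustration for tabular data, not the reference LIME implementation: the function name `explain_instance`, the Gaussian perturbation, the exponential proximity kernel, and all default values are assumptions made for this example, and `black_box` is a toy stand-in for a trained model.

```python
import numpy as np

def explain_instance(predict_fn, x, num_samples=1000, scale=0.5,
                     kernel_width=0.75, seed=0):
    """Fit a proximity-weighted linear surrogate around x (illustrative sketch)."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    d = x.shape[0]

    # 1. Perturb the instance with Gaussian noise (an assumed sampling scheme).
    Z = x + rng.normal(0.0, scale, size=(num_samples, d))

    # 2. Query the black-box model on the perturbed samples.
    y = predict_fn(Z)

    # 3. Weight each sample by its proximity to x (exponential kernel).
    dist = np.linalg.norm(Z - x, axis=1)
    width = kernel_width * np.sqrt(d)
    w = np.exp(-(dist ** 2) / (width ** 2))

    # 4. Solve weighted least squares: intercept plus one coefficient per feature.
    A = np.hstack([np.ones((num_samples, 1)), Z - x])
    sw = np.sqrt(w)
    coef, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)
    return coef[0], coef[1:]

def black_box(X):
    # Toy model: depends on features 0 and 1 and ignores feature 2.
    return 1.0 / (1.0 + np.exp(-(3.0 * X[:, 0] - 2.0 * X[:, 1])))

intercept, weights = explain_instance(black_box, [0.2, -0.1, 0.5])
ranking = np.argsort(-np.abs(weights))  # features ordered by local importance
print("local weights:", weights)
print("feature ranking:", ranking)
```

The coefficients of the surrogate play the role of the explanation: a large positive weight means that increasing the feature pushes the prediction up near this instance, and a weight close to zero means the feature has little local influence. Because the function only calls `predict_fn`, any model that exposes a prediction function could be passed in.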

The goal of LIME is to provide an explanation that is both interpretable to humans and faithful to how the model behaves around the instance being explained. It is a model-agnostic technique, meaning that it can be applied to any machine learning model regardless of the algorithm used to train it. LIME has been used in a range of applications, including image classification, natural language processing, and healthcare.
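The balance between interpretability and faithfulness can be written as an optimization problem. The notation below follows the formulation commonly given for LIME; it is not part of this article's sources, so the symbols are introduced here for illustration.

$$
\xi(x) = \operatorname*{arg\,min}_{g \in G} \; \mathcal{L}(f, g, \pi_x) + \Omega(g)
$$

Here $f$ is the original model, $G$ is a family of interpretable models (for example, linear models), $\pi_x$ is a proximity measure that defines the neighborhood of the instance $x$, $\mathcal{L}$ measures how poorly $g$ approximates $f$ within that neighborhood, and $\Omega(g)$ penalizes the complexity of the explanation. A typical choice is a weighted squared loss with an exponential kernel:

$$
\mathcal{L}(f, g, \pi_x) = \sum_{z} \pi_x(z)\,\bigl(f(z) - g(z)\bigr)^2,
\qquad
\pi_x(z) = \exp\!\left(-\frac{D(x, z)^2}{\sigma^2}\right)
$$

where the sum runs over the perturbed samples $z$, $D$ is a distance function, and $\sigma$ is the kernel width.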

Why is LIME Important?

For humans to trust AI systems, models must be explainable to their users. AI interpretability reveals what is happening inside these systems and helps identify potential issues related to information leakage, model bias, robustness, and causality. LIME offers a generic framework for opening up black-box models and provides the “why” behind AI-generated predictions or recommendations. [2]

How C3 AI Helps Organizations Compute LIME

The C3 AI Platform leverages LIME in two interpretability frameworks integrated in ML Studio: ELI5 (Explain Like I’m 5) and SHAP (SHapley Additive exPlanations). Both frameworks can be configured on ML Pipelines, C3 AI’s low-code, lightweight interface for configuring multi-step machine learning models. Data scientists use them during development to check that models are fair, unbiased, and robust; C3 AI’s customers use them in production to surface additional insights and facilitate user adoption. [2]

References:

- [1] https://www.oreilly.com/content/introduction-to-local-interpretable-model-agnostic-explanations-lime/

- [2] https://c3.ai/glossary/data-science/lime-local-interpretable-model-agnostic-explanations/

Cite this article:

Hana M (2023), Local Interpretable Model-agnostic Explanations (LIME), AnaTechMaz, pp.75