Pre-trained Language Models

The intuition behind pre-trained language models is to create a black box that understands a language and can then be asked to perform any specific task in that language. The idea is to create the machine equivalent of a ‘well-read’ human being.[1]

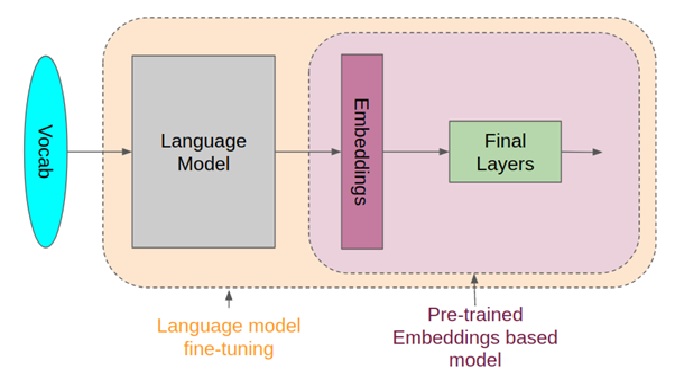

Figure 1. Pre-trained Language Models

Figure 1 illustrates pre-trained language models. Pre-trained models (PTMs) for NLP are deep learning models (such as transformers) that are first trained on a large dataset and can then be adapted to specific NLP tasks. When trained on a large corpus, PTMs can learn universal language representations, which benefit downstream NLP tasks and avoid the need to train a new model from scratch. In that sense, pre-trained models are reusable NLP models that developers can use to build an NLP application quickly.[2]

Popular real-world applications of pre-trained NLP models include the following:

- Named Entity Recognition (NER)

- Sentiment Analysis

- Machine Translation

- Text Summarization

- Natural Language Generation

- Speech Recognition

- Content Moderation

- Automated Question Answering Systems [3]

Pre-trained language models such as BERT, GPT, and XLNet have become very popular in NLP. These models are trained on large amounts of data and can be fine-tuned for specific tasks such as sentiment analysis, text classification, and question answering.
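The reuse pattern described above can be sketched in a few lines of Python. This is only a toy illustration under stated assumptions: the `PRETRAINED_VECTORS` table is a hand-written stand-in for the representations a real model such as BERT would provide, and the helper names (`encode`, `train_head`, `predict`) are hypothetical. It also shows the simpler frozen-encoder variant of transfer learning, where only a small task-specific head is trained, rather than full fine-tuning, which would update the pre-trained weights as well.

```python
import math

# Hypothetical "pre-trained" word vectors. In practice these would come from a
# large model trained on a big corpus; here they are tiny hand-written stand-ins.
PRETRAINED_VECTORS = {
    "great": [0.9, 0.1],
    "love": [0.8, 0.2],
    "good": [0.7, 0.3],
    "bad": [0.2, 0.8],
    "awful": [0.1, 0.9],
    "hate": [0.15, 0.85],
}
DIM = 2


def encode(text):
    """Frozen encoder: average the pre-trained vectors of the known words."""
    vectors = [PRETRAINED_VECTORS[w] for w in text.lower().split()
               if w in PRETRAINED_VECTORS]
    if not vectors:
        return [0.0] * DIM
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(examples, epochs=500, lr=0.5):
    """Train a small logistic-regression head; the encoder is never updated."""
    weights, bias = [0.0] * DIM, 0.0
    for _ in range(epochs):
        for text, label in examples:
            x = encode(text)
            pred = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            error = pred - label  # gradient of the log loss w.r.t. the logit
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias


def predict(text, weights, bias):
    """Return the estimated probability that the text is positive."""
    x = encode(text)
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


# A handful of labelled sentiment examples (1 = positive, 0 = negative).
train_data = [("great film", 1), ("love it", 1),
              ("awful plot", 0), ("bad acting", 0)]
weights, bias = train_head(train_data)

print(predict("good", weights, bias))  # word not seen in the training data
print(predict("hate", weights, bias))  # word not seen in the training data
```

Because the representations already place "good" near the positive words and "hate" near the negative ones, the head can classify both even though neither appears in the four training examples. That is the practical benefit of starting from pre-trained representations instead of training from scratch.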

In conclusion, pre-trained language models are a powerful tool for NLP and data science, and they are likely to continue to be an important area of research and development. However, it is important to be aware of their limitations and potential biases, and to use them in combination with other NLP techniques to ensure accurate and reliable results.

References:

- [1] https://towardsdatascience.com/pre-trained-language-models-simplified-b8ec80c62217

- [2] https://vitalflux.com/nlp-pre-trained-models-explained-with-examples/

- [3] https://vitalflux.com/nlp-pre-trained-models-explained-with-examples/

Cite this article:

Gokula Nandhini K (2023), Pre-trained Language Models, AnaTechMaz, pp.64