New Model Generates Audio and Music Tracks from Various Data Inputs

In recent years, computer scientists have developed a range of high-performing machine learning tools capable of generating text, images, videos, music, and other types of content. Most of these models are designed to produce content based on text-based instructions from users.

Researchers at the Hong Kong University of Science and Technology recently unveiled AudioX, a model capable of generating high-quality audio and music tracks using text, video footage, images, music, and audio recordings as inputs. Their model, introduced in a paper published on the arXiv preprint server, utilizes a diffusion transformer—an advanced machine learning algorithm that leverages transformer architecture to generate content by progressively de-noising the input data it receives.

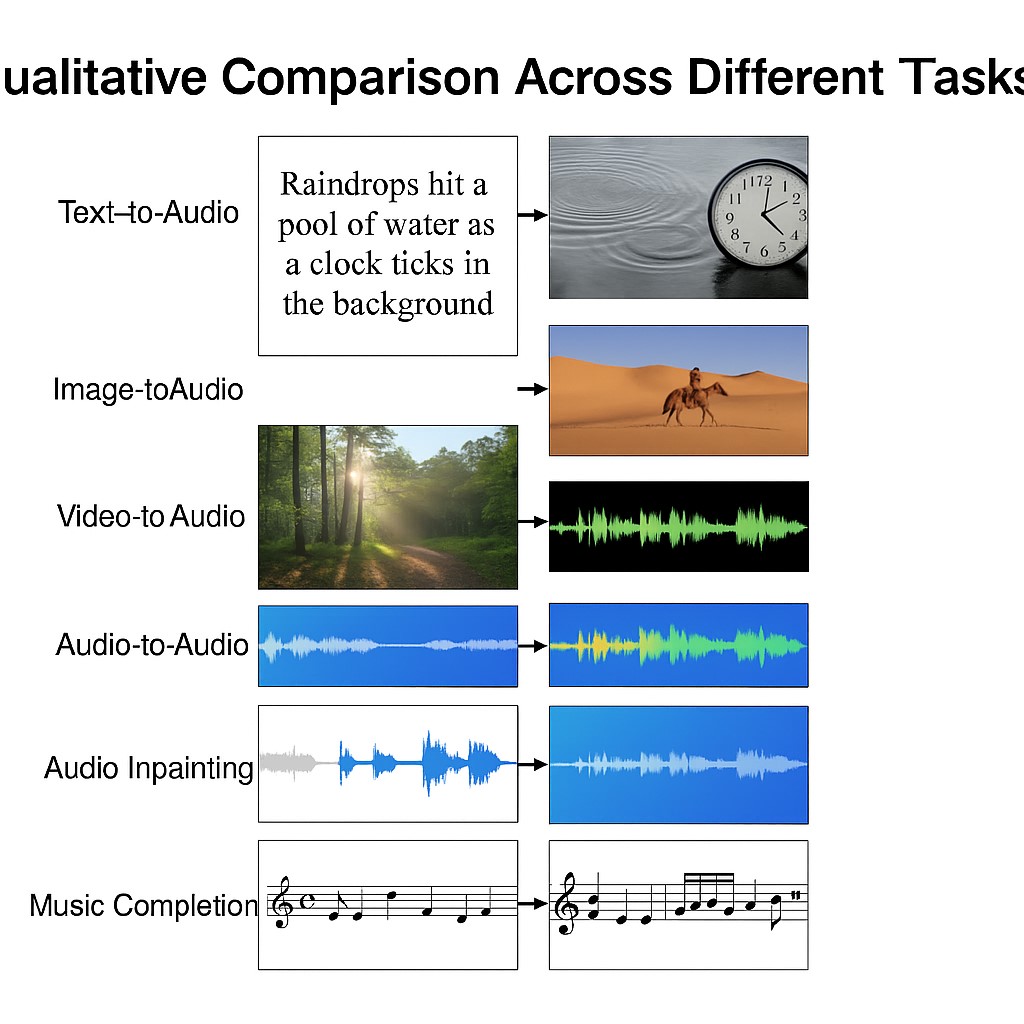

Figure 1. Qualitative Comparison of Different Tasks

"Our research originates from a fundamental question in artificial intelligence: how can intelligent systems achieve unified cross-modal understanding and generation?" Wei Xue, the corresponding author of the paper, shared with Tech Xplore. "Human creativity is a seamlessly integrated process, where information from different sensory channels is naturally fused by the brain [1]. Traditional systems have often relied on specialized models, which fail to capture and merge the intrinsic connections between modalities." Figure 1 shows Qualitative Comparison of Different Tasks.

The primary objective of the recent study led by Wei Xue, Yike Guo, and their colleagues was to develop a unified representation learning framework. This framework enables a single model to process information across various modalities (such as text, images, videos, and audio tracks), eliminating the need for combining separate models that are restricted to processing specific types of data.

"We aim to enable AI systems to form cross-modal concept networks similar to the human brain," said Xue. "AudioX, the model we developed, represents a paradigm shift, designed to tackle the dual challenge of conceptual and temporal alignment. In other words, it addresses both the 'what' (conceptual alignment) and 'when' (temporal alignment) questions simultaneously. Our ultimate goal is to build world models capable of predicting and generating multimodal sequences that stay consistent with reality."

The new diffusion transformer-based model developed by the researchers can generate high-quality audio or music tracks using any input data as guidance. This ability to transform "anything" into audio unlocks new possibilities for the entertainment industry and creative professions. For instance, it allows users to create music that aligns with a specific visual scene or to use a combination of inputs (such as text and video) to guide the generation of desired tracks.

"AudioX is built on a diffusion transformer architecture, but what distinguishes it is the multi-modal masking strategy," explained Xue. "This strategy fundamentally reimagines how machines learn to understand the relationships between different types of information.

"By obscuring elements across input modalities during training—such as selectively removing patches from video frames, tokens from text, or segments from audio—and training the model to recover the missing information from other modalities, we create a unified representation space."

AudioX is one of the first models to combine linguistic descriptions, visual scenes, and audio patterns, capturing both the semantic meaning and rhythmic structure of this multi-modal data. Its unique design allows it to establish associations between different types of data, much like how the human brain integrates information from various senses (e.g., vision, hearing, taste, smell, and touch).

"AudioX is, by far, the most comprehensive any-to-audio foundation model, with several key advantages," said Xue. "Firstly, it is a unified framework that supports a wide range of tasks within a single model architecture. It also enables cross-modal integration through our multi-modal masked training strategy, creating a unified representation space. Additionally, it boasts versatile generation capabilities, capable of handling both general audio and music with high quality, trained on large-scale datasets, including our newly curated collections."

In initial tests, the new model developed by Xue and his colleagues was found to produce high-quality audio and music tracks, successfully integrating text, video, images, and audio [2]. Its most remarkable feature is that it does not rely on combining different models, but instead uses a single diffusion transformer to process and integrate various types of inputs.

"AudioX supports a wide range of tasks within one architecture, from text/video-to-audio to audio inpainting and music completion, advancing beyond systems that typically excel at only specific tasks," said Xue. "The model has numerous potential applications, spanning across film production, content creation, and gaming."

AudioX could soon be further improved and deployed in a variety of settings. For example, it could assist creative professionals in the production of films, animations, and social media content.

"Imagine a filmmaker no longer needing a Foley artist for every scene," explained Xue. "AudioX could automatically generate sounds like footsteps in snow, creaking doors, or rustling leaves based solely on the visual footage. Similarly, it could be used by influencers to instantly add the perfect background music to their TikTok dance videos or by YouTubers to enhance their travel vlogs with authentic local soundscapes—all generated on-demand."

In the future, AudioX could also be employed by video game developers to create immersive and adaptive games, where background sounds dynamically change based on player actions. For instance, as a character transitions from a concrete floor to grass, the sound of their footsteps could shift, or the game's soundtrack could gradually intensify as they approach a threat or enemy.

"Our next planned steps include extending AudioX to long-form audio generation," added Xue. "Additionally, instead of merely learning associations from multimodal data, we aim to integrate human aesthetic understanding within a reinforcement learning framework to better align with subjective preferences."

References:

- https://www.stardrive.org/index.php/sd-science-news/71464-new-model-can-generate-audio-and-music-tracks-from-diverse-data-inputs

- https://techxplore.com/news/2025-04-generate-audio-music-tracks-diverse.html#google_vignette

Cite this article:

Janani R (2025), New Model Generates Audio and Music Tracks from Various Data Inputs, AnaTechMaz, pp.132