Meta Accused of Using Pirated Books for AI—U.S. Courts to Rule on 'Fair Use'

The Atlantic recently claimed that Meta, the parent company of Facebook and Instagram, used LibGen—an illegal book repository—to train its generative AI. Founded in 2008 by Russian scientists, LibGen hosts over 7.5 million books and 81 million research papers, making it one of the largest sources of pirated content online.

Training AI on copyrighted material has ignited legal debates and concerns among writers and publishers, who fear devaluation or replacement of their work. While some companies, like OpenAI, have secured formal partnerships with content providers, many publishers and authors oppose the use of their intellectual property without consent or compensation.

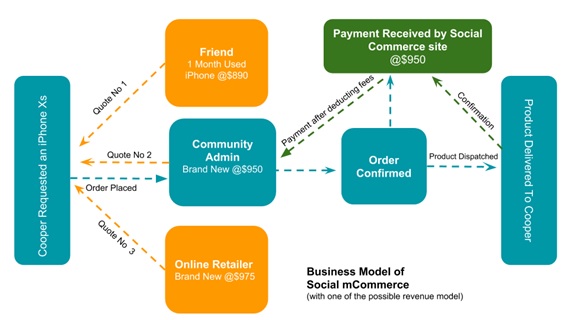

Figure 1. Meta accused of using pirated books to train AI—Fair use debate

Author Tracey Spicer criticized Meta's use of copyrighted books as "peak technocapitalism," while Sophie Cunningham, chair of the Australian Society of Authors, accused the company of showing contempt for writers. Figure 1 illustrates the accusation against Meta and the surrounding fair use debate.

Meta faces a U.S. copyright infringement lawsuit from authors including Michael Chabon, Ta-Nehisi Coates, and Sarah Silverman. Court documents from January allege CEO Mark Zuckerberg approved using the LibGen dataset, despite knowing it contained pirated material [1]. Meta has declined to comment on the case.

The legal disputes focus on a key question: does large-scale data scraping for AI training qualify as "fair use"?

Legal Battles

The legal stakes are high: AI companies train models on publicly available data and use those models to generate chatbot responses that may compete with the original content creators. AI firms justify data scraping under the "fair use" doctrine, which allows unlicensed use of copyrighted material for purposes such as research, teaching, and commentary. However, concerns arise when AI-generated outputs closely mimic an author's style or reproduce substantial portions of copyrighted works.

A pivotal case in this debate is The New York Times vs. OpenAI and Microsoft, which alleges unauthorized use of millions of articles for AI training. While the lawsuit has been narrowed to core copyright and trademark claims, a recent court ruling allowing it to proceed is seen as a victory for The New York Times.

Beyond news publishers like News Corp, individual creators are also challenging AI companies. In 2023, authors including Jonathan Franzen, John Grisham, and George R.R. Martin filed a class-action lawsuit against OpenAI, alleging unauthorized copying of their works—a case that remains unresolved.

Broader Impact

The ongoing legal battles will shape the future of the publishing, media, and AI industries. The issue is pressing because authors already face financial struggles, earning a median income of just over $20,000 in the U.S. and even less in Australia.

In response, the Australian Society of Authors (ASA) is advocating for AI regulation, urging companies to obtain permission before using copyrighted works and to fairly compensate writers. The ASA also calls for transparency in AI training data and clear labeling of AI-generated content.

A key question remains: if AI training on copyrighted material is allowed, what compensation is fair? In 2024, HarperCollins struck a deal allowing AI training on select nonfiction books, offering authors a $2,500 opt-in payment split 50/50 with publishers. However, the Authors Guild argues that writers should receive 75% of the compensation instead.

Possible Responses

Publishers and creators worry about losing control over intellectual property, as AI systems rarely cite sources, reducing the value of attribution. If AI-generated content can replace published works, demand for original material may decline.

With AI-generated content flooding platforms like Amazon, distinguishing and protecting authentic works is becoming increasingly difficult. Lawmakers worldwide are exploring copyright updates to balance innovation with intellectual property rights, but their approaches vary.

The European Union's 2024 Artificial Intelligence Act introduces copyright provisions to help rights holders identify infringements, though these provisions remain relatively weak. Meanwhile, U.S. Vice President JD Vance opposes AI regulation, calling it "authoritarian censorship" that stifles innovation.

U.S. tech giants like OpenAI and Google advocate for broad AI training rights under the "fair use" doctrine, arguing that unrestricted access to copyrighted material supports learning and progress. However, this stance raises major concerns for content creators.

Agreement or Dispute?

Efforts are underway worldwide to establish models that ensure fair compensation for creators and publishers while allowing AI companies to access data for training. Since mid-2023, several academic publishers, including Informa, Wiley, and Oxford University Press, have signed licensing agreements with AI firms. Similarly, some publishers, like Black Inc. in Australia, have introduced opt-in agreements for authors to permit AI training on their work.

New licensing platforms, such as Created by Humans, are emerging to facilitate legal AI training while distinguishing human-written content from AI-generated material [2]. Meanwhile, the Australian government has yet to introduce specific AI regulations but has implemented voluntary AI Ethics Principles emphasizing transparency, accountability, and fairness.

The legality of using copyrighted works for AI training remains contentious, and resolving it requires balancing technological advancement against fair compensation for original content. Court rulings in the ongoing cases may help define the boundaries of fair use and appropriate compensation models, shaping the future relationship between AI development and human creativity.

References:

[1] https://au.news.yahoo.com/meta-allegedly-used-pirated-books-013905987.html

[2] https://techxplore.com/news/2025-04-meta-allegedly-pirated-ai-courts.html

Cite this article:

Janani R (2025), Meta Accused of Using Pirated Books for AI—U.S. Courts to Rule on 'Fair Use', AnaTechMaz, pp.79