Adaptive AI: Empowering Machines to Conquer Uncertainty in the Real World

Researchers from MIT and the Technion have developed an algorithm that combines imitation learning and reinforcement learning to train machines more effectively. In imitation learning, the machine mimics the behavior of a teacher; in reinforcement learning, it learns through trial and error. [1]

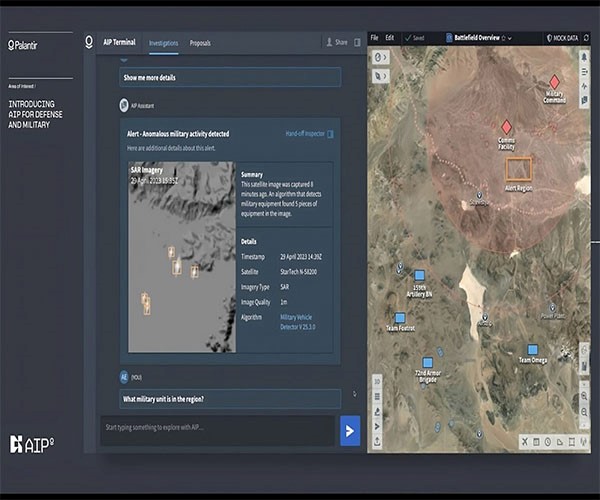

Figure 1. Teacher-student learning method in ML.

Figure 1 shows the teacher-student learning method in ML. The algorithm dynamically determines when the machine should imitate the teacher and when it should explore on its own. It allows the machine to diverge from copying the teacher when the teacher's performance is either too good or not good enough. The machine can return to following the teacher later in training if doing so would lead to better results and faster learning. [1]

By combining both types of learning, the researchers found that their approach enabled machines to learn tasks more effectively than methods that used only one type of learning. [1]

This dynamic learning approach has potential applications in training machines for real-world situations with uncertainties, such as training a robot to navigate inside an unfamiliar building. By adapting its learning strategy based on the teacher's performance, the machine can improve its training process and become more adept at handling uncertain and unfamiliar situations. [1]

The research by Shenfeld, Hong, Tamar, and Agrawal will be presented at the International Conference on Machine Learning. [1]

Striking a balance

The researchers proposed a novel approach to balancing imitation learning and reinforcement learning by training two students. The first student learns with a weighted combination of reinforcement learning and imitation learning, while the second student learns solely through reinforcement learning. [1]
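To make the first student's objective concrete, here is a minimal Python sketch. It assumes the "weighted combination" is a plain weighted sum of two scalar losses; the article does not give the exact form, and the names `combined_loss`, `rl_loss`, `imitation_loss`, and `imitation_weight` are hypothetical.

```python
def combined_loss(rl_loss: float, imitation_loss: float, imitation_weight: float) -> float:
    """Return the RL loss plus the imitation loss scaled by a non-negative weight.

    Assumption: a simple weighted sum. The source only says the first student
    uses "a weighted combination" of the two learning signals.
    """
    if imitation_weight < 0:
        raise ValueError("imitation_weight must be non-negative")
    return rl_loss + imitation_weight * imitation_loss


# With a weight of 0 the first student trains exactly like the RL-only student.
rl_only = combined_loss(rl_loss=2.0, imitation_loss=4.0, imitation_weight=0.0)

# A larger weight pulls the first student toward copying the teacher.
guided = combined_loss(rl_loss=2.0, imitation_loss=4.0, imitation_weight=0.5)
```

Under this assumption, a single number controls how strongly the first student follows the teacher, which is what makes an automatic adjustment possible.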

The algorithm continuously compares the performance of the two students. If the first student, which incorporates imitation learning, outperforms the second student, the algorithm increases the weight on imitation learning for training the first student. Conversely, if the second student, relying only on reinforcement learning, starts achieving better results, the algorithm focuses more on reinforcement learning for the first student. [1]
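The comparison described above can be sketched as a small update rule. This is an illustration under stated assumptions, not the researchers' published rule: it assumes each student's performance is summarized as an average return, and that the weight moves in proportion to the gap between the two. The function name, `step_size`, and `max_weight` are hypothetical.

```python
def update_imitation_weight(
    weight: float,
    guided_return: float,
    rl_only_return: float,
    step_size: float = 0.5,
    max_weight: float = 10.0,
) -> float:
    """Nudge the imitation weight toward whichever student is doing better.

    guided_return:  average return of the student using imitation + RL.
    rl_only_return: average return of the student using RL alone.
    Assumption: a proportional update clamped to [0, max_weight].
    """
    gap = guided_return - rl_only_return
    new_weight = weight + step_size * gap
    return min(max(new_weight, 0.0), max_weight)


# Guided student is ahead, so the imitation weight goes up.
raised = update_imitation_weight(1.0, guided_return=5.0, rl_only_return=3.0)

# RL-only student is ahead, so the imitation weight goes down (never below 0).
lowered = update_imitation_weight(1.0, guided_return=3.0, rl_only_return=5.0)
```

Clamping at zero means that, in this sketch, the first student falls back to pure reinforcement learning when imitation stops helping, and the weight can grow again later if the guided student regains the lead.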

This dynamic comparison and adjustment allow the algorithm to adaptively determine which learning method produces superior outcomes at different stages of the training process. Unlike brute-force methods that require repeated iterations to find an optimal balance, this approach dynamically selects the most effective technique throughout training. [1]

As a result, the adaptive algorithm is more efficient at teaching the students than non-adaptive methods. By automatically adjusting the weighting of the imitation learning and reinforcement learning objectives, the algorithm optimizes the learning process and improves the students' overall performance. [1]

According to Shenfeld, this innovation contributes to the algorithm's ability to effectively teach students in a more adaptive and flexible manner compared to previous approaches. [1]

Solving tough problems

The researchers conducted several simulated teacher-student training experiments to test their approach. One experiment involved navigating a maze of lava, where the teacher had access to the full map while the student could only see a limited patch. The algorithm achieved an almost perfect success rate in all testing environments and demonstrated faster learning compared to other methods. [1]
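The gap between what the teacher and the student can see in the maze experiment can be illustrated with a toy grid. The map layout, the cell symbols, the `UNKNOWN` marker, and the `local_patch` helper below are all hypothetical; the article only states that the teacher sees the full map while the student sees a limited patch.

```python
from typing import List

UNKNOWN = "?"  # hypothetical marker for cells that fall outside the map


def local_patch(grid: List[str], row: int, col: int, radius: int = 1) -> List[str]:
    """Return the square window of the grid centered on (row, col).

    Cells beyond the map boundary are filled with UNKNOWN.
    """
    patch = []
    for r in range(row - radius, row + radius + 1):
        cells = []
        for c in range(col - radius, col + radius + 1):
            inside = 0 <= r < len(grid) and 0 <= c < len(grid[r])
            cells.append(grid[r][c] if inside else UNKNOWN)
        patch.append("".join(cells))
    return patch


# Toy map: S = start, L = lava, G = goal, . = safe floor.
full_map = [
    "S.L.",
    "..L.",
    "...G",
]

teacher_view = full_map                                       # privileged: the whole map
student_view = local_patch(full_map, row=0, col=0, radius=1)  # only a 3x3 window
```

From the start cell in this toy map, the student's window contains no lava and no goal, so copying the teacher's moves alone gives it no way to tell why those moves are good. This is the kind of situation where exploring on its own can help.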

In a more challenging simulation, a robotic hand with touch sensors but no vision had to reorient a pen to the correct pose. The teacher had knowledge of the pen's actual orientation, while the student relied solely on touch sensors. The researchers' method outperformed other approaches that used only imitation learning or only reinforcement learning. [1]

These experiments showcase the potential of teacher-student learning in training robots for complex object manipulation and locomotion tasks. The algorithm's success in simulations lays the groundwork for transferring these learned skills to real-world scenarios. The Improbable AI lab envisions applying these techniques to future home robots capable of performing various manipulation tasks. [1]

Teacher-student learning has been effective in training robots by leveraging the privileged information available to the teacher in simulations. However, when the student is deployed in the real world, it lacks access to such detailed information. The researchers believe their algorithm can improve performance across different applications where imitation learning or reinforcement learning is utilized. [1]

One potential application is using large language models like GPT-4 as teachers to train smaller student models to excel in specific tasks. Additionally, analyzing the similarities and differences between machine learning from teachers and humans learning from their own teachers could lead to improvements in the learning experience. [1]

Overall, the researchers' algorithm shows promise in enhancing machine learning techniques and can be applied to various domains to achieve better performance and more effective learning. [1]

Source: EurekAlert

References:

- [1] EurekAlert, https://www.eurekalert.org/news-releases/990932

Cite this article:

Hana M (2023), Adaptive AI: Empowering Machines to Conquer Uncertainty in the Real World, AnaTechMaz, pp.274