New Hardware Approach Offers Faster Computation for AI with Less Energy

The amount of time, effort, and money needed to train ever-more-complex neural network models is soaring as researchers push the limits of machine learning. According to a multidisciplinary team of MIT researchers, analogue deep learning, a new branch of artificial intelligence, promises faster computation with less energy consumption.

Figure 1: Analogue deep learning promises faster computation.

As Figure 1 shows, programmable resistors are the key building blocks in analogue deep learning, just as transistors are the core elements of digital processors. By repeating arrays of programmable resistors in complex layers, researchers can create a network of analogue artificial "neurons" and "synapses" that executes computations just like a digital neural network.

This network can then be trained to achieve complex AI tasks like image recognition and natural language processing. [1]
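To make the idea of computing with resistor arrays concrete, the following is a minimal sketch of how an idealised array could carry out the multiply-and-sum step of a neural network layer. It assumes a simple crossbar layout in which each resistor obeys Ohm's law and the currents on a shared output wire add up (Kirchhoff's current law). The function name, the array layout, and the numbers are hypothetical illustrations, not details taken from the MIT work.

```python
def crossbar_output_currents(conductances, input_voltages):
    """Return the output currents of an idealised resistor crossbar.

    conductances[i][j] is the conductance of the resistor joining input
    row i to output column j; input_voltages[i] is the voltage on row i.
    Units are arbitrary here (illustrative values only).
    """
    n_rows = len(conductances)
    if n_rows != len(input_voltages):
        raise ValueError("one input voltage per row is required")
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for i in range(n_rows):
        for j in range(n_cols):
            # Ohm's law for one resistor (I = G * V); the shared column
            # wire sums the contributions from every row.
            currents[j] += conductances[i][j] * input_voltages[i]
    return currents


# Hypothetical 2x3 array: 2 input rows, 3 output columns.
G = [[1.0, 2.0, 0.0],
     [0.5, 0.0, 4.0]]
V = [2.0, 4.0]
print(crossbar_output_currents(G, V))  # [4.0, 4.0, 16.0]
```

In this picture the conductances play the role of the synaptic weights, and the whole weighted sum is produced in a single physical step rather than by a sequence of digital multiply-accumulate operations.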

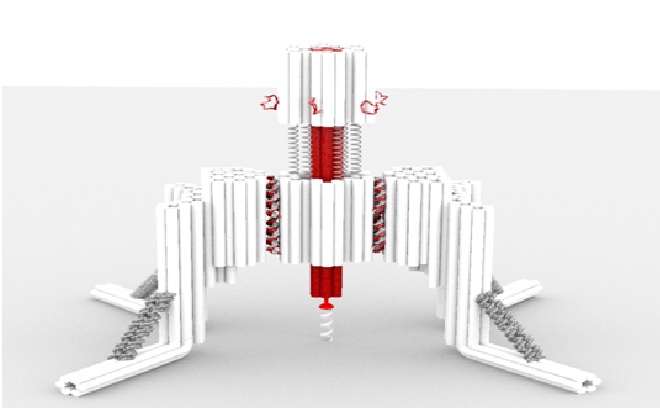

Researchers set out to push the speed limits of a type of human-made analogue synapse that they had previously developed. They utilized a practical inorganic material in the fabrication process that enables their devices to run 1 million times faster than previous versions, which is also about 1 million times faster than the synapses in the human brain.

This inorganic material also makes the resistor extremely energy-efficient. Unlike materials used in the earlier version of their device, the new material is compatible with silicon fabrication techniques. This change has enabled fabricating devices at the nanometer scale and could pave the way for integration into commercial computing hardware for deep-learning applications.

“With that key insight, and the very powerful nanofabrication techniques we have at MIT.nano, we have been able to put these pieces together and demonstrate that these devices are intrinsically very fast and operate with reasonable voltages,” said Jesus A. del Alamo. [2]

“The working mechanism of the device is the electrochemical insertion of the smallest ion, the proton, into an insulating oxide to modulate its electronic conductivity. Because we are working with very thin devices, we could accelerate the motion of this ion by using a strong electric field and push these ionic devices to the nanosecond operation regime,” explained Bilge Yildiz, the Breene M. Kerr Professor at MIT.
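The programming behaviour described above can be illustrated with a deliberately simplified toy model. It assumes that each voltage pulse moves a fixed amount of charge and therefore shifts the conductance by a fixed step, that positive pulses raise the conductance and negative pulses lower it, and that the conductance stays within a bounded range. The function name, the step size, and the bounds are all hypothetical; the real device physics is not specified at this level in the article.

```python
def apply_pulses(conductance, n_pulses, step=0.125, g_min=0.0, g_max=1.0):
    """Toy model of programming one resistor with voltage pulses.

    Assumption (not from the article): every pulse changes the
    conductance by the same `step`. A positive `n_pulses` stands for
    pulses that insert protons, a negative value for pulses that
    remove them. The result is clipped to the range [g_min, g_max].
    """
    updated = conductance + n_pulses * step
    return max(g_min, min(g_max, updated))


g = 0.5                      # starting conductance, arbitrary units
g = apply_pulses(g, 2)       # two positive pulses
print(g)                     # 0.75
g = apply_pulses(g, -10)     # many negative pulses saturate at g_min
print(g)                     # 0.0
```

The point of the sketch is only that a stored analogue value can be nudged up or down by short electrical pulses; the faster each pulse can be, the faster the stored weights can be updated during training.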

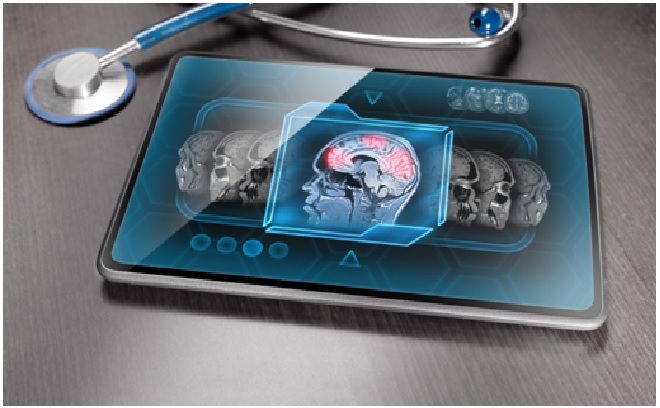

These programmable resistors vastly increase the speed at which a neural network is trained, while drastically reducing the cost and energy to perform that training. This could help scientists develop deep learning models much more quickly, which could then be applied in uses like self-driving cars, fraud detection, or medical image analysis. [3]

References:

- https://www.bignewsnetwork.com/news/272635944/study-finds-new-hardware-offers-faster-computation-for-artificial-intelligence-with-much-less-energy

- https://aljazeera.co.in/politics/study-finds-new-hardware-offers-faster-computation-for-artificial-intelligence-with-much-less-energy/

- https://theprint.in/science/study-finds-new-hardware-offers-faster-computation-for-artificial-intelligence-with-much-less-energy/1059882/

Cite this article:

Sri Vasagi K (2022), New Hardware Approach Faster Computation for AI with Less Energy, AnaTechMaz, pp.138