Simulated human eye movement aims to train metaverse platforms

Computer engineers at Duke University, supported by grants from the U.S. National Science Foundation, have developed virtual eyes that simulate how humans look at the world. The virtual eyes are accurate enough for companies to use them to train virtual reality and augmented reality applications.

"The aims of the project are to provide improved mobile augmented reality by using the Internet of Things to source additional information, and to make mobile augmented reality more reliable and accessible for real-world applications," said Prabhakaran Balakrishnan, a program director in NSF's Division of Information and Intelligent Systems. [1]

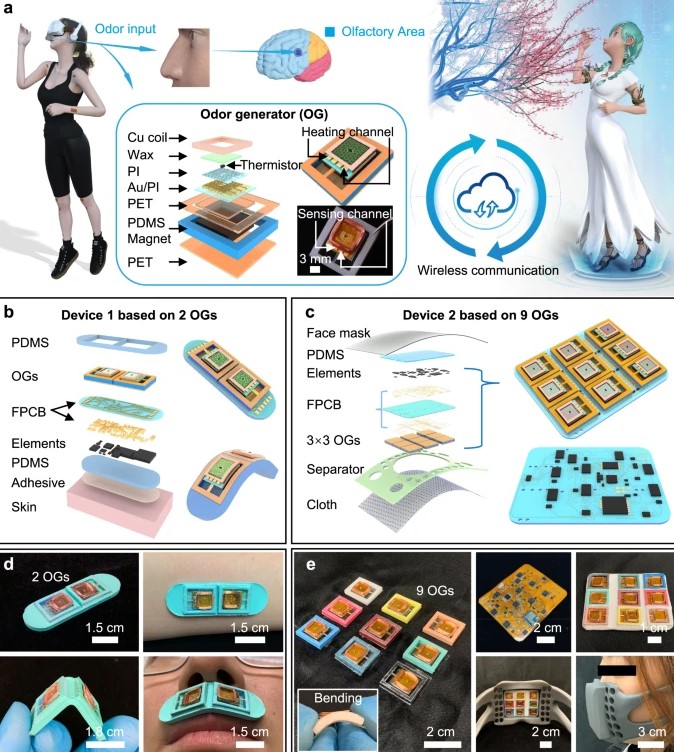

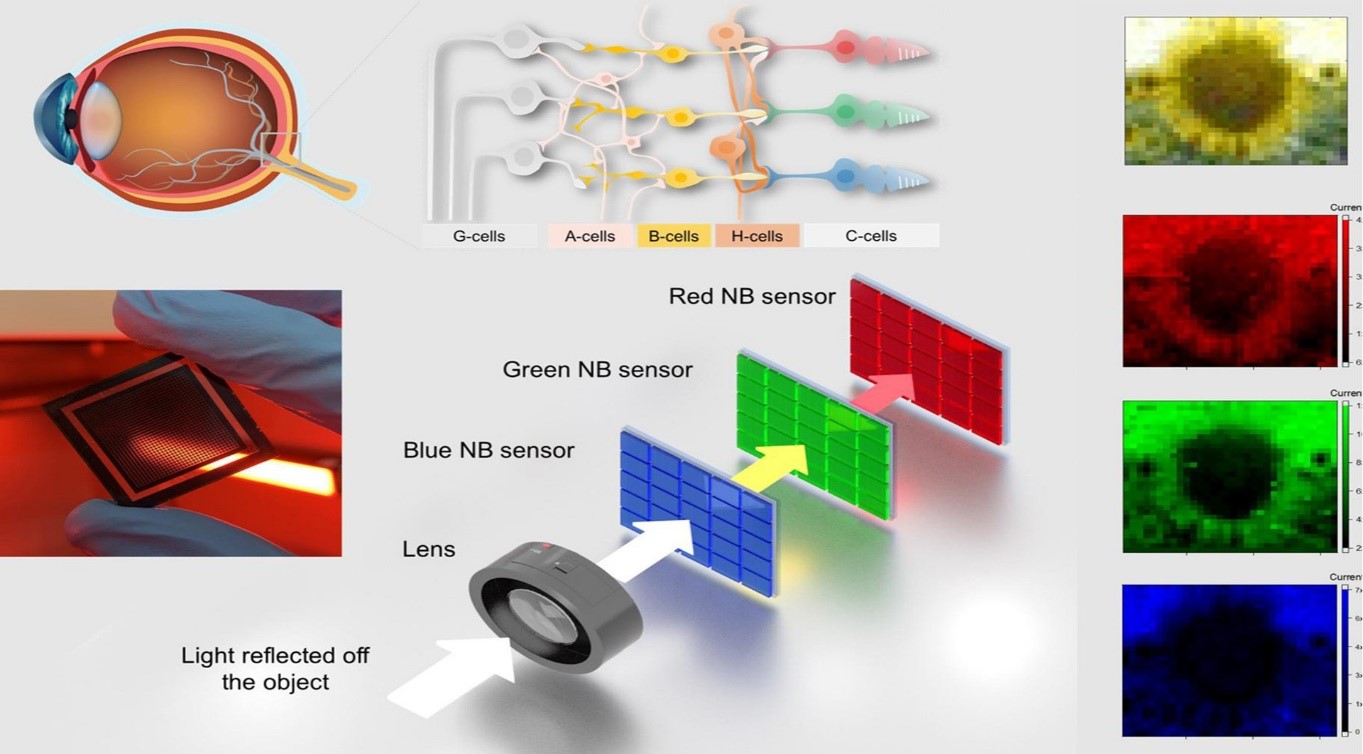

Figure 1. Simulated human eye movement aims to train metaverse platforms

Eye tracking, illustrated in Figure 1, is a sensor technology that can detect a person’s presence and follow what they are looking at in real time. The technology converts eye movements into a data stream that contains information such as pupil position, the gaze vector for each eye, and the gaze point. Essentially, the technology decodes eye movements and translates them into insights that can be used in a wide range of applications or as an additional input modality. It is used in medicine, the military, and neuromarketing, among other fields. [2]
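The data stream described above can be pictured as a sequence of timestamped samples. The following Python sketch is only an illustration: the `GazeSample` type, its field names, and the `gaze_point_on_plane` helper are hypothetical and do not come from EyeSyn or any particular eye tracker. It assumes a simple geometry in which the display is a flat plane at a fixed depth in front of the eye.

```python
from dataclasses import dataclass
from typing import Tuple

Vec2 = Tuple[float, float]
Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class GazeSample:
    """One record of a (hypothetical) eye-tracking data stream."""
    timestamp_s: float   # capture time in seconds
    left_pupil: Vec2     # pupil position of the left eye
    right_pupil: Vec2    # pupil position of the right eye
    left_gaze: Vec3      # gaze direction vector of the left eye
    right_gaze: Vec3     # gaze direction vector of the right eye
    gaze_point: Vec2     # where the gaze lands on the display plane


def gaze_point_on_plane(origin: Vec3, direction: Vec3, plane_z: float) -> Vec2:
    """Intersect a gaze ray with the plane z = plane_z (assumed display plane)."""
    ox, oy, oz = origin
    dx, dy, dz = direction
    if dz == 0:
        raise ValueError("gaze ray is parallel to the display plane")
    t = (plane_z - oz) / dz  # ray parameter at the intersection
    if t < 0:
        raise ValueError("display plane is behind the eye")
    return (ox + t * dx, oy + t * dy)


# Usage: an eye at the origin looking slightly to the right at a plane 2 units away.
point = gaze_point_on_plane((0.0, 0.0, 0.0), (0.5, 0.0, 1.0), plane_z=2.0)
sample = GazeSample(
    timestamp_s=0.0,
    left_pupil=(0.5, 0.5),
    right_pupil=(0.5, 0.5),
    left_gaze=(0.5, 0.0, 1.0),
    right_gaze=(0.5, 0.0, 1.0),
    gaze_point=point,
)
```

A real tracker would report these values in its own coordinate system and units, so the fields here should be read as placeholders for whatever a given device provides.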

To test the accuracy of their synthetic eyes, the researchers turned to publicly available data. They first had the eyes "watch" videos of Dr. Anthony Fauci addressing the media during press conferences and compared the results with eye movement data from actual viewers. They also compared a virtual dataset of their synthetic eyes looking at art with actual datasets collected from people browsing a virtual art museum. The results showed that EyeSyn was able to closely match the distinct patterns of actual gaze signals and simulate the different ways different people's eyes react. [3]

The new technology is called EyeSyn, and it is designed to help developers create applications for the rapidly expanding metaverse. A secondary goal is the protection of user data.

The technology is based on analyzing the tiny movements of a person’s eyes and the dilation of their pupils. Gathering this data can reveal a large amount of information.

For example, human eyes can reveal whether a person is bored or excited, where their concentration is focused, whether they are an expert or a novice at a given task, or even whether they are fluent in a specific language. [4]

References:

- [1] https://beta.nsf.gov/news/simulated-human-eye-movement-aims-train-metaverse-platforms

- [2] https://shasthrasnehi.com/moving-metaverse-to-the-next-level-simulated-human-eye-movement/

- [3] https://www.sciencedaily.com/releases/2022/03/220307132000.htm

- [4] https://www.digitaljournal.com/tech-science/staring-into-the-metaverse-improving-how-we-see-the-virtual-world/article

Cite this article:

Thanusri swetha J (2022), Simulated human eye movement aims to train metaverse platforms, AnaTechMaz, pp. 183