TPUs - Google’s Custom AI Hardware

Tensor Processing Units (TPUs) are Google's custom-designed chips created specifically for accelerating machine learning workloads. This blog post delves into the architecture, benefits, and applications of TPUs, illustrating why they are a game-changer in the field of AI.

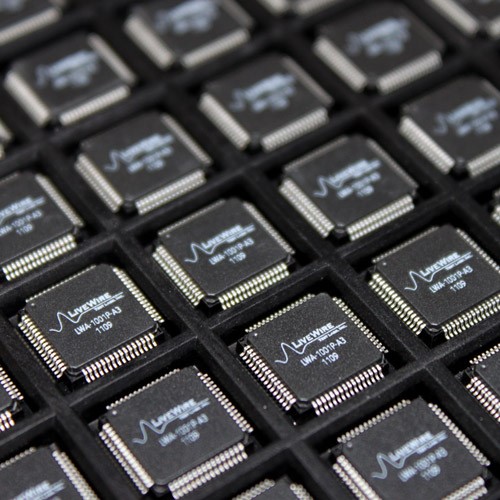

Figure 1. TPU.

Purpose of TPUs

TPUs were developed to address the limitations of existing hardware in handling the specific demands of machine learning. While GPUs offered significant improvements over CPUs, Google identified opportunities for further optimization, particularly for the tensor operations at the heart of neural networks [1]. Figure 1 shows a TPU.

Key Developments

- 2015: Google began using its first TPU internally to accelerate TensorFlow workloads, primarily neural-network inference. The chip was publicly announced in 2016.

- Evolution: Successive generations, TPU v2 (2017), TPU v3 (2018), and TPU v4 (2021), each delivered higher performance and new capabilities. Starting with TPU v2, TPUs supported training as well as inference.

TPU Architecture

TPUs are specialized for the high computational demands of deep learning, particularly for matrix and tensor operations.
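To see why matrix throughput matters so much, consider a single fully connected layer: its forward pass is a matrix multiplication followed by a bias add and an activation. The plain-Python sketch below makes that explicit. The shapes, values, and helper names are illustrative assumptions, not TPU code. Multiplying an m × k matrix by a k × n matrix costs roughly 2·m·k·n floating-point operations, and that is the work TPUs are built to accelerate.

```python
# Minimal sketch: a fully connected (dense) layer is a matrix multiply plus a
# bias add. Shapes, values, and helper names are illustrative assumptions.

def matmul(a, b):
    """Multiply an (m x k) matrix by a (k x n) matrix, both given as nested lists."""
    k, n = len(b), len(b[0])
    return [[sum(row[p] * b[p][j] for p in range(k)) for j in range(n)] for row in a]


def dense(x, w, bias):
    """Dense layer forward pass: relu(x @ w + bias)."""
    y = matmul(x, w)
    return [[max(0.0, v + bias[j]) for j, v in enumerate(row)] for row in y]


# A batch of 2 examples with 3 features each, mapped to 2 outputs.
x = [[1.0, 2.0, 3.0],
     [0.5, -1.0, 2.0]]
w = [[0.1, -0.2],
     [0.3, 0.4],
     [-0.5, 0.6]]
bias = [0.0, 0.1]

print(dense(x, w, bias))
```

In a real model the batch and layer sizes are in the thousands, so nearly all of the compute lands in the matmul call.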

Key Architectural Features

- Matrix Multiplication Units (MXUs): Hardware units optimized for the high-speed multiplication of matrices, which is a fundamental operation in neural networks.

- Large On-chip Memory: Large on-chip buffers keep data close to the computation units, and from TPU v2 onward high-bandwidth memory (HBM) sits in the same package, reducing latency and increasing throughput.

- Quantization and Reduced-Precision Support: Efficient handling of lower-precision data types (8-bit integers in the first-generation TPU, bfloat16 in later generations) to improve performance and reduce power consumption without significantly sacrificing accuracy.

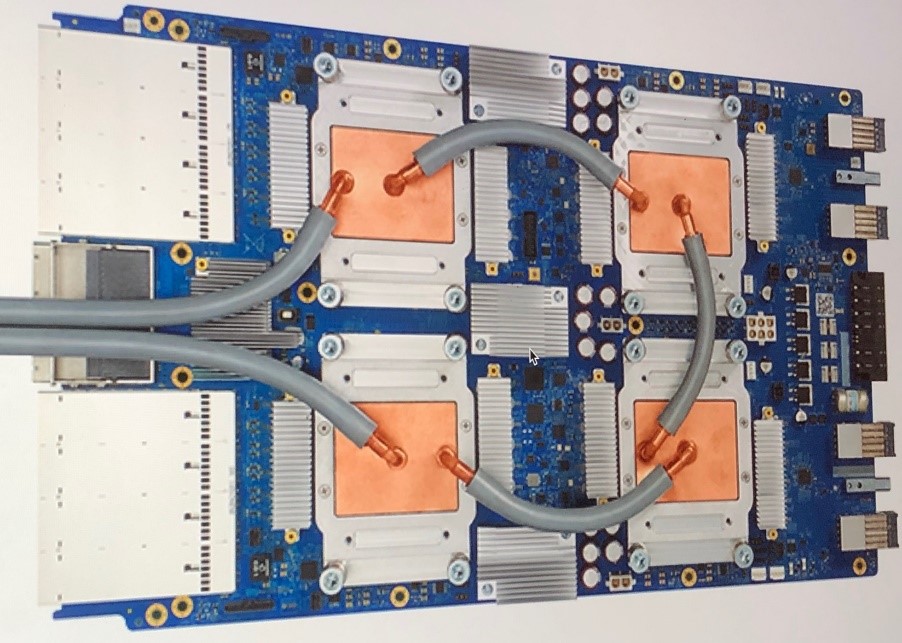

- Scalability: Designed to work in pods, allowing for massive scaling of computational resources to handle large and complex models.
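The quantization idea can be sketched in a few lines. The example below is a minimal illustration that assumes symmetric, per-tensor int8 scales; the helper names and values are hypothetical, and real TPU toolchains pick scales and rounding in their own ways. Floats are mapped to 8-bit integers, multiplied and summed as integers, the way 8-bit multiply-accumulate hardware accumulates into wider 32-bit registers, and the result is then rescaled back to a float.

```python
# Minimal sketch of symmetric int8 quantization with per-tensor scales
# (an assumption for illustration): compute in 8-bit integers, accumulate wider.

def quantize(values, num_bits=8):
    """Map floats to signed ints in [-(2^(b-1)-1), 2^(b-1)-1] using one scale."""
    qmax = 2 ** (num_bits - 1) - 1              # 127 for 8 bits
    max_abs = max(abs(v) for v in values) or 1.0  # avoid a zero scale
    scale = max_abs / qmax
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def int_dot(qa, qb):
    """Integer dot product; Python ints stand in for 32-bit accumulators."""
    acc = 0
    for x, y in zip(qa, qb):
        acc += x * y  # int8 x int8 products summed exactly
    return acc


a = [0.9, -0.4, 0.25, 0.6]
b = [0.5, 0.8, -0.3, 0.1]

qa, sa = quantize(a)
qb, sb = quantize(b)
approx = int_dot(qa, qb) * sa * sb  # dequantize the accumulated integer result
exact = sum(x * y for x, y in zip(a, b))
print(f"exact={exact:.4f} approx={approx:.4f}")
```

For these inputs the dequantized result lands close to the exact float dot product; the small gap is the rounding error introduced by 8-bit quantization.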

Differences Between TPUs and GPUs

While both TPUs and GPUs are used to accelerate AI workloads, TPUs offer specific advantages for TensorFlow-based applications and for other frameworks that target TPUs through Google's XLA compiler.

Key Differences

- Optimization: TPUs are highly optimized for the dense tensor operations that TensorFlow and other XLA-based frameworks generate, often delivering higher efficiency than GPUs for these specific workloads.

- Ease of Use: Integration with Google Cloud makes it easier for developers to access and deploy TPUs without needing to manage hardware.

- Performance: For certain tensor operations, TPUs can outperform GPUs due to their specialized architecture.
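Much of that specialized architecture comes down to how the MXUs move data. Google's paper on the first-generation TPU describes its matrix unit as a 256 × 256 systolic array of 8-bit multiply-accumulate cells: operands are pumped through a grid of simple processing elements that pass values to their neighbors, so each operand fetched from memory is reused many times. The sketch below simulates a tiny output-stationary systolic array in plain Python. It is a simplified teaching model (the TPU v1 design keeps weights stationary in the array instead), and the function name is hypothetical.

```python
def systolic_matmul(a, b):
    """Simulate an output-stationary systolic array computing a @ b.

    The processing element (PE) at row i, column j owns output c[i][j].
    Each cycle, values of `a` move one PE to the right and values of `b`
    move one PE down; the inputs are skewed so that a[i][p] and b[p][j]
    arrive at PE (i, j) in the same cycle.
    """
    m, k, n = len(a), len(b), len(b[0])
    c = [[0] * n for _ in range(m)]
    a_reg = [[0] * n for _ in range(m)]  # `a` operand currently held by each PE
    b_reg = [[0] * n for _ in range(m)]  # `b` operand currently held by each PE
    cycles = m + n + k - 2               # last operands meet at PE (m-1, n-1)

    for t in range(cycles):
        # Shift a one PE to the right and b one PE down (iterate backwards so
        # values are not overwritten before they move).
        for i in range(m):
            for j in range(n - 1, 0, -1):
                a_reg[i][j] = a_reg[i][j - 1]
        for j in range(n):
            for i in range(m - 1, 0, -1):
                b_reg[i][j] = b_reg[i - 1][j]

        # Feed skewed inputs at the left and top edges: row i of `a` starts
        # i cycles late, column j of `b` starts j cycles late; zeros pad the rest.
        for i in range(m):
            p = t - i
            a_reg[i][0] = a[i][p] if 0 <= p < k else 0
        for j in range(n):
            p = t - j
            b_reg[0][j] = b[p][j] if 0 <= p < k else 0

        # Every PE performs one multiply-accumulate; on hardware these run in parallel.
        for i in range(m):
            for j in range(n):
                c[i][j] += a_reg[i][j] * b_reg[i][j]

    return c, cycles


c, cycles = systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(c, cycles)  # [[19, 22], [43, 50]] 4
```

In this model an m × k by k × n product finishes in m + n + k - 2 cycles even though it performs m·k·n multiply-accumulates, because every processing element works at the same time.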

Integration with Google Cloud

TPUs are accessible through Google Cloud, offering developers powerful tools to train and deploy machine learning models.

Key Features

- Cloud TPU Services: On-demand access to TPU resources via Google Cloud, which makes scaling up straightforward and avoids paying for idle hardware.

- TPU Pods: Groups of TPUs connected to work together, enabling the training of large models with high efficiency.

- TensorFlow Compatibility: Seamless integration with TensorFlow, making TPUs easy to adopt for developers already using this framework. JAX and PyTorch (via PyTorch/XLA) can also target TPUs.
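On the TensorFlow side, the usual entry point is tf.distribute.TPUStrategy. The sketch below follows the pattern in TensorFlow's TPU guide: resolve the TPU, connect to it, initialize it, and build the model inside the strategy's scope. Treat it as a starting point under stated assumptions. The tpu_address values, the helper name build_model_on_tpu, and the toy model are illustrative, and the function only runs on a machine with TensorFlow installed and a TPU attached.

```python
def build_model_on_tpu(tpu_address=""):
    """Connect to a Cloud TPU and build a Keras model replicated across its cores.

    `tpu_address` is an assumption: "" works in some hosted notebooks,
    "local" on a Cloud TPU VM, or the TPU's name/address otherwise.
    """
    import tensorflow as tf  # imported lazily so this file loads without TensorFlow

    # Locate the TPU, connect this program to it, and initialize the TPU system.
    resolver = tf.distribute.cluster_resolver.TPUClusterResolver(tpu=tpu_address)
    tf.config.experimental_connect_to_cluster(resolver)
    tf.tpu.experimental.initialize_tpu_system(resolver)
    strategy = tf.distribute.TPUStrategy(resolver)

    # Variables created inside the scope are replicated across the TPU cores.
    with strategy.scope():
        model = tf.keras.Sequential([
            tf.keras.layers.Dense(128, activation="relu"),  # toy model (hypothetical)
            tf.keras.layers.Dense(10),
        ])
        model.compile(
            optimizer="adam",
            loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
            metrics=["accuracy"],
        )
    return strategy, model
```

Typical use would be strategy, model = build_model_on_tpu("local") followed by model.fit(...) on a tf.data.Dataset. The import sits inside the function so the module can be loaded where TensorFlow is not installed.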

Major Applications

TPUs are used in a wide range of applications, from research to real-time processing tasks.

Key Applications

Deep Learning Training: Accelerating the training process of large neural networks, significantly reducing time to insight.

Real-time Inference: Handling inference tasks for applications requiring low latency, such as voice recognition, image processing, and natural language processing.

Large-scale AI Models: Enabling the development and deployment of large models that would be impractical to train on smaller-scale hardware due to their computational demands.

Research and Development: Facilitating advancements in AI research by providing researchers with powerful tools to experiment with complex models and large datasets.
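Large training jobs on a TPU Pod commonly rely on synchronous data parallelism: each chip holds a copy of the model, computes gradients on its own slice of the batch, and an all-reduce averages those gradients before every replica applies the same update. The sketch below simulates that loop in plain Python for a one-variable linear model. The "devices" are just list slices, and the helper names, learning rate, and synthetic data are illustrative assumptions.

```python
# Minimal sketch of synchronous data parallelism. "Devices" are list slices and
# the all-reduce is simulated with an average; all names are hypothetical.

def local_gradient(w, b, xs, ys):
    """Mean-squared-error gradient for y ~ w*x + b on one device's shard."""
    n = len(xs)
    gw = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    gb = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return gw, gb


def all_reduce_mean(grads):
    """Average per-device gradients (the result an all-reduce provides)."""
    k = len(grads)
    return tuple(sum(g[i] for g in grads) / k for i in range(len(grads[0])))


def train_data_parallel(xs, ys, num_devices=4, lr=0.2, steps=300):
    if len(xs) % num_devices:
        raise ValueError("batch size must be divisible by num_devices")
    # Split the global batch into equal shards, one per device.
    size = len(xs) // num_devices
    shards = [(xs[d * size:(d + 1) * size], ys[d * size:(d + 1) * size])
              for d in range(num_devices)]
    w, b = 0.0, 0.0  # every replica starts from identical parameters
    for _ in range(steps):
        grads = [local_gradient(w, b, sx, sy) for sx, sy in shards]  # parallel on real hardware
        gw, gb = all_reduce_mean(grads)
        w, b = w - lr * gw, b - lr * gb  # identical update applied on every replica
    return w, b


xs = [i / 8 for i in range(16)]
ys = [3.0 * x + 1.0 for x in xs]  # synthetic data from y = 3x + 1
w, b = train_data_parallel(xs, ys)
print(f"w={w:.3f} b={b:.3f}")
```

With equal-sized shards, the averaged gradient equals the full-batch gradient, so adding chips lets each step process a larger batch without changing the math of the update.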

Advantages and Limitations of TPUs

Advantages

Performance: High efficiency and speed for TensorFlow-based and other XLA-compiled workloads.

Scalability: Easily scalable via Google Cloud, enabling the handling of very large and complex models.

Cost-Effectiveness: Access to high-performance hardware on-demand without the need for significant upfront investment in physical infrastructure.

Limitations

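Specialization: TPUs were designed around TensorFlow and are programmed through the XLA compiler. JAX and PyTorch (via PyTorch/XLA) also support them, but frameworks or custom operations outside the XLA ecosystem may be poorly supported, and models with highly dynamic shapes or irregular computation may not map efficiently onto the hardware.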

Accessibility: Primarily available through Google Cloud, which may not be ideal for all users or use cases.
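One practical consequence of this specialization is that XLA compiles a separate program for each distinct set of input shapes, so feeding many different sequence lengths can trigger repeated recompilation. A common workaround is to pad inputs up to a few fixed lengths. The sketch below illustrates the idea in plain Python; the bucket sizes, padding id, and helper names are hypothetical choices, not part of any TPU API.

```python
# Minimal sketch: pad variable-length sequences up to a few fixed lengths so a
# shape-specialized compiler sees only a handful of distinct input shapes.

BUCKETS = (16, 32, 64, 128)  # hypothetical sequence-length buckets
PAD_ID = 0                   # hypothetical padding token id


def bucket_length(n, buckets=BUCKETS):
    """Smallest bucket that fits a sequence of length n."""
    for size in buckets:
        if n <= size:
            return size
    raise ValueError(f"sequence of length {n} exceeds largest bucket {buckets[-1]}")


def pad_batch(batch, pad_id=PAD_ID):
    """Pad every sequence to the bucket of the longest one.

    Returns the padded batch and a mask marking real (1) vs padded (0) tokens.
    """
    target = bucket_length(max(len(seq) for seq in batch))
    padded = [seq + [pad_id] * (target - len(seq)) for seq in batch]
    mask = [[1] * len(seq) + [0] * (target - len(seq)) for seq in batch]
    return padded, mask


batch = [[5, 8, 2], [7] * 20, [1, 1]]
padded, mask = pad_batch(batch)
print(len(padded[0]), sum(mask[1]))  # 32 20
```

The mask lets the model ignore padded positions; the trade-off is some wasted compute on padding in exchange for avoiding recompilation.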

Conclusion

Google's TPUs represent a significant advancement in AI hardware, providing tailored solutions for the specific demands of machine learning tasks. Their specialized architecture, seamless integration with Google Cloud, and strong performance for TensorFlow and other XLA-based workloads make them a valuable tool for researchers and developers.

References:

[1] Tensor Processing Unit, Wikipedia. https://en.wikipedia.org/wiki/Tensor_Processing_Unit

Cite this article:

Hana M (2024), Decoding AI Hardware: A Comprehensive Guide to GPUs, TPUs, FPGAs, and ASICs, AnaTechmaz, pp. 2