Nvidia Presents Liquid-Cooled Graphics Chip for Data Centre

Nvidia has announced a new plan to reduce the power consumption of data centres that process huge amounts of data or train AI models: liquid-cooled graphics cards. The company announced at Computex that it is introducing a liquid-cooled version of its A100 compute board, and claims that it consumes 30% less power than the air-cooled version.

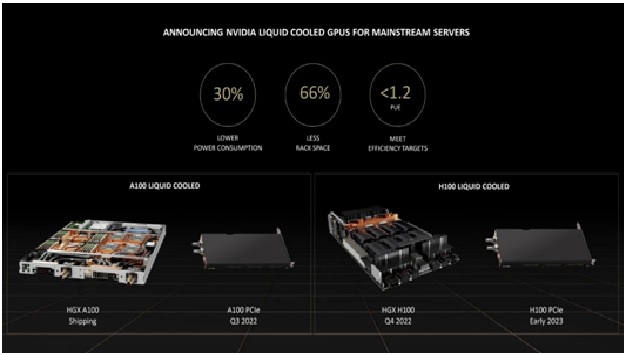

Figure 1: Nvidia liquid-cooled GPUs for mainstream service.

Figure 1 shows Nvidia's liquid-cooled GPUs, and the company says this is not a one-off: it already has more liquid-cooled server boards on its roadmap and suggests bringing the technology to other applications, such as embedded systems that need to stay cool in enclosed spaces.

Nvidia says reducing the energy needed to perform complex calculations could have a big impact: according to the company, data centres use more than one percent of the world’s electricity, and 40 percent of that goes to cooling.

Reducing that by nearly a third would be a big deal, although graphics cards are not the only hardware in a data centre that needs cooling: storage and network equipment also consume power and need to be cooled. Nvidia claims that, with liquid cooling, GPU-accelerated systems would be much more efficient than CPU-only servers on AI and other high-performance tasks. [1]
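To put these shares in perspective, the short Python sketch below works through the arithmetic. It is an illustrative estimate only and rests on assumptions not stated in the sources: that data centres use exactly one percent of world electricity (the article says "more than" one percent), that 40 percent of that goes to cooling, and that the "nearly a third" reduction applies to the cooling share alone. The function and constant names are hypothetical.

```python
# Back-of-the-envelope estimate using the shares quoted in the article.
# Assumption: the ~30% ("nearly a third") reduction applies only to the
# cooling portion of data-centre electricity use.

DATACENTRE_SHARE = 0.01   # "more than one percent" of world electricity (lower bound)
COOLING_FRACTION = 0.40   # fraction of data-centre electricity spent on cooling
REDUCTION = 0.30          # "nearly a third"


def cooling_share_of_world(dc_share: float, cooling_fraction: float) -> float:
    """Fraction of world electricity used to cool data centres."""
    return dc_share * cooling_fraction


def world_savings(dc_share: float, cooling_fraction: float, reduction: float) -> float:
    """Fraction of world electricity saved if cooling energy falls by `reduction`."""
    return cooling_share_of_world(dc_share, cooling_fraction) * reduction


if __name__ == "__main__":
    cooling = cooling_share_of_world(DATACENTRE_SHARE, COOLING_FRACTION)
    saved = world_savings(DATACENTRE_SHARE, COOLING_FRACTION, REDUCTION)
    print(f"Cooling: {cooling:.2%} of world electricity")
    print(f"Potential saving: {saved:.2%} of world electricity")
```

With these inputs, cooling accounts for about 0.4% of world electricity and a 30% cut would save roughly 0.12%. Because the one-percent figure is a lower bound, the real numbers would be somewhat higher.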

Nvidia is adding to its sustainability efforts with the release of its first data centre PCIe GPU using direct-chip cooling. Equinix is qualifying the A100 80GB PCIe Liquid-Cooled GPU for use in its data centres as part of a comprehensive approach to sustainable cooling and heat capture. The GPUs are sampling now and will be generally available this summer.

Data centre operators are turning to liquid cooling, which promises systems that recycle small amounts of fluid in closed systems focused on key hot spots. Liquid-cooled data centres can also pack twice as much computing into the same space. [2]

According to Nvidia, the new partner-developed servers that incorporate its Grace superchip modules are based on four specialized reference designs, each focused on a different set of enterprise use cases.

Two of the reference designs use the Grace Hopper Superchip. They enable hardware makers to build servers optimized for AI training, inference and high-performance computing workloads.

The other two reference designs, which incorporate the Grace CPU Superchip, focus on digital twins, collaboration, graphics processing and video game streaming.

Nvidia also shared details about the hardware ecosystem around its Jetson AGX Orin, a compact computing module designed for running AI models at the edge of the network. Jetson AGX Orin combines a GPU with multiple CPU cores based on Arm Holdings plc designs, as well as other processing components. It can carry out 275 trillion operations per second.

Nvidia said that more than 30 hardware partners plan to launch products based on Jetson AGX Orin. [3]

References:

- [1] https://news.postuszero.com/news/20763/Nvidia-turns-to-liquid-cooling-to-reduce-big-tech%E2%80%99s-power-consumption.html

- [2] https://wccftech.com/nvidia-a100-pcie-gpu-accelerator-gets-liquid-cooled-offers-same-performance-while-consuming-30-less-energy/

- [3] https://siliconangle.com/2022/05/24/nvidia-debuts-liquid-cooled-gpu-data-centers/

Cite this article:

Sri Vasagi K (2022), Nvidia Presents Liquid-Cooled Graphics Chip for Data Centre, Anatechmaz, pp. 302